Protein Data Characterization for Deep Learning: A Comprehensive Guide for Biomedical Research and Drug Discovery

Abstract

This article provides a comprehensive overview of modern protein data characterization for deep learning applications. It covers foundational concepts, from essential databases like the RCSB PDB to core deep learning architectures like Graph Neural Networks (GNNs) and Transformers. The piece explores practical methodologies and tools for data preprocessing and feature extraction, addresses common troubleshooting and optimization challenges, and concludes with rigorous validation and comparative analysis techniques. Designed for researchers, scientists, and drug development professionals, this guide synthesizes the latest advances to empower the development of robust, accurate, and clinically relevant computational models.

The Building Blocks: Foundational Concepts and Data Sources for Protein Characterization

Accurate characterization of protein data underpins deep learning research in protein science, from understanding cellular function to accelerating drug discovery. Proteins execute the vast majority of biological processes by interacting with each other and with other molecules, forming complex networks that regulate everything from signal transduction to metabolic pathways [1]. The dramatic increase in available biological data has enabled deep learning models to uncover patterns and make predictions with unprecedented accuracy [1]. This section examines the three core data types essential for protein data characterization (sequences, structures, and interactions) and places each in the context of modern computational biology research.

Protein Sequences: The Primary Blueprint

Description and Biological Significance

The protein sequence—a linear chain of amino acids—represents the most fundamental data type in bioinformatics. This primary structure dictates how a protein will fold into its three-dimensional conformation, which in turn determines its specific biological function. Deep learning models leverage sequence information to predict various protein properties, including secondary structure, solubility, and subcellular localization. The exponential growth of protein sequence databases has been a critical enabler for the development of large-scale predictive models.
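To make the idea of feeding sequence information to a model concrete, the following minimal sketch one-hot encodes a sequence over the 20 standard amino acids. The alphabet ordering and the all-zero row for unknown residues are illustrative choices, not a convention taken from any particular tool.

```python
# Illustrative alphabet: the 20 standard residues ordered by one-letter code.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def one_hot_encode(sequence):
    """Return an L x 20 matrix as a list of rows.

    Residues outside the 20-letter alphabet (e.g. X, B, Z) become all-zero
    rows; this is an assumption of the sketch, not a standard.
    """
    encoded = []
    for residue in sequence.upper():
        row = [0] * len(AMINO_ACIDS)
        idx = AA_INDEX.get(residue)
        if idx is not None:
            row[idx] = 1
        encoded.append(row)
    return encoded
```

In practice, learned embeddings or evolutionary profiles often replace one-hot vectors, but the matrix shape (sequence length by feature dimension) stays the same.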

Table 1: Major Public Databases for Protein Sequence and Interaction Data

| Database Name | Primary Content | URL | Key Features |
|---|---|---|---|
| UniProt | Protein sequences and functional information | https://www.uniprot.org/ | Comprehensive resource with expertly annotated entries (Swiss-Prot) and automatically annotated entries (TrEMBL) |
| STRING | Known and predicted protein-protein interactions | https://string-db.org/ | Includes both experimental and computationally predicted interactions across numerous species |
| BioGRID | Protein-protein and genetic interactions | https://thebiogrid.org/ | Curated biological interaction repository with focus on genetic and physical interactions |
| IntAct | Molecular interaction data | https://www.ebi.ac.uk/intact/ | Open-source database system for molecular interaction data |
| PDB | 3D protein structures | https://www.rcsb.org/ | Primary repository for experimentally determined 3D structures of proteins and nucleic acids |

Experimental Methodologies for Sequence Determination

Edman Degradation: For targeted, direct sequencing of a purified protein, Edman degradation (stepwise chemical removal and identification of N-terminal residues) remains a foundational approach, though mass spectrometry-based techniques have largely superseded it for high-throughput applications.

Next-Generation Sequencing (NGS) Workflows: While NGS primarily determines nucleic acid sequences, it indirectly provides protein sequences through the genetic code. The standard protocol involves: (1) Library preparation - fragmenting DNA and adding adapters; (2) Cluster generation - amplifying fragments on a flow cell; (3) Sequencing by synthesis - using fluorescently-labeled nucleotides to determine sequence; (4) Data analysis - translating nucleic acid sequences to protein sequences.
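Step (4) of the workflow above, translating a nucleic acid sequence into a protein sequence, can be sketched as follows. The example assumes the standard genetic code, a known reading frame starting at position 0, and a coding-strand input; real pipelines also have to handle frame detection, introns, and alternative codon tables.

```python
from itertools import product

# Standard genetic code, with codons enumerated in T, C, A, G order.
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO)}


def translate(dna, stop_at_stop=True):
    """Translate a coding sequence in frame 0; unknown codons map to 'X'."""
    dna = dna.upper().replace("U", "T")  # accept RNA input as well
    protein = []
    for i in range(0, len(dna) - 2, 3):
        aa = CODON_TABLE.get(dna[i:i + 3], "X")
        if aa == "*" and stop_at_stop:
            break  # stop codon ends the open reading frame
        protein.append(aa)
    return "".join(protein)
```

For example, `translate("ATGGCCAAATAA")` yields `"MAK"`, since the final `TAA` codon is a stop.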

Mass Spectrometry-Based Proteomics: This approach directly identifies protein sequences: (1) Protein extraction and digestion with trypsin; (2) Liquid chromatography separation of peptides; (3) Tandem mass spectrometry (MS/MS) analysis; (4) Database searching using tools like MaxQuant to match spectra to sequences.
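The trypsin digestion in step (1) is also what database search tools simulate in silico to generate candidate peptides. A minimal sketch, assuming the commonly used rule that trypsin cleaves after lysine (K) or arginine (R) unless the next residue is proline (P), and ignoring missed cleavages:

```python
import re


def tryptic_digest(sequence, min_length=1):
    """Split a protein sequence into fully cleaved tryptic peptides.

    Cleavage is C-terminal to K or R, except before P. Missed cleavages and
    modifications are not modelled in this sketch.
    """
    # Zero-width split point: preceded by K/R and not followed by P.
    peptides = re.split(r"(?<=[KR])(?!P)", sequence.upper())
    return [p for p in peptides if len(p) >= min_length]
```

Calling `tryptic_digest("MKRPAKG")` returns `["MK", "RPAK", "G"]`: the `R` followed by `P` is not cleaved.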

Protein Structures: The Three-Dimensional Reality

Description and Biological Significance

Protein structures represent the three-dimensional arrangement of atoms within a protein, providing critical insights into function, stability, and molecular recognition. The structure of a protein is hierarchically organized into primary (sequence), secondary (α-helices and β-sheets), tertiary (overall folding of a single chain), and quaternary (multi-chain complexes) levels of organization. Determining protein structures is crucial for understanding biological function, as the spatial arrangement of residues defines binding sites, catalytic centers, and interaction interfaces [2].

Key Experimental and Computational Approaches

X-ray Crystallography Protocol: (1) Protein purification and crystallization; (2) Data collection - exposing crystals to X-rays and measuring diffraction patterns; (3) Phase determination using molecular replacement or experimental methods; (4) Model building and refinement against electron density maps.

Cryo-Electron Microscopy (Cryo-EM) Workflow: (1) Sample vitrification - rapid freezing of protein solutions in liquid ethane; (2) Data collection - imaging under cryo-conditions using an electron microscope; (3) Particle picking and 2D classification; (4) 3D reconstruction and refinement.

Nuclear Magnetic Resonance (NMR) Spectroscopy Methodology: (1) Sample preparation with isotopic labeling (15N, 13C); (2) Data collection through multi-dimensional NMR experiments; (3) Resonance assignment using sequential walking techniques; (4) Structure calculation with distance and angle restraints.

Computational Structure Prediction: Recent advances in deep learning have revolutionized protein structure prediction. AlphaFold2 uses multiple sequence alignments and attention-based neural networks to predict protein structures with remarkable accuracy [2]. The methodology involves: (1) Multiple sequence alignment construction using tools like HHblits; (2) Template identification from PDB; (3) Evoformer blocks that jointly update the MSA and pair representations; (4) A structure module that converts these representations into 3D atomic coordinates; (5) Recycling iterations for refinement.

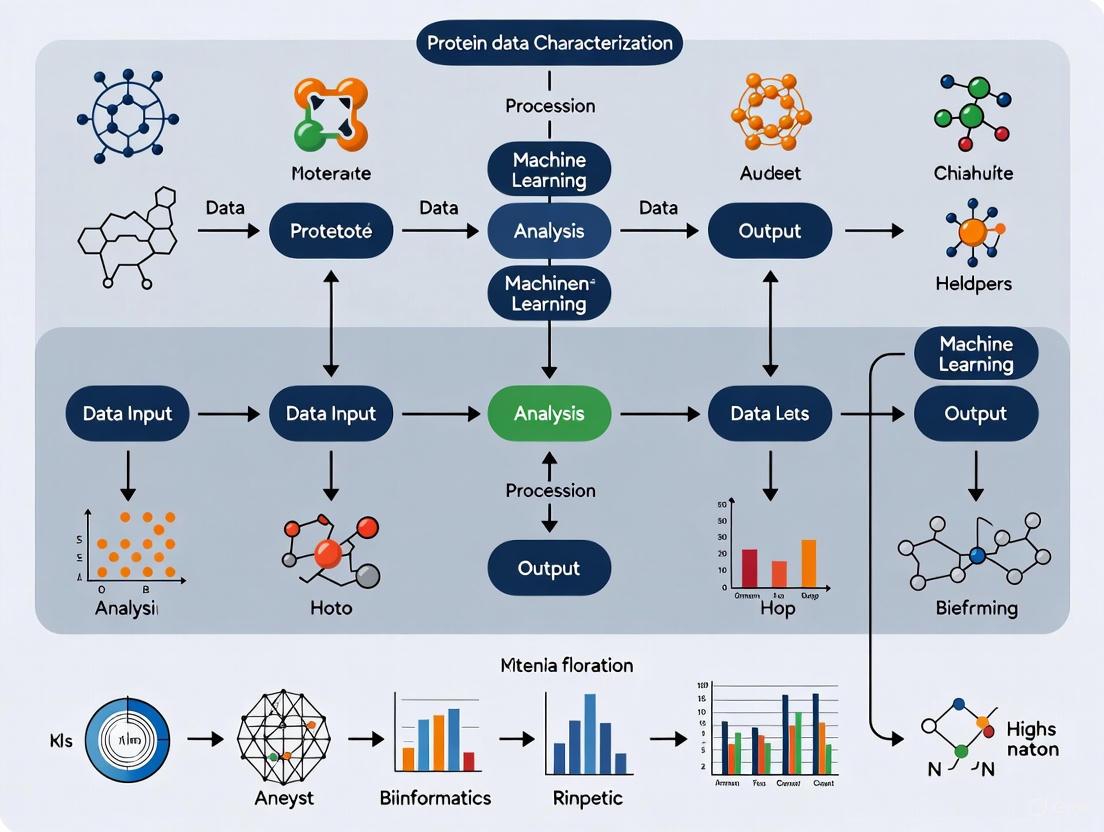

Diagram 1: Deep Learning-Based Protein Structure Prediction Workflow

Protein Interactions: The Dynamic Network

Description and Biological Significance

Protein-protein interactions (PPIs) are fundamental regulators of biological functions, influencing diverse cellular processes including signal transduction, cell cycle regulation, transcriptional control, and metabolic pathway regulation [1]. PPIs can be categorized based on their nature, temporal characteristics, and functions: direct and indirect interactions, stable and transient interactions, as well as homodimeric and heterodimeric interactions [1]. Different types of interactions shape their functional characteristics and work in concert to regulate cellular biological processes. The accurate identification and characterization of PPIs is therefore essential for understanding cellular systems and developing therapeutic interventions.

Key Experimental Methodologies

Yeast Two-Hybrid (Y2H) System Protocol: (1) Clone bait protein into DNA-binding domain vector; (2) Clone prey protein into activation domain vector; (3) Co-transform both vectors into yeast reporter strain; (4) Plate transformations on selective media to detect interactions.

Co-Immunoprecipitation (Co-IP) Workflow: (1) Cell lysis under non-denaturing conditions; (2) Pre-clearing with control beads; (3) Immunoprecipitation with specific antibody; (4) Western blot analysis to detect co-precipitated proteins.

Surface Plasmon Resonance (SPR) Methodology: (1) Immobilize bait protein on sensor chip; (2) Flow prey protein over surface; (3) Monitor association phase; (4) Monitor dissociation phase with buffer alone; (5) Analyze kinetics using appropriate binding models.
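The kinetic analysis in step (5) depends on the binding model chosen. As an illustration only, the sketch below assumes the simplest case, a 1:1 Langmuir interaction, in which the equilibrium dissociation constant is K_D = k_off / k_on and the sensorgram follows single-exponential association and dissociation phases. All parameter names are hypothetical.

```python
import math


def dissociation_constant(k_on, k_off):
    """K_D in molar units, from k_on (1/(M*s)) and k_off (1/s)."""
    return k_off / k_on


def association_response(t, conc, k_on, k_off, r_max):
    """Response at time t (s) while analyte at concentration conc (M) flows."""
    k_obs = k_on * conc + k_off                    # observed rate constant
    r_eq = r_max * conc / (conc + k_off / k_on)    # plateau response
    return r_eq * (1.0 - math.exp(-k_obs * t))


def dissociation_response(t, r0, k_off):
    """Response t seconds after switching to buffer, starting from r0."""
    return r0 * math.exp(-k_off * t)
```

With analyte concentration equal to K_D, the association phase plateaus at half of `r_max`, which is a quick sanity check on fitted parameters.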

Computational Prediction Methods

Computational approaches for predicting PPIs have evolved significantly, with structural information proving particularly valuable. As demonstrated by the PrePPI algorithm, three-dimensional structural information can predict PPIs with accuracy and coverage superior to predictions based on non-structural evidence [3]. The methodology combines structural information with other functional clues using Bayesian statistics: (1) Identify structural representatives for query proteins; (2) Find structural neighbors using structural alignment; (3) Identify template complexes from PDB; (4) Generate interaction models; (5) Evaluate models using empirical scores; (6) Combine evidence using a Bayesian network [3].
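The evidence-combination step can be illustrated with a naive-Bayes style calculation, in which each evidence source contributes a likelihood ratio and the ratios are multiplied with the prior odds. This is a generic sketch under an independence assumption; the function name and the example numbers are hypothetical and are not PrePPI's actual scores or parameters.

```python
import math


def combine_evidence(likelihood_ratios, prior_odds):
    """Return the posterior probability of interaction.

    posterior odds = prior odds * product of likelihood ratios, assuming the
    evidence sources are conditionally independent. Sums of logs are used to
    avoid overflow when many ratios are combined.
    """
    log_odds = math.log(prior_odds) + sum(math.log(lr) for lr in likelihood_ratios)
    posterior_odds = math.exp(log_odds)
    return posterior_odds / (1.0 + posterior_odds)
```

For instance, three hypothetical likelihood ratios of 10, 5, and 2 combined with prior odds of 0.01 give posterior odds of 1, that is, a posterior probability of 0.5.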

Recent deep learning approaches have further advanced the field. Graph Neural Networks (GNNs) based on graph structures and message passing adeptly capture local patterns and global relationships in protein structures [1]. Variants such as Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), and Graph Autoencoders provide flexible toolsets for PPI prediction [1].

Diagram 2: Computational Prediction of Protein-Protein Interactions

Integrating Data Types: Advanced Computational Frameworks

Deep Learning Architectures for Multi-Modal Data Integration

The most powerful computational approaches for protein data characterization integrate multiple data types. Recent advances in deep learning have enabled the development of architectures that can process sequences, structures, and interactions in a unified framework. Graph Neural Networks (GNNs) have emerged as particularly effective tools, as they can naturally represent relational information between proteins or residues [1]. These networks operate by aggregating information from neighboring nodes in a graph, generating representations that reveal complex interactions and spatial dependencies in proteins [1].

Multi-Scale Feature Extraction: Advanced frameworks now incorporate both local residue-level features and global topological properties. For instance, the RGCNPPIS system integrates GCN and GraphSAGE, enabling simultaneous extraction of macro-scale topological patterns and micro-scale structural motifs [1]. Similarly, the AG-GATCN framework developed by Yang et al. integrates Graph Attention Networks and Temporal Convolutional Networks to provide robust solutions against noise interference in PPI analysis [1].

Recent Methodological Advances

Recent years have witnessed significant methodological innovations. DeepSCFold represents a cutting-edge approach that uses sequence-based deep learning models to predict protein-protein structural similarity and interaction probability, providing a foundation for identifying interaction partners and constructing deep paired multiple-sequence alignments for protein complex structure prediction [2]. Benchmark results demonstrate that DeepSCFold significantly increases the accuracy of protein complex structure prediction compared with state-of-the-art methods, achieving an improvement of 11.6% and 10.3% in TM-score compared to AlphaFold-Multimer and AlphaFold3, respectively, on CASP15 targets [2].
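Since the benchmark above is reported in TM-score, it helps to recall how that metric is computed. The sketch below follows the commonly used definition: the mean over the target length of 1 / (1 + (d_i / d0)^2), with d0 = 1.24 (L - 15)^(1/3) - 1.8. It takes already aligned residue-pair distances as input and does not search for the optimal superposition as dedicated tools do. The 0.5 Å floor on d0 for short chains is an assumption of this sketch.

```python
def tm_d0(target_length):
    """Length-dependent distance scale d0 (in angstroms)."""
    d0 = 1.24 * max(target_length - 15, 0) ** (1.0 / 3.0) - 1.8
    return max(d0, 0.5)  # floor for short chains (assumed value)


def tm_score(distances, target_length):
    """TM-score from per-residue distances (angstroms) of aligned pairs.

    Residues of the target that are not aligned contribute zero, because the
    sum is normalised by the full target length.
    """
    d0 = tm_d0(target_length)
    return sum(1.0 / (1.0 + (d / d0) ** 2) for d in distances) / target_length
```

A perfect superposition of every residue gives 1.0, while aligning only half of the target perfectly gives 0.5.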

For challenging cases such as peptide-protein interactions, TopoDockQ introduces topological deep learning that leverages persistent combinatorial Laplacian features to predict DockQ scores for accurately evaluating peptide-protein interface quality [4]. This approach reduces false positives by at least 42% and increases precision by 6.7% across evaluation datasets filtered to ≤70% peptide-protein sequence identity, while maintaining relatively high recall and F1 scores [4].

Table 2: Performance Comparison of Advanced Protein Complex Prediction Methods

| Method | TM-score Improvement | Interface Success Rate | Key Innovation |
|---|---|---|---|
| DeepSCFold | +11.6% vs. AlphaFold-Multimer, +10.3% vs. AlphaFold3 (CASP15 targets) [2] | +24.7% vs. AlphaFold-Multimer, +12.4% vs. AlphaFold3 (antibody-antigen) [2] | Sequence-derived structure complementarity and interaction probability |
| TopoDockQ | N/A | N/A (reported instead: at least 42% fewer false positives, +6.7% precision) [4] | Topological deep learning with persistent Laplacian features |
| PrePPI | N/A (accuracy reported as comparable to high-throughput experiments) [3] | N/A (identifies unexpected PPIs of biological interest) [3] | Bayesian combination of structural and non-structural clues |

Table 3: Essential Research Reagents and Computational Tools for Protein Data Characterization

| Resource Category | Specific Tools/Reagents | Function/Purpose | Application Context |
|---|---|---|---|
| Experimental Databases | UniProt, PDB, BioGRID, IntAct | Provide reference data for sequences, structures, and interactions | Experimental design, validation, and data interpretation |
| Deep Learning Frameworks | AlphaFold-Multimer, DeepSCFold, TopoDockQ | Predict protein complex structures and interaction quality | Computational prediction of protein structures and interactions |
| Sequence Analysis Tools | HHblits, JackHMMER, MMseqs2 | Generate multiple sequence alignments and identify homologs | Feature extraction for sequence-based predictions |
| Structural Analysis | PyMOL, ChimeraX, PDBeFold | Visualize, analyze, and compare protein structures | Structural interpretation and quality assessment |
| Specialized Reagents | Isotopically-labeled amino acids (15N, 13C), cross-linking agents, specific antibodies | Enable specific experimental approaches including NMR, cross-linking studies, and immunoprecipitation | Experimental determination of structures and interactions |

The integration of sequences, structures, and interactions provides a comprehensive framework for protein data characterization that is transforming deep learning research in structural biology and drug discovery. As computational methods continue to advance, particularly through sophisticated deep learning architectures that can leverage multi-modal biological data, we are witnessing unprecedented capabilities in predicting protein functions, interactions, and therapeutic potential. The ongoing development of integrated computational-experimental workflows, coupled with the growing availability of high-quality biological data, promises to accelerate both fundamental biological discoveries and the development of novel therapeutics for human disease.

In the era of data-driven biological science, public databases have become indispensable for progress in structural bioinformatics and deep learning research. The integration of experimentally determined and computationally predicted protein structures provides a foundational resource for understanding biological function and driving therapeutic development. This technical guide provides an in-depth analysis of three essential databases—RCSB PDB, SAbDab, and AlphaFold DB—framed within the context of protein data characterization for machine learning applications. These resources collectively offer researchers unprecedented access to structural information, from empirical measurements to AI-powered predictions, enabling novel approaches to biological inquiry and drug discovery.

Database Core Characteristics & Comparative Analysis

Fundamental Attributes and Applications

The three databases serve complementary roles in the structural biology ecosystem, each with distinct data sources, primary functions, and applications in research and development.

Table 1: Core Database Characteristics and Applications

| Characteristic | RCSB PDB | SAbDab | AlphaFold DB |
|---|---|---|---|
| Primary Data Source | Experimentally determined structures (X-ray, cryo-EM, NMR) [5] [6] | Curated subset of PDB focused on antibodies [7] [8] | AI-based predictions from protein sequences [9] [10] |
| Data Content Type | Empirical measurements with validation reports [6] | Annotated antibody structures, antibody-antigen complexes, affinity data [7] | Predicted 3D protein models with confidence metrics [9] |
| Key Applications | Structure-function studies, drug docking, molecular mechanics | Antibody engineering, therapeutic design, epitope analysis [7] | Function annotation, experimental design, missing structure coverage [9] |
| Update Frequency | Weekly with new PDB depositions [6] | Regular updates (e.g., 9,521 structures as of May 2025) [7] | Major releases with new proteome coverage [9] |
| Licensing | Free access, multiple export formats [11] | CC-BY 4.0 [8] | CC-BY 4.0 [9] |

Quantitative Data Coverage and Statistics

Understanding the scale and scope of each database is crucial for assessing their utility in research projects and machine learning pipeline development.

Table 2: Quantitative Data Coverage Across Databases

| Metric | RCSB PDB | SAbDab | AlphaFold DB |
|---|---|---|---|
| Total Entries | ~200,000 experimental structures [6] | 19,128 entries from 9,757 PDB structures (as of May 2025) [7] | Over 200 million predictions [9] |
| Coverage Scope | All macromolecular types (proteins, DNA, RNA, complexes) [5] | Antibody structures only, including nanobodies (SAbDab-nano) [8] | Broad proteome coverage for model organisms and human [9] |
| Human Proteome | ~105,000 eukaryotic structures (as of mid-2022) [6] | Therapeutic antibodies cataloged in Thera-SAbDab [8] | Complete human proteome available for download [9] |
| Key Organisms | Comprehensive across all kingdoms of life [6] | Various species with antibody structures | 47 key model organisms and pathogens [9] |
| Special Features | Integrates >1 million CSMs from AlphaFold and ModelArchive [6] | Manually curated binding affinity data, CDR annotation [7] | pLDDT confidence scores, custom sequence annotations [9] |

Technical Methodologies and Data Processing

RCSB PDB: Experimental Structure Management

The RCSB PDB serves as the US data center for the Worldwide PDB (wwPDB) and employs rigorous workflows for structure deposition, validation, and annotation [6]. The technical methodology encompasses:

Deposition and Validation Pipeline: Structural biologists submit experimental data and coordinates through a standardized deposition system. The wwPDB then processes these submissions through automated validation pipelines that assess geometric quality, steric clashes, and agreement with experimental data [6]. This process includes both automated checks and expert biocuration to ensure data integrity and consistency across the archive.

Data Integration and Distribution: The RCSB PDB distributes data in multiple formats, including legacy PDB, mmCIF, and PDBML/XML, to accommodate diverse user needs [11]. The resource performs weekly integration of new structures with related functional annotations from external biodata resources, creating a "living data resource" that provides up-to-date information for the entire corpus of 3D biostructure data [6].

SAbDab: Antibody-Specific Curation

SAbDab employs specialized processing pipelines to extract and annotate antibody-specific structural information from the broader PDB archive. The technical approach includes:

Antibody Chain Identification: Each protein sequence from PDB entries is analyzed using AbRSA to determine whether it contains an antibody chain [7]. Sequences are categorized as heavy chain (HC), light chain (LC), heavy_light chain (HLC), or non-antibody. This classification is crucial for proper database organization and querying.

Structure Validation and Pairing: Antibody chains undergo structural validation using TM-align against high-resolution reference domains to exclude misfolded structures lacking typical antibody domains [7]. Heavy and light chains are then paired based on interaction level calculations, with interface residues defined as those having non-hydrogen atoms within 5Å of atoms in the partner chain [7].
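The interface definition used for chain pairing (residues with non-hydrogen atoms within 5 Å of the partner chain) is simple to express in code. The sketch below is a brute-force illustration on plain coordinate tuples. The input layout `(residue_id, element, (x, y, z))` is a hypothetical convenience format, not SAbDab's internal representation, and a production version would use a spatial index rather than an all-pairs loop.

```python
import math


def interface_residues(chain_a, chain_b, cutoff=5.0):
    """Return (residues of A, residues of B) that form the interface.

    Each chain is a list of (residue_id, element, (x, y, z)) atom records.
    Hydrogen atoms are skipped so that only heavy atoms are compared.
    """
    hits_a, hits_b = set(), set()
    for res_a, elem_a, xyz_a in chain_a:
        if elem_a == "H":
            continue
        for res_b, elem_b, xyz_b in chain_b:
            if elem_b == "H":
                continue
            if math.dist(xyz_a, xyz_b) <= cutoff:
                hits_a.add(res_a)
                hits_b.add(res_b)
    return sorted(hits_a), sorted(hits_b)
```

The number of interface residues returned for each candidate heavy/light pair can then serve as a simple proxy for the interaction level used to decide which chains belong together.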

Complementarity Determining Region (CDR) Annotation: The database identifies and annotates CDR residues using AbRSA_PDB, providing essential information for antibody engineering and analysis of binding interfaces [7]. This detailed structural annotation enables researchers to focus on the critical regions responsible for antigen recognition.

AlphaFold DB: AI-Driven Structure Prediction

AlphaFold DB provides access to structures predicted by DeepMind's AlphaFold system, which revolutionized protein structure prediction through a novel neural network architecture [10]. The core methodology includes:

Evoformer Architecture: The AlphaFold network processes inputs through repeated layers of the Evoformer block, which represents the prediction task as a graph inference problem in 3D space [10]. This architecture enables information exchange between multiple sequence alignment (MSA) representations and pair representations, allowing the network to reason about spatial and evolutionary relationships simultaneously.

Structure Module: The Evoformer output feeds into a structure module that explicitly represents 3D structure through rotations and translations for each residue [10]. This module employs an equivariant transformer to enable implicit reasoning about side-chain atoms and uses a loss function that emphasizes orientational correctness. The system implements iterative refinement through recycling, where outputs are recursively fed back into the same modules to gradually improve accuracy [10].

Confidence Estimation: A critical component is the prediction of per-residue confidence estimates (pLDDT) that reliably indicate the local accuracy of the corresponding prediction [10]. This allows researchers to assess which regions of a predicted structure can be trusted for downstream applications.

Integrated Workflow for Deep Learning Research

Multi-Database Integration Strategy

Leveraging these databases in concert provides a powerful framework for deep learning research in structural biology. The integrated workflow enables researchers to maximize the strengths of each resource while mitigating their individual limitations.

Diagram 1: Multi-database integration workflow for deep learning research. This pipeline demonstrates how the three databases can be systematically combined to address complex research questions in structural bioinformatics.

Implementation Protocols

Data Acquisition and Preprocessing: For experimental structures, download data from RCSB PDB using their file download services, which provide multiple formats including mmCIF and PDB [11]. Programmatic access is available through HTTPS URLs (e.g., https://files.wwpdb.org) or rsync capabilities for efficient maintenance of full archive copies [11]. For antibody-specific data, access SAbDab through its web interface or download curated datasets focusing on particular antibody classes or species origins [8]. For predicted structures, retrieve AlphaFold DB entries through the dedicated database, with the option to download entire proteomes or individual proteins [9].
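As a rough illustration of programmatic retrieval, the helpers below build download URLs and fetch a file with the standard library. The URL layouts are assumptions based on the public file servers at the time of writing (the wwPDB "divided" directory layout and the AlphaFold DB per-entry file naming, including the model version number) and should be checked against the current documentation of each resource before use.

```python
import urllib.request


def wwpdb_mmcif_url(pdb_id):
    """URL of a gzipped mmCIF entry; assumes the 'divided' archive layout,
    where the directory name is the middle two characters of the PDB ID."""
    pdb_id = pdb_id.lower()
    return ("https://files.wwpdb.org/pub/pdb/data/structures/divided/mmCIF/"
            f"{pdb_id[1:3]}/{pdb_id}.cif.gz")


def alphafold_model_url(uniprot_accession, version=4, fmt="pdb"):
    """URL of an AlphaFold DB model; the file-name pattern and the default
    version number are assumptions and change between database releases."""
    return (f"https://alphafold.ebi.ac.uk/files/"
            f"AF-{uniprot_accession}-F1-model_v{version}.{fmt}")


def fetch(url, timeout=30):
    """Download a URL and return its raw bytes (no retries or caching)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()
```

For bulk downloads, the rsync services and whole-proteome archives mentioned above are preferable to fetching entries one at a time.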

Quality Filtering and Validation: Implement rigorous quality control measures when integrating data from these resources. For experimental structures, utilize validation reports available from RCSB PDB to filter based on resolution, R-factor, and clash scores [6]. For antibody structures, leverage SAbDab's annotations to ensure proper pairing and domain integrity [7]. For AlphaFold DB predictions, use the provided pLDDT scores to identify high-confidence regions, with values above 90 indicating high accuracy and values below 50 potentially indicating disordered regions [10].
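The pLDDT filter described above can be sketched for PDB-format AlphaFold models, which store the per-residue pLDDT in the B-factor column of each atom record. The parser below reads fixed-width columns from `ATOM` lines and keeps one value per residue (from the Cα atom). The 90 and 50 thresholds come from the text; the intermediate 70 boundary and the band names follow the bands commonly shown by AlphaFold DB and should be treated as an assumption here.

```python
def ca_plddt(pdb_lines):
    """Map (chain_id, residue_number) -> pLDDT using C-alpha ATOM records."""
    scores = {}
    for line in pdb_lines:
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            res_id = (line[21], int(line[22:26]))
            scores[res_id] = float(line[60:66])  # B-factor column holds pLDDT
    return scores


def confidence_band(plddt):
    """Bucket a pLDDT value into a coarse confidence band."""
    if plddt > 90:
        return "very high"
    if plddt >= 70:
        return "confident"
    if plddt >= 50:
        return "low"
    return "very low"  # often corresponds to disordered regions


def high_confidence_fraction(scores, threshold=70.0):
    """Fraction of residues whose pLDDT is at or above the threshold."""
    if not scores:
        return 0.0
    return sum(1 for s in scores.values() if s >= threshold) / len(scores)
```

A dataset-building script might, for example, discard models whose high-confidence fraction falls below a project-specific cutoff, or mask low-confidence residues rather than dropping the whole model.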

Feature Engineering for Machine Learning: Develop meaningful feature representations from the structural data. For sequence-based models, extract evolutionary information from multiple sequence alignments associated with AlphaFold predictions [10]. For structural models, calculate geometric features such as dihedral angles, solvent accessibility, and residue-residue contacts. For antibody-specific applications, leverage SAbDab's CDR annotations to focus on hypervariable regions and interface residues [7].
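Two of the geometric features mentioned above, dihedral angles and residue-residue contacts, can be computed directly from coordinates. The sketch below uses only the standard library. The 8 Å Cα-Cα contact cutoff is a common convention but is an assumption here, and solvent accessibility is omitted because it requires a dedicated surface algorithm.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dihedral(p0, p1, p2, p3):
    """Signed dihedral angle in degrees defined by four points.

    For backbone phi, pass C(i-1), N(i), CA(i), C(i); for psi, pass
    N(i), CA(i), C(i), N(i+1).
    """
    b0, b1, b2 = _sub(p1, p0), _sub(p2, p1), _sub(p3, p2)
    n1, n2 = _cross(b0, b1), _cross(b1, b2)  # normals of the two planes
    x = _dot(n1, n2)
    y = math.sqrt(_dot(b1, b1)) * _dot(b0, n2)
    return math.degrees(math.atan2(y, x))


def contact_map(ca_coords, cutoff=8.0):
    """Binary L x L matrix: 1 where two distinct C-alpha atoms are closer
    than the cutoff (in angstroms), 0 otherwise."""
    n = len(ca_coords)
    return [[1 if i != j and math.dist(ca_coords[i], ca_coords[j]) < cutoff else 0
             for j in range(n)] for i in range(n)]
```

The contact map doubles as an adjacency matrix, so it can be used directly as the edge set of a residue-level graph.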

Research Reagent Solutions

The effective utilization of these databases requires a suite of computational tools and resources that facilitate data access, processing, and analysis.

Table 3: Essential Research Reagents for Database Utilization

| Reagent/Tool | Function | Application Context |
|---|---|---|
| RCSB PDB API [11] | Programmatic access to PDB data and search services | Automated retrieval of structural data for large-scale analyses |
| SAbDab Search Tools [8] | Specialized querying of antibody structures by sequence, CDR, or orientation | Targeted extraction of therapeutic antibody data for engineering studies |
| AlphaFold DB Custom Annotations [9] | Integration of user-provided sequence annotations with predicted structures | Visualizing functional motifs in the context of predicted structures |
| AbRSA [7] | Antibody-specific sequence analysis and typing | Accurate classification of antibody chains in structural data |
| DeepSCFold [2] | Enhanced protein complex structure prediction | Modeling antibody-antigen and other protein-protein interactions |
| ModelCIF Standard [6] | Standardized representation for computed structure models | Consistent processing and integration of predicted structures |

Case Study: Antibody-Antigen Complex Prediction

A practical application integrating all three databases involves predicting antibody-antigen complex structures, a challenging task with significant therapeutic implications. The methodology demonstrates how these resources can address specific research problems:

Data Curation and Template Identification: Initiate the process by querying SAbDab for structures with similar antibody sequences or CDR loop conformations to the target of interest [7]. This provides a set of potential structural templates and information about common folding patterns. Cross-reference these findings with experimental complexes in RCSB PDB to identify relevant binding interfaces and interaction geometries.

Complex Structure Prediction: Implement advanced modeling pipelines such as DeepSCFold, which leverages sequence-derived structure complementarity to improve protein complex modeling [2]. This approach has demonstrated a 24.7% improvement in success rates for antibody-antigen binding interface prediction compared to standard AlphaFold-Multimer [2]. The method uses deep learning to predict protein-protein structural similarity and interaction probability from sequence information alone.

Model Validation and Assessment: Validate predicted complexes against existing experimental structures from RCSB PDB when available. For novel predictions, utilize quality assessment metrics such as interface pLDDT scores from AlphaFold and geometric validation tools available through RCSB PDB [6]. Compare the predicted binding interfaces with known antibody-antigen interactions cataloged in SAbDab to assess biological plausibility.

The synergistic use of RCSB PDB, SAbDab, and AlphaFold DB creates a powerful ecosystem for protein data characterization that directly supports deep learning research in structural biology. Each database brings unique strengths: RCSB PDB provides the empirical foundation of experimentally determined structures, SAbDab offers specialized curation for antibody-specific applications, and AlphaFold DB delivers unprecedented coverage of protein structural space. As deep learning methodologies continue to advance, these databases will play increasingly critical roles in training more accurate models, validating predictions, and generating biological insights. The integration protocols and methodologies outlined in this technical guide provide a framework for researchers to leverage these resources effectively, accelerating progress in both basic science and therapeutic development.

The characterization of protein data represents a central challenge and opportunity in modern computational biology. Proteins, fundamental to virtually all biological processes, inherently possess complex structures—from their linear amino acid sequences to their intricate three-dimensional folds and interaction networks. Traditional machine learning approaches often struggle to capture the rich, relational information embedded within this data. Deep learning architectures, however, offer powerful frameworks for learning directly from these complex representations. This whitepaper provides an in-depth technical guide to four core deep learning architectures—Graph Neural Networks (GNNs), Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformers—framed specifically within the context of protein data characterization for drug development and research. We explore the underlying mechanics, applications, and experimental protocols for each architecture, providing researchers and scientists with the practical toolkit needed to advance protein science.

Graph Neural Networks (GNNs) for Protein Structure and Interaction Networks

Architectural Principles

Graph Neural Networks are specialized neural networks designed to operate on graph-structured data, making them exceptionally well-suited for representing and analyzing proteins and their interactions [12] [13]. A graph ( G ) is formally defined as a tuple ( (V, E) ), where ( V ) is a set of nodes (e.g., atoms or residues in a protein) and ( E ) is a set of edges (e.g., chemical bonds or spatial proximities) [13]. The core operation of most GNNs is message passing, where nodes iteratively update their representations by aggregating information from their neighboring nodes [14]. A generic message passing layer can be described by:

[ \mathbf{h}_u^{(l+1)} = \phi \left( \mathbf{h}_u^{(l)}, \bigoplus_{v \in \mathcal{N}(u)} \psi\left(\mathbf{h}_u^{(l)}, \mathbf{h}_v^{(l)}, \mathbf{e}_{uv}\right) \right) ]

Here, ( \mathbf{h}_u^{(l)} ) is the representation of node ( u ) at layer ( l ), ( \mathcal{N}(u) ) is its set of neighbors, ( \mathbf{e}_{uv} ) is the feature vector of the edge between ( u ) and ( v ) (when edge features are available), ( \psi ) is a message function, ( \bigoplus ) is a permutation-invariant aggregation function (e.g., sum, mean, or max), and ( \phi ) is an update function [14]. This mechanism allows GNNs to capture both the local structure and the global topology of molecular graphs.
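A single message passing step can be written in a few lines once the message, aggregation, and update functions are fixed. The sketch below uses sum aggregation and takes the message and update functions as arguments. The dictionary-based graph layout and the particular functions used in the usage example are illustrative choices, not a reference implementation from any GNN library.

```python
def message_passing_layer(h, neighbors, edge_feats, message_fn, update_fn):
    """Apply one message passing step with sum aggregation.

    h          : dict node -> feature vector (list of floats)
    neighbors  : dict node -> list of neighbouring nodes
    edge_feats : dict (u, v) -> edge feature (may be missing)
    message_fn : psi(h_u, h_v, e_uv) -> message with the same length as h_u
    update_fn  : phi(h_u, aggregated_message) -> new h_u
    """
    new_h = {}
    for u, h_u in h.items():
        aggregated = [0.0] * len(h_u)
        for v in neighbors.get(u, []):
            message = message_fn(h_u, h[v], edge_feats.get((u, v)))
            aggregated = [a + m for a, m in zip(aggregated, message)]
        new_h[u] = update_fn(h_u, aggregated)  # all nodes update from old h
    return new_h


def weighted_neighbor_message(h_u, h_v, e_uv):
    """Example psi: neighbour features scaled by a scalar edge weight."""
    weight = 1.0 if e_uv is None else e_uv
    return [weight * x for x in h_v]


def additive_update(h_u, aggregated):
    """Example phi: add the aggregated message to the node's own features."""
    return [a + b for a, b in zip(h_u, aggregated)]
```

Stacking several such layers lets information propagate beyond immediate neighbours: after k layers, each node representation depends on its k-hop neighbourhood.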

GNN Variants in Protein Research

Several GNN variants have been developed, each with distinct advantages for protein data:

- Graph Convolutional Networks (GCNs): Apply convolutional operations to graph data by aggregating feature information from a node's local neighborhood using spectral graph theory. The layer-wise propagation rule is often expressed as ( \mathbf{H}^{(l+1)} = \sigma(\hat{\mathbf{D}}^{-\frac{1}{2}} \hat{\mathbf{A}} \hat{\mathbf{D}}^{-\frac{1}{2}} \mathbf{H}^{(l)} \mathbf{\Theta}^{(l)}) ), where ( \hat{\mathbf{A}} ) is the adjacency matrix with self-loops, ( \hat{\mathbf{D}} ) is the corresponding degree matrix, and ( \mathbf{\Theta} ) is a layer-specific trainable weight matrix [14].

- Graph Attention Networks (GATs): Incorporate an attention mechanism that assigns different weights to neighboring nodes, allowing the model to focus on more important interactions [1]. The attention coefficients ( \alpha_{uv} ) between nodes ( u ) and ( v ) are computed as: ( \alpha_{uv} = \frac{\exp(\text{LeakyReLU}(\mathbf{a}^T[\mathbf{W}\mathbf{h}_u \Vert \mathbf{W}\mathbf{h}_v]))}{\sum_{k \in \mathcal{N}(u)} \exp(\text{LeakyReLU}(\mathbf{a}^T[\mathbf{W}\mathbf{h}_u \Vert \mathbf{W}\mathbf{h}_k]))} ) [14].

- Graph Autoencoders (GAEs): Employ an encoder-decoder structure to learn compact latent representations of graphs, useful for interaction prediction and graph generation tasks [1].
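To illustrate the GCN propagation rule listed above, the following sketch computes the symmetrically normalized adjacency matrix with self-loops and applies it to a feature matrix. It uses plain Python lists instead of a tensor library, and it leaves out the trainable weight matrix and the nonlinearity; the helper names `gcn_normalized_adjacency` and `gcn_propagate` are hypothetical.

```python
import math


def gcn_normalized_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a dense, non-negative adjacency matrix."""
    n = len(adj)
    # Add self-loops so every node also keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # degrees of the self-looped graph
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_propagate(adj, features):
    """Multiply the normalized adjacency by the node feature matrix."""
    norm = gcn_normalized_adjacency(adj)
    n, d = len(features), len(features[0])
    return [[sum(norm[i][j] * features[j][k] for j in range(n)) for k in range(d)]
            for i in range(n)]


# Path graph 0 - 1 - 2 with one scalar feature per node.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
norm = gcn_normalized_adjacency(adj)
smoothed = gcn_propagate(adj, [[1.0], [0.0], [1.0]])
print(norm)
print(smoothed)
```

The normalization keeps feature magnitudes comparable across nodes of different degree, which matters in PPI networks where hub proteins have many more neighbors than most nodes.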

Application to Protein-Protein Interactions

GNNs have demonstrated remarkable success in predicting Protein-Protein Interactions (PPIs) [1]. In this context, proteins are represented as nodes in a larger interaction network, with edges indicating known or potential interactions. GNNs can operate on these networks to identify novel interactions or characterize the function of unannotated proteins. For instance, the RGCNPPIS system integrates GCN and GraphSAGE to simultaneously extract macro-scale topological patterns and micro-scale structural motifs from PPI networks [1]. Similarly, the AG-GATCN framework combines Graph Attention Networks and Temporal Convolutional Networks to provide robust predictions against noise in PPI analysis [1].

Table: Key GNN Variants for Protein Data Characterization

| Variant | Core Mechanism | Protein-Specific Application | Key Advantage |

|---|---|---|---|

| Graph Convolutional Network (GCN) | Spectral graph convolution | Protein function prediction [1] | Computationally efficient for large graphs [14] |

| Graph Attention Network (GAT) | Self-attention on neighbors | Protein-protein interaction prediction [1] | Weights importance of different interactions [14] |

| Graph Autoencoder (GAE) | Encoder-decoder for graph embedding | Interaction network reconstruction [1] | Learns compressed representations for downstream tasks [1] |

| GraphSAGE | Neighborhood sampling & aggregation | Large-scale PPI network analysis [1] | Generalizes to unseen nodes & scalable [1] |

Experimental Protocol for PPI Prediction Using GNN

Objective: To predict novel protein-protein interactions from a partially known interaction network.

Dataset Preparation:

- Source: Download experimentally verified PPIs from databases such as STRING, BioGRID, or DIP [1].

- Graph Construction: Represent the PPI network as a graph ( G = (V, E) ), where ( V ) is the set of proteins and ( E ) is the set of known interactions.

- Node Features: Represent each protein node by a feature vector. This can include:

- Amino acid composition or physicochemical properties derived from its sequence.

- Gene Ontology (GO) term annotations [1].

- Pre-trained protein language model embeddings (e.g., from ESM or ProtTrans).

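- Split Data: Partition the edges into training, validation, and test sets (e.g., 80/10/10). Held-out edges should be removed from the graph used for message passing, and the split should be chosen so that the remaining training graph stays connected.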
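One possible way to implement the edge split is sketched below. It first reserves a spanning forest of the network for the training set, so that removing validation and test edges cannot disconnect proteins that were connected in the full graph. The function name `split_edges`, the union-find approach, and the default fractions are assumptions made for illustration, not a procedure taken from the cited work.

```python
import random


def split_edges(nodes, edges, val_frac=0.1, test_frac=0.1, seed=0):
    """Split edges into train/val/test while keeping a spanning forest in train."""
    rng = random.Random(seed)
    edges = list(edges)
    rng.shuffle(edges)
    parent = {n: n for n in nodes}

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree, rest = [], []
    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            tree.append((u, v))   # needed for connectivity, always kept in train
        else:
            rest.append((u, v))   # redundant edge, may be held out
    n_val = min(int(val_frac * len(edges)), len(rest))
    n_test = min(int(test_frac * len(edges)), len(rest) - n_val)
    val = rest[:n_val]
    test = rest[n_val:n_val + n_test]
    train = tree + rest[n_val + n_test:]
    return train, val, test


# Toy example: a complete graph on six proteins (15 edges).
proteins = list(range(6))
all_edges = [(i, j) for i in proteins for j in proteins if i < j]
train, val, test = split_edges(proteins, all_edges)
print(len(train), len(val), len(test))
```

On sparse networks the number of redundant edges can be smaller than the requested held-out fraction, in which case this sketch simply holds out fewer edges.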

Model Implementation (using PyTorch Geometric):

Training Loop:

- Loss Function: Use Binary Cross-Entropy loss for the binary classification task (interaction vs. non-interaction).

- Negative Sampling: Generate negative examples (non-interacting protein pairs) for training, equal in number to positive edges.

- Optimization: Train using the Adam optimizer with a learning rate of 0.01 and early stopping on the validation loss.
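The negative sampling step can be sketched as follows. The code draws random protein pairs that are absent from the set of known interactions; `sample_negative_edges` is a hypothetical helper, and the sketch assumes undirected edges without self-loops whose endpoints all appear in `nodes`.

```python
import random


def sample_negative_edges(nodes, positive_edges, num_samples, seed=0):
    """Uniformly sample unordered pairs that are not known positive edges."""
    rng = random.Random(seed)
    positives = {frozenset(e) for e in positive_edges}
    max_negatives = len(nodes) * (len(nodes) - 1) // 2 - len(positives)
    if num_samples > max_negatives:
        raise ValueError("not enough non-interacting pairs to sample from")
    negatives = set()
    while len(negatives) < num_samples:
        u, v = rng.sample(nodes, 2)  # two distinct proteins
        pair = frozenset((u, v))
        if pair not in positives:
            negatives.add(pair)
    # Sort for a reproducible ordering of the result.
    return sorted(tuple(sorted(p)) for p in negatives)


proteins = ["P1", "P2", "P3", "P4", "P5"]
known = [("P1", "P2"), ("P2", "P3"), ("P3", "P4")]
negatives = sample_negative_edges(proteins, known, num_samples=len(known))
print(negatives)
```

Pairs sampled this way are only presumed negatives: some may be true interactions that have not yet been observed experimentally, which adds label noise to training and evaluation.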

Evaluation:

- Assess model performance on the held-out test set using standard metrics: Area Under the Receiver Operating Characteristic Curve (AUC-ROC), Area Under the Precision-Recall Curve (AUPRC), and F1-score.
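As a dependency-free illustration of two of these metrics, the sketch below computes AUC-ROC from its pairwise-ranking definition and the F1-score at a fixed decision threshold. In practice a library implementation would normally be used; the function names, the toy scores, and the 0.5 threshold are assumptions for the example.

```python
def roc_auc(labels, scores):
    """AUC-ROC as the probability that a random positive outscores a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    # Ties between a positive and a negative count as half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def f1_score(labels, scores, threshold=0.5):
    """F1 of the binary predictions obtained by thresholding the scores."""
    preds = [int(s >= threshold) for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


# Toy predictions for six candidate protein pairs (1 = interacting).
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
auc = roc_auc(labels, scores)
f1 = f1_score(labels, scores)
print(auc, f1)
```

The quadratic pairwise loop is fine for small examples but would be replaced by a sort-based computation for large test sets.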

Convolutional Neural Networks (CNNs) for Protein Sequence and Structure Analysis

Architectural Fundamentals

Convolutional Neural Networks are a class of deep neural networks most commonly applied to visual imagery, but they have also proven effective for extracting patterns from protein sequences and structural data [15] [16]. The core building blocks of a CNN are:

- Convolutional Layers: These layers apply a set of learnable filters (or kernels) to the input data. Each filter slides (convolves) across the input, computing dot products to produce a feature map that highlights the presence of specific features in the input [16]. For a 1D protein sequence, the convolution operation can be formalized as ( (F * S)[i] = \sum_{j=-k}^{k} F[j] \cdot S[i + j] ), where ( S ) is the sequence representation, ( F ) is the filter of width ( 2k+1 ), and ( * ) denotes the convolution operation. Strictly speaking this is a cross-correlation, since the filter is not flipped, which is the form deep learning frameworks conventionally implement.

- Pooling Layers: Pooling (e.g., max pooling or average pooling) performs non-linear down-sampling, reducing the spatial dimensions of the feature maps, providing translation invariance, and controlling overfitting [15] [16].

- Fully Connected Layers: After several convolutional and pooling layers, the high-level reasoning is done via fully connected layers, which compute class scores or final predictions [15].

A key advantage of CNNs is parameter sharing: a filter used in one part of the input can also detect the same feature in another part, making the model efficient and reducing overfitting [16].
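The 1D convolution formula and the idea of parameter sharing can be made concrete with a short sketch. The same kernel is applied at every position of a single-channel signal, with zero padding at both ends; the function name `conv1d_same` and the toy signal are illustrative assumptions.

```python
def conv1d_same(signal, kernel):
    """Compute sum_{j=-k..k} F[j] * S[i + j] for every position i, zero-padded."""
    if len(kernel) % 2 == 0:
        raise ValueError("kernel width must be odd (2k + 1)")
    k = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        total = 0.0
        for j in range(-k, k + 1):
            if 0 <= i + j < len(signal):  # positions outside the sequence count as zero
                total += kernel[j + k] * signal[i + j]
        out.append(total)
    return out


# A single-channel signal, e.g. 1 where a residue is hydrophobic and 0 otherwise.
signal = [0, 1, 1, 1, 0, 0]
kernel = [1, 1, 1]  # responds most strongly to three consecutive 1s
feature_map = conv1d_same(signal, kernel)
print(feature_map)
```

The feature map peaks where the window is centered on the run of three 1s, and the same three weights detect that pattern wherever it occurs in the sequence. A real layer would learn many such kernels over multi-channel inputs.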

Application to Protein Sequence and Structural Data

In protein informatics, CNNs are predominantly used in two modalities:

1D-CNNs for Protein Sequences: Protein sequences are treated as 1D strings of amino acids. These are first converted into a numerical matrix via embeddings (e.g., one-hot encoding or more sophisticated learned embeddings). 1D convolutional filters then scan along the sequence to detect conserved motifs, domains, or functional signatures [16]. This approach is fundamental for tasks like secondary structure prediction, residue-level contact prediction, and protein family classification.

2D/3D-CNNs for Protein Structures and Contact Maps: Protein 3D structures can be represented as 3D voxel grids (density maps) or 2D distance/contact maps. 2D and 3D CNNs can process these representations to learn spatial hierarchies of structural features, which is crucial for function prediction, binding site identification, and protein design [16].

Table: CNN Configurations for Protein Data Types

| Data Type | CNN Dimension | Input Representation | Example Application |

|---|---|---|---|

| Amino Acid Sequence | 1D | Sequence of residue indices or embeddings | Secondary structure prediction, signal peptide detection |

| Evolutionary Profile | 1D | Position-Specific Scoring Matrix (PSSM) | Protein family classification, solvent accessibility |

| Distance/Contact Map | 2D | 2D matrix of inter-residue distances | Tertiary structure assessment, protein folding |

| Molecular Surface | 3D | Voxelized 3D grid of physicochemical properties | Ligand binding site prediction, protein-protein docking |

Experimental Protocol for Sequence-Based Protein Classification

Objective: Classify protein sequences into functional families using a 1D-CNN.

Dataset Preparation:

- Sequence Retrieval: Obtain protein sequences and their functional labels from databases like UniProt or Pfam.

- Sequence Encoding:

- One-Hot Encoding: Represent each amino acid in a sequence as a 20-dimensional binary vector (plus one for a padding token if needed).

- Alternative Embeddings: Use pre-trained continuous representations like those from language models (ESM, ProtBert) for richer input features.

- Sequence Padding/Truncation: Standardize sequence lengths to a fixed value ( L ) by padding shorter sequences or truncating longer ones.

- Data Split: Split the dataset into training, validation, and test sets, ensuring no data leakage between splits.
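The encoding and padding steps above can be sketched in a few lines. The residue ordering in `AMINO_ACIDS`, the extra padding channel, and the choice to map unknown residues to all-zero rows are assumptions of this example rather than a fixed standard.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}
PAD_INDEX = len(AMINO_ACIDS)  # 21st channel marks padded positions


def one_hot_encode(sequence, max_len):
    """Return a max_len x 21 matrix for one protein sequence."""
    width = len(AMINO_ACIDS) + 1
    rows = []
    for aa in sequence[:max_len]:  # truncate sequences longer than max_len
        row = [0] * width
        idx = AA_INDEX.get(aa.upper())
        if idx is not None:        # unknown residues (e.g. 'X') stay all-zero
            row[idx] = 1
        rows.append(row)
    while len(rows) < max_len:     # pad sequences shorter than max_len
        pad = [0] * width
        pad[PAD_INDEX] = 1
        rows.append(pad)
    return rows


encoded = one_hot_encode("MKV", max_len=5)
print(len(encoded), len(encoded[0]))
```

Truncation discards C-terminal residues in this sketch; for long multi-domain proteins, windowing the sequence may be preferable to cutting it.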

Model Implementation (using PyTorch):

Training and Evaluation:

- Loss Function: Use Cross-Entropy loss for multi-class classification.

- Optimizer: Use Adam optimizer with a learning rate of 0.001.

- Training: Train for a fixed number of epochs (e.g., 50) with mini-batches, monitoring validation accuracy to avoid overfitting.

- Evaluation: Report final performance on the test set using accuracy, precision, recall, and F1-score.

Recurrent Neural Networks (RNNs) for Protein Sequence Modeling

Architectural Principles

Recurrent Neural Networks are a family of neural networks designed for sequential data, making them a natural fit for protein sequences [17] [18]. Unlike feedforward networks, RNNs possess an internal state or "memory" that captures information about previous elements in the sequence. The core component is the recurrent unit, which processes inputs step-by-step while maintaining a hidden state ( h_t ) that is updated at each time step ( t ).

The fundamental equations for a simple RNN (often called a "vanilla RNN") are:

[ h_t = \tanh(W_{xh} x_t + W_{hh} h_{t-1} + b_h) ]

[ y_t = W_{hy} h_t + b_y ]

where ( x_t ) is the input at time ( t ), ( h_t ) is the hidden state, ( y_t ) is the output, the ( W ) matrices are learnable weights, and the ( b ) terms are biases [17].
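The hidden-state recurrence can be traced by hand with a small sketch. It implements only the update of ( h_t ) and omits the output projection; the helper names and the toy weights are illustrative assumptions.

```python
import math


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN update: tanh(W_xh x_t + W_hh h_prev + b_h)."""
    from_input = matvec(W_xh, x_t)
    from_state = matvec(W_hh, h_prev)
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_state, b_h)]


def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run the recurrence over a sequence, starting from a zero hidden state."""
    h = [0.0] * len(b_h)
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Two time steps, input size 2, hidden size 2.
W_xh = [[1.0, 0.0], [0.0, 1.0]]
W_hh = [[0.5, 0.0], [0.0, 0.5]]
b_h = [0.0, 0.0]
states = rnn_forward([[1.0, 0.0], [0.0, 1.0]], W_xh, W_hh, b_h)
print(states)
```

The second hidden state still carries a damped trace of the first input through the recurrent weights, which is the "memory" the text refers to.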

Advanced RNN Architectures

Simple RNNs suffer from the vanishing/exploding gradient problem, which makes it difficult to learn long-range dependencies in sequences like long protein chains [18]. This limitation led to the development of more sophisticated gated architectures:

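- Long Short-Term Memory (LSTM): Introduces a gating mechanism with a cell state ( C_t ) that runs through the entire sequence, acting as a conveyor belt for information. It uses three gates to regulate information flow:

- Forget gate: decides which parts of the previous cell state to discard.

- Input gate: decides which new information to write into the cell state.

- Output gate: decides which parts of the cell state are exposed as the hidden state ( h_t ).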

- Gated Recurrent Unit (GRU): A simplified alternative to LSTM that combines the forget and input gates into a single "update gate" and has fewer parameters, often making it faster to train while performing comparably on many tasks [18].

RNNs can be configured in different ways for various tasks: Many-to-One (e.g., sequence classification), One-to-Many (e.g., sequence generation), and Many-to-Many (e.g., sequence labeling) [17].

Application to Protein Sequence Analysis

In protein research, RNNs and their variants are primarily used for:

- Sequence Labeling: Predicting a label for each residue in a protein sequence, such as secondary structure (alpha-helix, beta-sheet, coil), solvent accessibility, or disorder.

- Protein Generation: Designing novel protein sequences with desired properties by modeling the probability distribution over amino acid sequences.

- Evolutionary Modeling: Analyzing and modeling the dependencies between different positions in a multiple sequence alignment.

Table: RNN Architectures for Protein Sequence Tasks

| Architecture | Gating Mechanism | Protein Task Example | Advantage for Protein Data |

|---|---|---|---|

| Simple RNN | None (tanh activation) | Baseline residue property prediction | Simple, low computational cost [17] |

| Long Short-Term Memory (LSTM) | Input, Forget, Output Gates | Long-range contact prediction [18] | Captures long-range dependencies in structure [18] |

| Gated Recurrent Unit (GRU) | Update and Reset Gates | Secondary structure prediction | Efficient; good for shorter sequences [18] |

| Bidirectional RNN (BiRNN) | Any (e.g., BiLSTM) | Residue-level function annotation | Uses context from both N and C-termini [18] |

Experimental Protocol for Per-Residue Secondary Structure Prediction

Objective: Predict the secondary structure state (Helix, Sheet, Coil) for each amino acid in a protein sequence using a Bidirectional LSTM.

Dataset Preparation:

- Data Source: Use proteins from the Protein Data Bank (PDB) with DSSP-assigned secondary structures, or a standard benchmark dataset derived from them [1].

- Input Features: For each residue, create a feature vector containing:

- One-hot encoded amino acid type.

- PSSM (Position-Specific Scoring Matrix) profiles from PSI-BLAST.

- Other relevant features like predicted solvent accessibility or backbone angles.

- Label Encoding: Convert the 8-state DSSP classification into the standard 3-state (H, E, C).

- Sequence Padding: Use padding to handle variable-length sequences and masking to ignore padding during loss calculation.
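The label reduction and padding steps can be sketched as below. The 8-to-3 mapping shown (H, G, I to helix; E, B to strand; everything else to coil) is one widely used convention, and others exist; the names `DSSP8_TO_3`, `encode_labels`, and the padding id are assumptions for this example. The value -100 is chosen because it matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
# One common reduction of DSSP's eight states to three; alternatives exist.
DSSP8_TO_3 = {
    "H": "H", "G": "H", "I": "H",   # helices
    "E": "E", "B": "E",             # strands and bridges
    "T": "C", "S": "C", "-": "C",   # turns, bends, and other residues
}
LABEL_TO_ID = {"H": 0, "E": 1, "C": 2}
PAD_ID = -100  # label id for padded positions (assumed convention)


def encode_labels(dssp_string, max_len):
    """Map a DSSP string to 3-state ids, padded to max_len, plus a validity mask."""
    ids = [LABEL_TO_ID[DSSP8_TO_3.get(s, "C")] for s in dssp_string[:max_len]]
    mask = [1] * len(ids) + [0] * (max_len - len(ids))  # 1 = real residue
    ids = ids + [PAD_ID] * (max_len - len(ids))
    return ids, mask


label_ids, mask = encode_labels("HHGE-T", max_len=8)
print(label_ids)
print(mask)
```

Any symbol not in the mapping, including the blank that DSSP output uses for unassigned residues, is treated as coil here.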

Model Implementation (using PyTorch):

Training and Evaluation:

- Loss Function: Use Cross-Entropy loss, ignoring the padded positions.

- Optimizer: Use Adam optimizer with a learning rate of 0.001.

- Training: Monitor the Q3 accuracy (3-state accuracy) on the validation set.

- Evaluation: Report per-residue accuracy (Q3) and segment-level metrics (SOV) on the test set.
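Q3 accuracy with padding ignored can be computed as in the following sketch; the function name `q3_accuracy` and the toy batch are illustrative. The segment overlap score (SOV) is more involved and is not implemented here.

```python
def q3_accuracy(pred_ids, true_ids, mask):
    """Fraction of real (non-padded) residues with a correct 3-state prediction."""
    correct = 0
    total = 0
    for preds, trues, mask_row in zip(pred_ids, true_ids, mask):
        for p, t, m in zip(preds, trues, mask_row):
            if m:  # skip padded positions
                total += 1
                correct += int(p == t)
    if total == 0:
        raise ValueError("mask selects no residues")
    return correct / total


# Two sequences padded to length 4 (0 = helix, 1 = strand, 2 = coil).
pred_ids = [[0, 0, 1, 2], [2, 2, 0, 0]]
true_ids = [[0, 1, 1, 2], [2, 2, 2, 2]]
mask = [[1, 1, 1, 1], [1, 1, 0, 0]]
q3 = q3_accuracy(pred_ids, true_ids, mask)
print(q3)
```

Pooling residues over the whole batch, as done here, weights long proteins more heavily than averaging per-protein accuracies would; which variant is reported should be stated alongside the result.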

Transformers for Protein Language Modeling and Function Prediction

Architectural Fundamentals

The Transformer architecture, introduced in the "Attention Is All You Need" paper, has become the dominant paradigm for sequence processing tasks, largely displacing RNNs in many natural language processing applications [19]. Its core innovation is the self-attention mechanism, which allows the model to weigh the importance of all elements in a sequence when processing each element. For protein sequences, this is particularly valuable because self-attention can directly relate residues that are distant in the primary sequence but spatially close in the 3D structure.

The key components of a Transformer are:

- Self-Attention: Computes a weighted sum of values, where the weights (attention scores) are based on the compatibility between a query and a set of keys. For a sequence of embeddings ( X ), the output is computed as ( \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V ), where ( Q = XW^Q ), ( K = XW^K ), and ( V = XW^V ) are the Query, Key, and Value matrices [19].

- Multi-Head Attention: Runs multiple self-attention mechanisms in parallel, allowing the model to jointly attend to information from different representation subspaces [19].

- Positional Encoding: Since Transformers lack inherent recurrence or convolution, they require explicit positional encodings (sinusoidal or learned) to incorporate the order of the sequence [19].

- Encoder-Decoder Architecture: The original Transformer uses an encoder to map an input sequence to a representation and a decoder to generate an output sequence autoregressively. For protein tasks, encoder-only models (like those based on BERT) are common for understanding, and decoder-only models (like GPT) are used for generation [19].
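The self-attention formula from the list above can be written out for a single head in plain Python. The sketch uses nested lists instead of tensors, and identity projection matrices in the example, purely for readability; all function names are hypothetical, and no masking, multi-head splitting, or positional encoding is included.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over the rows of X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in K] for q_row in Q]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, V), weights


# Three residue embeddings of dimension 2; identity projections for clarity.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(X, I2, I2, I2)
print(weights)
```

Every residue attends to every other residue in one step, so the cost grows quadratically with sequence length, which is a practical consideration for very long proteins.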

Application to Protein Science

Transformers have been spectacularly successful in protein bioinformatics, primarily through protein language models (pLMs):

- Pre-training and Fine-tuning: Large pLMs like ESM (Evolutionary Scale Modeling) and ProtTrans are pre-trained on millions of protein sequences from UniRef using masked language modeling objectives [19] [1]. The learned representations capture evolutionary, structural, and functional information. These pre-trained models can then be fine-tuned on specific downstream tasks with much less data, such as:

- Remote Homology Detection: Identifying evolutionarily related proteins.

- Function Prediction: Annotating proteins with Gene Ontology terms.

- Structure Prediction: Providing residue-level representations from which residue-residue contacts and 3D structure can be predicted (e.g., ESMFold builds on ESM-2 embeddings).

- Structure-Based Transformers: Recent models like AlphaFold2 and its successors incorporate Transformer-like attention mechanisms over multiple sequence alignments and structural representations to achieve unprecedented accuracy in protein structure prediction [19].

Experimental Protocol for Fine-Tuning a Protein Language Model

Objective: Fine-tune a pre-trained protein Transformer (e.g., ESM-2) for a specific protein function prediction task.

Dataset Preparation:

- Task Definition: Choose a specific function prediction task, e.g., predicting enzyme commission numbers.

- Data Collection: Gather a labeled dataset of protein sequences and their corresponding functional labels.

- Sequence Tokenization: Use the tokenizer associated with the pre-trained model (e.g., ESM-2's tokenizer) to convert amino acid sequences into the expected input format.

- Data Split: Create training, validation, and test splits, ensuring no homology bias (e.g., using sequence identity clustering).

Model Implementation (using Hugging Face Transformers and PyTorch):

Training and Evaluation:

- Strategy: Use a small learning rate (e.g., 1e-5 to 5e-5) for fine-tuning to avoid catastrophic forgetting of the pre-trained knowledge.

- Optimizer: AdamW optimizer with linear warmup and decay.

- Training: Monitor performance on the validation set. Early stopping is recommended.

- Evaluation: Report accuracy, precision, recall, and F1-score on the held-out test set. For multi-label tasks, use mean average precision (mAP).
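The "linear warmup and decay" schedule mentioned above can be expressed as a small function of the training step. This is a sketch of the general shape only; the function name and the example step counts are assumptions. Hugging Face Transformers provides a comparable scheduler, `get_linear_schedule_with_warmup`, which would normally be used in an actual fine-tuning run.

```python
def linear_warmup_decay(step, total_steps, warmup_steps, peak_lr):
    """Learning rate that rises linearly to peak_lr, then decays linearly to zero."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Example: 1000 optimizer steps, 100 of them warmup, peak learning rate 2e-5.
schedule = [linear_warmup_decay(s, 1000, 100, 2e-5) for s in range(0, 1001, 50)]
print(schedule[:4])
```

Starting from a very small learning rate limits large early updates to the pre-trained weights, which complements the small peak learning rate recommended above.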

Successful application of deep learning to protein characterization requires both data and software resources. The table below catalogues essential "research reagents" for computational experiments in this domain.

Table: Essential Research Reagents for Protein Deep Learning

| Resource Name | Type | Primary Function | Relevance to Deep Learning |

|---|---|---|---|

| STRING | Database | Known and predicted PPIs [1] | Ground truth for training and evaluating GNNs for interaction prediction [1] |

| Protein Data Bank (PDB) | Database | Experimentally determined 3D structures [1] | Source of structural data for training structure prediction models and generating 3D/2D representations [1] |

| UniProt | Database | Comprehensive protein sequence & functional annotation | Primary source of sequences and labels for training sequence-based models (CNNs, RNNs, Transformers) |

| ESM / ProtTrans | Pre-trained Model | Protein Language Models (Transformers) [1] | Provides powerful contextualized residue embeddings for transfer learning, used as input features for various downstream tasks [1] |

| Pfam | Database | Protein family and domain annotations | Used for defining classification tasks for CNNs/Transformers and for functional analysis |

| PyTorch Geometric | Software Library | Graph Neural Network Implementation [14] | Facilitates the implementation and training of GNNs on protein graphs and PPI networks [14] |

| Hugging Face Transformers | Software Library | Transformer Model Implementation | Provides easy access to pre-trained Transformers (like ESM) for fine-tuning on protein tasks |

| DSSP | Algorithm | Secondary Structure Assignment | Generates ground truth labels from 3D structures for training RNNs/Transformers on secondary structure prediction |

The characterization of protein data through deep learning has moved from a niche application to a central paradigm in computational biology and drug discovery. Each of the four architectures discussed (GNNs, CNNs, RNNs, and Transformers) offers distinct strengths for different protein data modalities. GNNs excel at modeling relational information in structures and interaction networks. CNNs provide powerful feature extraction from sequences and from grid-like structural representations such as contact maps and voxel grids. RNNs model the sequential dependencies in amino acid chains. Transformers, through pre-training and self-attention, capture complex, long-range dependencies and have become the foundation for general-purpose protein language models. The future of protein data characterization likely lies in integrating these architectures, for example by combining the geometric reasoning of GNNs with the contextual power of Transformers, to create models that more fully capture the relationship between protein sequence, structure, function, and interaction. Such integration has the potential to accelerate discovery in basic biological research and the development of novel therapeutics.

The Centrality of Protein-Protein Interactions (PPIs) in Biological Function

Protein-Protein Interactions (PPIs) are fundamental physical contacts between proteins that serve as the primary regulators of cellular function, influencing a vast array of biological processes including signal transduction, metabolic regulation, cell cycle progression, and transcriptional control [1] [20]. These interactions form complex, large-scale networks that define the functional state of a cell, and their disruption is frequently linked to disease pathogenesis [20] [21]. The comprehensive characterization of PPIs is therefore critical for elucidating the molecular mechanisms of life and for identifying potential therapeutic targets.

In the context of modern deep learning research, PPI data presents both a unique opportunity and a significant challenge. Protein interaction data is complex and high-dimensional, which makes it well suited to analysis with advanced computational models. This section provides an in-depth technical guide to the central role of PPIs in biological function, framing the discussion within the scope of protein data characterization for deep learning. It details experimental and computational methodologies, data resources, and emerging analytical frameworks that are shaping this rapidly evolving field.

Biological Significance of PPI Networks

Functional Roles and Interaction Types

PPIs are not monolithic; they can be categorized based on their nature, temporal characteristics, and biological functions. Understanding these categories is essential for accurate data annotation and model training in machine learning applications.

Table 1: Types of Protein-Protein Interactions

| Categorization | Type | Functional Characteristics |

|---|---|---|

| Stability | Stable Interactions | Form long-lasting complexes (e.g., ribosomes) [1] [22] |

| Stability | Transient Interactions | Temporary binding for signaling and regulation [1] [22] |

| Interaction Nature | Direct (Physical) | Direct physical contact between proteins [20] |

| Interaction Nature | Indirect (Functional) | Proteins are part of the same pathway or complex without direct contact [20] |

| Composition | Homodimeric | Interactions between identical proteins [1] |

| Composition | Heterodimeric | Interactions between different proteins [1] |

At a systems level, PPIs form large-scale networks that exhibit distinct topological properties, often described as "scale-free," meaning a majority of proteins have few connections, while a small number of highly connected "hub" proteins play critical roles in network integrity [20]. The analysis of these networks relies on specific metrics to identify functionally important elements.

Table 2: Key Topological Properties of PPI Networks

| Term | Definition | Biological Interpretation |

|---|---|---|

| Degree (k) | The number of direct interactions a node (protein) has [20]. | Proteins with high degree (hubs) are often essential for cellular function. |

| Clustering Coefficient (C) | Measures the tendency of a node's neighbors to connect to each other [20]. | Identifies tightly knit functional modules or protein complexes. |

| Betweenness Centrality | Measures how often a node appears on the shortest path between other nodes [20] [22]. | Identifies proteins that act as bridges between different network modules. |

| Shortest Path Length | The minimum number of steps required to connect two nodes [20]. | Indicates the efficiency of communication or signaling between two proteins. |
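Three of the metrics in Table 2 can be computed directly from an adjacency-set representation of a PPI network, as sketched below. The toy network, the dictionary-of-sets layout (assumed symmetric), and the function names are illustrative; betweenness centrality is omitted because it requires all-pairs shortest paths and is usually taken from a graph library.

```python
from collections import deque


def degree(graph, node):
    """Number of direct interaction partners of a protein."""
    return len(graph[node])


def clustering_coefficient(graph, node):
    """Fraction of a node's neighbor pairs that are themselves connected."""
    nbrs = list(graph[node])
    k = len(nbrs)
    if k < 2:
        return 0.0
    links = sum(1 for i in range(k) for j in range(i + 1, k)
                if nbrs[j] in graph[nbrs[i]])
    return 2.0 * links / (k * (k - 1))


def shortest_path_length(graph, source, target):
    """Breadth-first search distance in edges; None if target is unreachable."""
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for nb in graph[node]:
            if nb not in seen:
                seen.add(nb)
                queue.append((nb, dist + 1))
    return None


# Toy undirected network: A is a small hub; A, B, and C form a triangle.
ppi = {
    "A": {"B", "C", "D"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A", "E"},
    "E": {"D"},
}
print(degree(ppi, "A"), clustering_coefficient(ppi, "A"),
      shortest_path_length(ppi, "B", "E"))
```

Values like these are sometimes appended to node feature vectors so that a model sees each protein's topological role alongside its sequence-derived features.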

Experimental Methods for PPI Detection

High-quality, experimentally-derived data is the foundation for training robust deep learning models. Several well-established experimental techniques are used to identify and validate PPIs, each with distinct strengths and limitations.

Key Experimental Protocols

1. Yeast Two-Hybrid (Y2H) System

- Principle: A genetic method conducted in living yeast cells. The "bait" protein is fused to a DNA-binding domain, and the "prey" protein is fused to an activation domain. If the bait and prey interact, they reconstitute a functional transcription factor that drives the expression of a reporter gene [20] [22].

- Workflow:

- Clone genes of interest into bait and prey vectors.

- Co-transform vectors into a suitable yeast strain.

- Plate transformed yeast on selective media lacking specific nutrients.

- Assess reporter gene activation (e.g., through growth or colorimetric assays).

- Data Output: Binary interaction data for pair-wise testing.

- Considerations for DL: Can generate a high number of false positives; results require validation [22].

2. Affinity Purification-Mass Spectrometry (AP-MS)

- Principle: A tagged "bait" protein is expressed in cells and used to purify its interacting partners ("prey") from a complex mixture. The co-purified proteins are then identified using mass spectrometry [22].

- Workflow:

- Introduce a tag (e.g., FLAG, HA) to the bait protein.

- Lyse cells and incubate the lysate with an antibody or resin specific to the tag.

- Wash away non-specifically bound proteins.

- Elute the protein complex and digest it with trypsin.

- Analyze the resulting peptides by LC-MS/MS to identify the prey proteins.

- Data Output: Identifies components of protein complexes, providing information on composition and stoichiometry [22].

- Considerations for DL: Identifies both stable and transient interactions, but does not distinguish between direct and indirect binding.

3. Förster Resonance Energy Transfer (FRET)

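- Principle: A technique to measure very close proximity (1-10 nm) between two proteins. If two proteins of interest are tagged with suitable donor and acceptor fluorophores, energy transfer occurs efficiently only when the fluorophores are within this distance range, which is consistent with, but does not by itself prove, direct physical contact [22].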

- Workflow:

- Fuse proteins of interest to donor (e.g., CFP) and acceptor (e.g., YFP) fluorophores.

- Express the fusion proteins in cells.

- Excite the donor fluorophore with a specific wavelength of light.

- Measure emission from the acceptor fluorophore; increased acceptor emission indicates that the two proteins are in close proximity.

- Data Output: Spatio-temporal information about protein interactions in living cells.

- Considerations for DL: Provides high-resolution, dynamic data but is low-throughput.

The Scientist's Toolkit: Key Research Reagents

Table 3: Essential Reagents for PPI Experimental Methods

| Reagent / Resource | Function in PPI Analysis |

|---|---|

| Plasmid Vectors (Bait/Prey) | Used in Y2H to express proteins as fusions with DNA-binding or activation domains [22]. |

| Affinity Tags (e.g., FLAG, HA) | Fused to a protein of interest for purification and detection in AP-MS [22]. |

| Specific Antibodies | Bind to affinity tags or native proteins to pull down complexes in co-IP and AP-MS [1] [22]. |

| Fluorophores (e.g., CFP, YFP) | Protein tags used in FRET to detect close-proximity interactions [22]. |

| Mass Spectrometry | Identifies proteins in a complex by measuring the mass-to-charge ratio of peptides [22]. |

Computational Prediction and Deep Learning for PPIs

The limitations of experimental methods—including cost, time, and scalability—have driven the development of computational approaches. Deep learning models, in particular, have shown remarkable success in predicting PPIs directly from protein data.

Core Deep Learning Architectures for PPI Prediction

A. Graph Neural Networks (GNNs)

GNNs have become a dominant architecture for PPI prediction because they natively operate on graph-structured data, which matches both the 3D structure of individual proteins and the network structure of interactomes [1] [23].

- Graph Construction: A protein structure is represented as a graph where nodes are amino acid residues and edges represent spatial proximity or chemical bonds [23] [24].

- Message Passing: GNNs update node features by aggregating information from neighboring nodes, capturing both local and global structural contexts [1].

- Key Variants:

- Graph Convolutional Networks (GCNs): Apply convolutional operations to aggregate neighbor information [1] [23].

- Graph Attention Networks (GATs): Use attention mechanisms to weight the importance of different neighbors, improving model flexibility and interpretability [1] [25].

- Graph Isomorphism Networks (GINs): Offer high discriminative power for graph classification tasks [23].

B. Hierarchical Graph Learning (HIGH-PPI)

The HIGH-PPI framework models the natural hierarchy of PPIs by employing two GNNs [23]:

- Bottom Inside-of-Protein View (BGNN): Learns representations from the 3D graph of a single protein (residues as nodes).

- Top Outside-of-Protein View (TGNN): Learns from the PPI network where each node is a protein graph from the bottom view.

This dual perspective allows mutual optimization, where protein representations improve network learning and vice versa, leading to state-of-the-art prediction accuracy and model interpretability [23].

C. SpatialPPIv2

This model uses protein language models (e.g., ESM-2) to embed protein sequence features and combines them with a GAT to capture structural information [25]. Its key advancement is reduced dependency on experimentally determined protein structures: it can use predicted structures from tools like AlphaFold2/3 and ESMFold, which broadens its applicability [25].

Structure-Based Prediction Workflow (Struct2Graph)

Struct2Graph is a GAT-based model that predicts PPIs directly from the 3D atomic coordinates of folded protein structures [24]. The following protocol details its operation:

- Input: Protein Data Bank (PDB) files for the two candidate proteins.

- Step 1: Graph Representation.

- Each protein is converted into a graph ( G(V, E, F) ):

- ( V ) (nodes): Represent individual atoms or residues.

- ( E ) (edges): Defined based on spatial proximity (e.g., Euclidean distance within a cutoff).

- ( F ) (node features): Initialized using chemically relevant descriptors (e.g., atom type, charge, residue type) rather than raw sequence [23] [24].

- Step 2: Graph Attention Network.

- The model employs a mutual attention mechanism across the two protein graphs.

- For each node, the GAT computes attention coefficients that weight the contributions of its neighbors, allowing the model to focus on structurally and chemically important regions [24].

- Step 3: Graph Embedding.

- The updated node features are pooled (e.g., mean pooling) to generate a fixed-dimensional embedding vector for each protein graph.

- Step 4: Interaction Prediction.

- The embeddings of the two proteins are concatenated and passed through a Multi-Layer Perceptron (MLP) classifier.

- The final output is a probability score for the interaction [24].

- Output: A binary prediction (interacting/non-interacting) and, via the attention weights, identification of residues likely involved in the interaction interface.
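Steps 1, 3, and 4 of this workflow can be illustrated with a simplified sketch. It is not the Struct2Graph implementation: the attention step is left out entirely, the 8.0 cutoff is an arbitrary example value, and the helper names `contact_graph`, `mean_pool`, and `pair_embedding` are hypothetical.

```python
import math


def contact_graph(coords, cutoff=8.0):
    """Connect points whose Euclidean distance is within `cutoff` (same units as coords)."""
    edges = []
    for i in range(len(coords)):
        for j in range(i + 1, len(coords)):
            if math.dist(coords[i], coords[j]) <= cutoff:
                edges.append((i, j))
    return edges


def mean_pool(node_features):
    """Average node feature vectors into one fixed-size graph embedding."""
    n = len(node_features)
    return [sum(column) / n for column in zip(*node_features)]


def pair_embedding(features_a, features_b):
    """Concatenate the pooled embeddings of two proteins as classifier input."""
    return mean_pool(features_a) + mean_pool(features_b)


# Four residue positions along a line; the last one is far from the others.
coords = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (20.0, 0.0, 0.0)]
edges = contact_graph(coords)
pair = pair_embedding([[1.0, 0.0], [3.0, 2.0]], [[0.0, 4.0]])
print(edges)
print(pair)
```

In a full model, the node features would be updated by the attention layers before pooling, and the concatenated vector would be passed to the MLP classifier described in Step 4.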

The development of accurate deep learning models relies on access to large, high-quality datasets. The following table summarizes key public databases essential for training and benchmarking PPI prediction models.

Table 4: Key Databases for PPI Data and Analysis

| Database Name | Description | Primary Use in DL Research |

|---|---|---|

| STRING | A comprehensive database of known and predicted PPIs, integrating multiple sources [1] [23]. | Training and benchmarking network-based prediction models. |

| BioGRID | A repository of protein and genetic interactions curated from high-throughput screens and literature [1] [22]. | Source of high-confidence ground-truth data for model training. |

| IntAct | A protein interaction database maintained by the EBI, offering manually curated molecular interaction data [1] [22]. | Providing reliable, annotated positive examples for classifiers. |

| PINDER | A comprehensive dataset including structural data from RCSB PDB and AlphaFold, designed for training flexible models [25]. | Training and evaluating structure-based deep learning models like SpatialPPIv2. |

| Negatome 2.0 | A curated dataset of high-confidence, non-interacting protein pairs [25]. | Providing critical negative examples to prevent model bias and overfitting. |

| RCSB PDB | The primary database for experimentally determined 3D structures of proteins and nucleic acids [1] [25]. | Source of structural data for graph construction in models like Struct2Graph. |

Applications in Disease and Drug Discovery

The analysis of PPI networks provides a powerful framework for understanding human disease and identifying new therapeutic opportunities. Diseases often arise from mutations that disrupt normal PPIs or create aberrant new interactions [20]. Network medicine approaches analyze PPI networks to uncover disease modules—groups of interconnected proteins associated with a specific pathology [20] [21].

A key application is the identification of druggable PPI interfaces. Unlike the binding pockets of traditional drug targets, PPI interfaces tend to be larger, flatter, and more hydrophobic, presenting unique challenges [26]. Computational tools like PPI-Surfer have been developed to compare and quantify the similarity of local surface regions of different PPIs using 3D Zernike descriptors (3DZD), aiding in the repurposing of known small-molecule protein-protein interaction inhibitors (SMPPIIs) and the identification of novel binding sites [26]. This approach is valuable because it operates without requiring prior structural alignment of protein complexes.

Furthermore, research has shown that disease-associated genes display tissue-specific phenotypes, and their protein products preferentially accumulate in specific functional units (Biological Interacting Units, BioInt-U) within tissue-specific PPI networks [21]. This finding underscores the importance of context-aware network analysis for refining protein-disease associations and identifying tissue-specific therapeutic vulnerabilities.

Protein-Protein Interactions are central to biological function, and their comprehensive characterization is a cornerstone of modern computational biology. The shift from purely experimental identification to integrated computational prediction, powered by deep learning, is revolutionizing the field. Frameworks such as HIGH-PPI and Struct2Graph, which leverage graph neural networks to model the inherent hierarchy and 3D structure of proteins, are demonstrating superior accuracy and interpretability. The continued development of large-scale, high-quality datasets like PINDER, coupled with advanced protein language models, is set to further enhance the robustness and generalizability of these tools. As these methods mature, they will profoundly accelerate the mapping of the human interactome, deepen our understanding of disease mechanisms, and unlock new avenues for therapeutic intervention.

Protein data characterization provides the foundational framework for developing and training sophisticated deep learning models in computational biology. This process transforms raw biological data into structured, machine-readable information that captures the complex physical and functional principles governing protein behavior. Within the context of deep learning research, accurate characterization is not merely preliminary data processing but a critical enabler that allows models to learn the intricate relationships between protein sequence, structure, and function [1]. The reliability of downstream predictive tasks—from interaction prediction to binding site identification—depends fundamentally on the granularity and accuracy of these upstream characterization tasks.

Proteins undertake various vital activities of living organisms through their three-dimensional structures, which are determined by the linear sequence of amino acids [27]. The characterization pipeline systematically deconstructs this complexity into manageable computational tasks, each addressing a specific aspect of protein functionality. As deep learning continues to revolutionize computational biology, particularly in protein-protein interaction (PPI) research, the field is undergoing transformative changes that demand increasingly sophisticated characterization methodologies [1]. This technical guide examines the core characterization tasks that form the essential preprocessing stages for deep learning applications in proteomics and drug development.

Core Protein Characterization Tasks

Protein characterization encompasses multiple specialized tasks that collectively provide a comprehensive understanding of protein function. Each task addresses specific biological questions and generates structured data outputs suitable for deep learning model training.

Protein-Protein Interaction Prediction

Definition and Biological Significance: Protein-protein interactions are fundamental regulators of biological functions, influencing diverse cellular processes such as signal transduction, cell cycle regulation, transcriptional regulation, and cytoskeletal dynamics [1]. PPIs can be categorized based on their nature, temporal characteristics, and functions: direct and indirect interactions, stable and transient interactions, as well as homodimeric and heterodimeric interactions [1]. Different types of interactions shape their functional characteristics and work in concert to regulate cellular biological processes.

Computational Challenge: The core challenge in PPI prediction is to determine whether two proteins interact based on their sequence, structural, or evolutionary features. This binary classification problem is complicated by the enormous search space, with the human proteome alone containing approximately 200 million potential protein pairs [1] [28].
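
The size of this search space follows from simple combinatorics. The short check below assumes a round figure of 20,000 human proteins (an assumption made here for illustration; the source gives only the pair count) and counts unordered pairs of distinct proteins.

```python
from math import comb

n_proteins = 20_000  # assumed round figure for the human proteome
n_pairs = comb(n_proteins, 2)  # unordered pairs of distinct proteins

print(f"{n_pairs:,}")  # 199,990,000
```

Self-pairs (homodimers) are not included in this count; adding them would contribute another 20,000 candidates.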

Deep Learning Approaches: Modern deep learning methods have significantly advanced beyond early computational approaches that relied on manually engineered features [1]. Graph neural networks (GNNs) have demonstrated remarkable capabilities in capturing the topological information within PPI networks [28]. Specific architectures include:

- Graph Convolutional Networks (GCNs): Employ convolutional operations to aggregate information from neighboring nodes in protein interaction graphs [1].

- Graph Attention Networks (GATs): Introduce an attention mechanism that adaptively weights neighboring nodes based on their relevance [1].

- Hyperbolic GCNs: Capture the inherent hierarchical organization of PPI networks by embedding structural and relational information into hyperbolic space, where the distance from the origin naturally reflects the hierarchical level of proteins [28].

The recently developed HI-PPI framework integrates hyperbolic geometry with interaction-specific learning and reports state-of-the-art performance on benchmark datasets, improving Micro-F1 scores by 2.62% to 7.09% over previous methods [28].
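
For readers unfamiliar with the metric, the following sketch computes a micro-averaged F1 score by pooling true positives, false positives, and false negatives across all labels before computing F1. It assumes a multi-label setup in which each protein pair may carry several interaction types, encoded as rows of 0/1 values; the toy labels are invented for illustration.

```python
def micro_f1(y_true, y_pred):
    """Micro-averaged F1 over multi-label predictions given as 0/1 rows."""
    tp = fp = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t == 1 and p == 1:
                tp += 1
            elif t == 0 and p == 1:
                fp += 1
            elif t == 1 and p == 0:
                fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Each row is one protein pair; each column is one interaction type.
y_true = [[1, 0, 1], [0, 1, 0], [1, 1, 0]]
y_pred = [[1, 0, 0], [0, 1, 1], [1, 1, 0]]

score = micro_f1(y_true, y_pred)
print(score)  # 0.8
```

Because counts are pooled before averaging, frequent interaction types dominate the micro-averaged score.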

Table 1: Key Deep Learning Architectures for PPI Prediction

| Architecture | Key Mechanism | Advantages | Representative Models |
|---|---|---|---|
| Graph Convolutional Networks (GCNs) | Convolutional operations aggregating neighbor information | Effective for node classification and graph embedding | GCN-PPI, BaPPI |
| Graph Attention Networks (GATs) | Attention-based weighting of neighbor nodes | Handles graphs with diverse interaction patterns | AFTGAN |
| Hyperbolic GCNs | Embedding in hyperbolic space to capture hierarchy | Represents natural hierarchical organization of PPI networks | HI-PPI |
| Graph Autoencoders | Encoder-decoder framework for graph reconstruction | Enables hierarchical representation learning | DGAE |
| Multi-modal GNNs | Integration of sequence, structure, and network data | Handles heterogeneous protein data | MAPE-PPI |
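
The hyperbolic-embedding idea in Table 1 can be illustrated with the Poincaré ball, one common model of hyperbolic space. The sketch below computes hyperbolic distances for toy 2D embeddings and shows how points near the boundary lie far from the origin, which is what lets distance from the origin act as a proxy for hierarchical level. The choice of the Poincaré ball with curvature -1 is an assumption made here; HI-PPI may use a different formulation.

```python
import math


def poincare_distance(x, y):
    """Hyperbolic distance between two points inside the unit Poincaré ball."""
    sq_norm_x = sum(v * v for v in x)
    sq_norm_y = sum(v * v for v in y)
    sq_diff = sum((a - b) ** 2 for a, b in zip(x, y))
    arg = 1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_x) * (1.0 - sq_norm_y))
    return math.acosh(arg)


origin = [0.0, 0.0]
# Toy embeddings (hypothetical): a "root-like" protein near the origin
# and a "leaf-like" protein near the boundary of the ball.
root_like = [0.1, 0.0]
leaf_like = [0.9, 0.0]

depth_root = poincare_distance(origin, root_like)
depth_leaf = poincare_distance(origin, leaf_like)
print(depth_root, depth_leaf)
```

For a point at Euclidean radius r, the distance from the origin equals 2 artanh(r), so it grows without bound as r approaches 1.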

Interaction Site Identification

Definition and Biological Significance: Interaction site prediction focuses on identifying specific regions on the protein surface that are likely to participate in molecular interactions [1]. These binding interfaces are typically characterized by specific physicochemical properties and structural motifs that facilitate molecular recognition. Identifying precise interaction sites is crucial for understanding disease mechanisms and designing targeted therapeutics.

Computational Challenge: This task requires high-resolution structural data and involves identifying which specific amino acid residues form the interface between interacting proteins [1]. This is fundamentally a sequence labeling problem where each residue in a protein sequence is classified as either belonging to an interaction interface or not.
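
One common way to derive such per-residue labels from a solved complex is a distance cutoff between chains. The sketch below assumes one representative coordinate per residue (for example the Cα atom) and an 8 Å cutoff; both are illustrative conventions chosen here, not values taken from the cited work.

```python
from math import dist


def label_interface_residues(chain_a, chain_b, cutoff=8.0):
    """Label each residue of chain_a as 1 if any chain_b residue is within cutoff (Å)."""
    return [
        1 if any(dist(res_a, res_b) <= cutoff for res_b in chain_b) else 0
        for res_a in chain_a
    ]


# Toy coordinates in Å (hypothetical), one point per residue.
chain_a = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (20.0, 0.0, 0.0)]
chain_b = [(0.0, 6.0, 0.0), (30.0, 30.0, 0.0)]

labels = label_interface_residues(chain_a, chain_b)
print(labels)  # [1, 1, 0]
```

The resulting 0/1 vector has one entry per residue and can serve directly as the target for a sequence labeling model.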

Deep Learning Approaches: Interaction site prediction leverages both sequence-based and structure-based deep learning models:

- Convolutional Neural Networks (CNNs): Applied to protein sequences and structures to detect local patterns indicative of binding sites.

- Geometric Deep Learning: Operates directly on 3D protein structures to identify spatial features that characterize interaction interfaces.

- Multi-scale Architectures: Methods like Topotein employ topological deep learning through Protein Combinatorial Complex (PCC) representations that capture hierarchical organization from residues to secondary structures to complete proteins [29].

Cross-Species Interaction Prediction

Definition and Biological Significance: Cross-species interaction prediction aims to predict protein interactions across different species, facilitating the integration of data from diverse organisms and enabling transfer learning applications [1]. This task is particularly valuable for extending knowledge from model organisms to humans or for studying host-pathogen interactions.

Computational Challenge: The fundamental challenge is leveraging interaction patterns learned from well-studied organisms to make predictions in less-characterized species despite evolutionary divergence.

Deep Learning Approaches: Transfer learning and domain adaptation techniques are particularly valuable for this task:

- Domain-Domain Interaction Methods: Leverage the conservation of domain interactions across species, using approaches like Maximum Likelihood Estimation and Bayesian methods to infer interaction probabilities [30].

- Transfer Learning: Pre-training models on large datasets from model organisms followed by fine-tuning on target species.

- Orthology-Based Methods: Utilize evolutionary relationships between proteins across species to transfer interaction annotations.
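
The orthology-based idea can be sketched as a simple mapping step: if two proteins interact in a source species and both have orthologs in the target species, the ortholog pairs become candidate interactions. The function name, the ortholog table, and the identifiers below are hypothetical and serve only to illustrate the transfer.

```python
def transfer_interactions(source_interactions, orthologs):
    """Map source-species pairs to candidate target-species pairs via orthologs."""
    predicted = set()
    for a, b in source_interactions:
        # A pair is transferred only if both partners have at least one ortholog.
        for target_a in orthologs.get(a, ()):
            for target_b in orthologs.get(b, ()):
                predicted.add(tuple(sorted((target_a, target_b))))
    return predicted


# Toy data: yeast-like IDs mapped to human-like IDs (all invented).
source_interactions = [("yA", "yB"), ("yB", "yC")]
orthologs = {"yA": ["hA"], "yB": ["hB1", "hB2"]}  # yC has no known ortholog

predicted = transfer_interactions(source_interactions, orthologs)
print(sorted(predicted))
```

Candidates produced this way are hypotheses only; evolutionary divergence means they still need experimental or model-based confirmation.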

PPI Network Construction and Analysis

Definition and Biological Significance: The construction and analysis of PPI networks provide invaluable insights into global interaction patterns and the identification of functional modules, which are essential for understanding the complex regulatory mechanisms governing cellular processes [1]. These networks represent proteins as nodes and their interactions as edges, creating a systems-level view of cellular machinery.
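
As a minimal sketch of this node-and-edge representation, the snippet below builds an undirected adjacency structure from a list of interacting pairs and ranks proteins by degree. The identifiers are toy values and the helper name is an assumption made for illustration.

```python
from collections import defaultdict


def build_ppi_graph(interactions):
    """Build an undirected adjacency map {protein: set of partners}."""
    graph = defaultdict(set)
    for a, b in interactions:
        graph[a].add(b)
        graph[b].add(a)
    return dict(graph)


# Toy interaction list (hypothetical identifiers).
interactions = [("P1", "P2"), ("P1", "P3"), ("P1", "P4"), ("P5", "P6")]

graph = build_ppi_graph(interactions)
degree = {protein: len(partners) for protein, partners in graph.items()}
hub = max(degree, key=degree.get)  # protein with the most partners
print(hub, degree[hub])  # P1 3
```

Degree is only the simplest network statistic, but highly connected proteins are a natural starting point when inspecting a new network.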

Computational Challenge: The key challenges include integrating heterogeneous data sources, handling noise and incompleteness in interaction data, and extracting biologically meaningful patterns from complex networks.

Deep Learning Approaches: GNN-based approaches excel at learning representations that capture both local and global properties of PPI networks:

- Graph Embedding Methods: Learn low-dimensional representations of proteins that preserve their structural roles in the network.

- Community Detection Algorithms: Identify densely connected clusters within PPI networks that often correspond to functional modules or protein complexes.

- Hierarchical Representation Learning: Methods like HI-PPI explicitly model the multi-scale organization of PPI networks, from individual interactions to pathway-level organization [28].
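
Community detection methods vary widely in sophistication. As the crudest possible stand-in, the sketch below groups proteins into connected components with a breadth-first search; real community detection algorithms go further and split densely connected regions within a component. The function name and toy identifiers are illustrative assumptions.

```python
from collections import defaultdict, deque


def connected_components(interactions):
    """Group proteins into connected components of the interaction graph."""
    graph = defaultdict(set)
    for a, b in interactions:
        graph[a].add(b)
        graph[b].add(a)

    seen = set()
    components = []
    for start in sorted(graph):
        if start in seen:
            continue
        # Breadth-first search from an unvisited protein.
        component = {start}
        seen.add(start)
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    component.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


# Toy network with two separate groups (hypothetical identifiers).
modules = connected_components([("P1", "P2"), ("P2", "P3"), ("P4", "P5")])
print(modules)
```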

Experimental Methodologies for PPI Characterization

A comprehensive understanding of experimental methods for PPI detection is essential for properly interpreting and leveraging the data these methods generate for deep learning applications.

In Vivo Methods

Yeast Two-Hybrid (Y2H) Systems: Y2H is typically carried out by screening a protein of interest against a random library of potential protein partners [31]. This method detects binary interactions through reconstitution of transcription factor activity in yeast nuclei. While Y2H provides valuable data on direct physical interactions, it suffers from limitations including high false positive rates estimated at 0.2 to 0.5, and an inability to detect interactions that require post-translational modifications not present in the yeast system [30] [31].
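
To see what this error range means for a training set, the short calculation below estimates how many reported Y2H interactions are likely to be genuine. It assumes the 0.2 to 0.5 figure is read as the fraction of reported interactions that are spurious, and it uses an invented screen size of 1,000 reported pairs.

```python
def expected_true_positives(n_reported, false_positive_fraction):
    """Expected number of genuine interactions among the reported ones."""
    return n_reported * (1.0 - false_positive_fraction)


n_reported = 1000  # hypothetical number of interactions reported by a screen

pessimistic = expected_true_positives(n_reported, 0.5)
optimistic = expected_true_positives(n_reported, 0.2)
print(pessimistic, optimistic)
```

Under these assumptions, between roughly half and four fifths of the positive labels would be correct, which is one reason Y2H-derived labels are often filtered or down-weighted before model training.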

Synthetic Lethality: This approach identifies functional interactions rather than direct physical interactions by observing when simultaneous disruption of two genes results in cell death [31]. Synthetic lethality provides information about genetic interactions and functional relationships within pathways.

In Vitro Methods

Tandem Affinity Purification-Mass Spectrometry (TAP-MS): TAP-MS is based on the double tagging of the protein of interest at its chromosomal locus, followed by a two-step purification process and mass spectrometric analysis [31]. This method identifies protein complexes rather than binary interactions, providing insights into functional modules within the cell. A significant advantage of TAP tagging is its ability to identify a wide variety of protein complexes and to test the activity of monomeric or multimeric protein complexes that exist in vivo [31].

Affinity Chromatography: This highly sensitive method can detect even weak interactions between proteins, and it tests all sample proteins equally for interaction with the protein coupled to the column [31]. However, false positive results may occur due to non-specific binding, requiring validation through complementary methods.

Co-immunoprecipitation (Co-IP): This method confirms interactions using a whole cell extract where proteins are present in their native form in a complex mixture of cellular components that may be required for successful interactions [31]. The use of eukaryotic cells enables post-translational modifications which may be essential for interaction.

Protein Microarrays: These involve printing various protein molecules on a glass surface in an ordered manner, allowing high-throughput screening of interactions [31]. Protein microarrays enable efficient and sensitive parallel analysis of thousands of parameters within a single experiment.

X-ray Crystallography and NMR Spectroscopy: These structural biology techniques enable visualization of protein structures at the atomic level, providing detailed information about interaction interfaces [31]. While not high-throughput, these methods offer unparalleled resolution for understanding the structural basis of PPIs.

Table 2: Experimental Methods for PPI Detection

| Method | Type | Key Principle | Throughput | Key Limitation |