Decoding the Protein Universe: A Comprehensive Guide to ESM2 and ProtBERT in Computational Biology

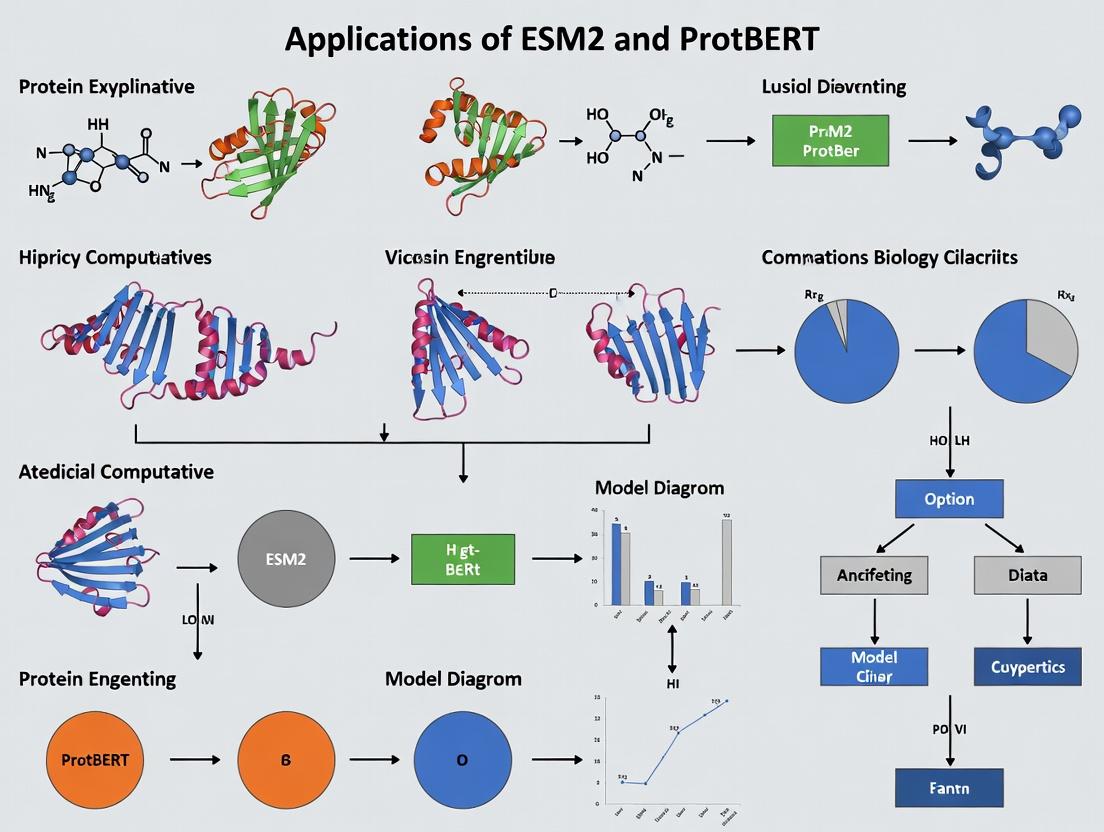

This article provides a thorough exploration of the cutting-edge protein language models, ESM2 and ProtBERT, and their transformative applications in computational biology.

Abstract

This article provides a thorough exploration of the cutting-edge protein language models, ESM2 and ProtBERT, and their transformative applications in computational biology. Designed for researchers, scientists, and drug development professionals, the content moves from foundational concepts to advanced applications. We cover the fundamental architectures and training principles of these models, detail their practical use in tasks like variant effect prediction, protein design, and function annotation, and address common challenges in implementation and fine-tuning. The guide also offers a comparative analysis of model performance across different benchmarks and concludes by synthesizing the current state of the field and its profound implications for accelerating biomedical discovery and therapeutic development.

Protein Language Models 101: Understanding ESM2 and ProtBERT Architectures and Core Capabilities

The application of Natural Language Processing (NLP) concepts to protein sequences represents a paradigm shift in computational biology. This whitepaper frames this approach within the broader thesis of employing advanced language models, specifically ESM2 and ProtBERT, to decode the "language of life" for transformative research in drug development and fundamental biology. Proteins, composed of amino acid "words," form functional "sentences" with structure, function, and evolutionary meaning, making them intrinsically suitable for language model analysis.

Foundational Concepts: NLP to Protein Linguistics

The core analogy maps NLP components to biological equivalents:

- Vocabulary: The 20 standard amino acids (plus special tokens for padding, mask, etc.).

- Sequence: The linear chain of residues (a "sentence").

- Grammar/Syntax: The physico-chemical rules and constraints governing folding and stability.

- Semantics: The protein's three-dimensional structure and biological function.

- Context: The cellular environment, interacting partners, and evolutionary history.
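To make the vocabulary and sequence analogy concrete, the sketch below tokenizes a protein sequence the way a language-model tokenizer would. The special-token names and the ID layout are illustrative assumptions; they do not reproduce the actual ESM2 or ProtBERT vocabularies.

```python
# Toy protein tokenizer: one token per residue plus special tokens.
# The ID layout below is hypothetical, not the real ESM2/ProtBERT vocabulary.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids
SPECIAL_TOKENS = ["<cls>", "<pad>", "<eos>", "<unk>", "<mask>"]

VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
VOCAB.update({aa: i + len(SPECIAL_TOKENS) for i, aa in enumerate(AMINO_ACIDS)})


def tokenize(sequence, max_len=None):
    """Map a sequence to token IDs, framed by <cls>/<eos> and optionally padded."""
    ids = [VOCAB["<cls>"]]
    ids += [VOCAB.get(residue, VOCAB["<unk>"]) for residue in sequence.upper()]
    ids.append(VOCAB["<eos>"])
    if max_len is not None:
        ids += [VOCAB["<pad>"]] * (max_len - len(ids))
    return ids


# "X" is not one of the 20 standard residues, so it maps to <unk>.
token_ids = tokenize("MKAX", max_len=8)
print(token_ids)
```

Each residue becomes one "word" ID, and the special tokens mark sequence boundaries, padding, and unknown symbols, which is all a transformer needs to treat a protein as a sentence.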

Large-scale self-supervised learning on massive protein sequence databases allows models like ESM2 and ProtBERT to internalize these complex relationships without explicit structural or functional labels.

Key Models: ESM2 and ProtBERT

ESM2 (Evolutionary Scale Modeling)

A transformer-based model developed by Meta AI, with up to 15 billion parameters, trained using the Masked Language Modeling (MLM) objective on the UniRef database. It excels at learning evolutionary patterns and predicting structure directly from sequence.

ProtBERT

A BERT-based model, also trained with MLM on UniRef and BFD databases. It captures deep contextual embeddings for each amino acid, useful for downstream functional predictions.

Table 1: Comparative Overview of ESM2 and ProtBERT

| Feature | ESM2 (15B param version) | ProtBERT |

|---|---|---|

| Architecture | Transformer (Encoder-only) | BERT (Encoder-only) |

| Parameters | Up to 15 Billion | ~420 Million |

| Training Data | UniRef90 (65M sequences) | UniRef100 (216M seqs) + BFD |

| Primary Training Objective | Masked Language Modeling (MLM) | Masked Language Modeling (MLM) |

| Key Output | Sequence embeddings, contact maps, structure | Contextual residue embeddings |

| Typical Application | Structure prediction, evolutionary analysis | Function prediction, variant effect |

| Model Accessibility | Publicly available (GitHub, Hugging Face) | Publicly available (Hugging Face) |

Experimental Protocols & Applications

Protocol: Zero-Shot Prediction of Fitness from Sequence

This protocol uses model-derived embeddings to predict the functional effect of mutations without task-specific training.

- Sequence Embedding Generation: Input the wild-type and mutant protein sequences separately into ESM2. Extract the last hidden layer embeddings for each token (amino acid).

- Embedding Distance Calculation: Compute the cosine distance or Euclidean distance between the wild-type and mutant sequence embeddings. For single-point mutations, focus on the local context window around the mutated residue.

- Fitness Score Correlation: Correlate the computed embedding distance with experimentally measured fitness scores (e.g., from deep mutational scanning studies). A higher distance often correlates with a larger functional impact.

- Validation: Perform statistical validation (e.g., Pearson/Spearman correlation) against held-out experimental data.
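The protocol above can be sketched end to end with toy numbers. In this minimal example the per-residue embeddings, the mutation names, and the fitness values are all made up for illustration; in practice the embeddings would come from ESM2 and the fitness values from a deep mutational scan.

```python
import math


def mean_pool(residue_embeddings):
    """Average per-residue vectors into one sequence-level vector."""
    n = len(residue_embeddings)
    dim = len(residue_embeddings[0])
    return [sum(vec[d] for vec in residue_embeddings) / n for d in range(dim)]


def cosine_distance(u, v):
    """1 - cosine similarity; 0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def ranks(values):
    """1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(x, y):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Toy per-residue embeddings (3 residues x 2 dims); hypothetical values.
wild_type = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mutants = {
    "A2G": [[1.0, 0.0], [0.1, 0.9], [1.0, 1.0]],
    "A2W": [[1.0, 0.0], [1.0, -1.0], [1.0, 1.0]],
    "A2P": [[1.0, 0.0], [2.0, -3.0], [1.0, 1.0]],
}
measured_fitness = {"A2G": 0.95, "A2W": 0.40, "A2P": 0.05}  # made-up values

wt_vec = mean_pool(wild_type)
names = sorted(mutants)
distances = [cosine_distance(wt_vec, mean_pool(mutants[m])) for m in names]
rho = spearman(distances, [measured_fitness[m] for m in names])
print(dict(zip(names, distances)), rho)
```

A strongly negative rho here means that larger embedding distances go with lower fitness, which is the relationship the protocol is looking for. Mean pooling over the whole sequence is only one option; restricting the comparison to a window around the mutated residue follows the same pattern.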

Protocol: Embedding-Based Protein Function Prediction

Using ProtBERT/ESM2 embeddings as input features for supervised classifiers.

- Dataset Curation: Assemble a dataset of protein sequences labeled with Gene Ontology (GO) terms or Enzyme Commission (EC) numbers.

- Feature Extraction: For each sequence, generate a per-residue embedding using ProtBERT. Create a single sequence-level embedding by applying mean pooling across the sequence length.

- Classifier Training: Feed the pooled embeddings into a shallow neural network or gradient-boosting classifier (e.g., XGBoost) to predict the functional labels.

- Evaluation: Assess performance using standard metrics (F1-score, AUPRC) in a cross-validation setup, comparing against baseline methods like BLAST.

Table 2: Performance Benchmarks (Representative Studies)

| Task | Model Used | Metric | Reported Performance | Baseline (e.g., BLAST/Physical Model) |

|---|---|---|---|---|

| Contact Prediction | ESM2 (15B) | Precision@L/5 | 0.85 (for large proteins) | 0.45 (from covariation) |

| Variant Effect Prediction | ESM1v (ensemble) | Spearman's ρ | 0.73 (on deep mutational scans) | 0.55 (EVE model) |

| Remote Homology Detection | ProtBERT Embeddings | ROC-AUC | 0.92 | 0.78 (HMMer) |

| Structure Prediction | ESMFold (based on ESM2) | TM-score | >0.7 for many targets | Varies widely |

Visualizing Workflows and Relationships

Title: NLP-Protein Analogy & Model Application Workflow

Title: Zero-Shot Variant Effect Prediction Protocol

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Toolkit for Protein Language Modeling

| Item / Resource | Function / Purpose | Example / Provider |

|---|---|---|

| Protein Sequence Databases | Raw "text" for model training and inference. Provides evolutionary context. | UniRef, BFD, MGnify |

| Pre-trained Model Weights | The core trained model enabling transfer learning without costly pre-training. | ESM2 (Hugging Face, Meta), ProtBERT (Hugging Face) |

| Embedding Extraction Code | Software to generate numerical representations from raw sequences using pre-trained models. | bio-embeddings pipeline, ESM transformers library |

| Functional Annotation Databases | Ground truth labels for supervised training and evaluation of model predictions. | Gene Ontology (GO), Pfam, Enzyme Commission (EC) |

| Variant Effect Benchmarks | Experimental datasets for validating zero-shot or fine-tuned predictions. | ProteinGym (DMS assays), ClinVar (human variants) |

| Structural Data Repositories | High-quality 3D structures for validating contact/structure predictions. | Protein Data Bank (PDB), AlphaFold DB |

| High-Performance Computing (HPC) | GPU/TPU clusters necessary for running large models (ESM2) and generating embeddings at scale. | Local clusters, Cloud (AWS, GCP), Academic HPC centers |

The application of large-scale language models to biological sequences represents a paradigm shift in computational biology. Within this thesis, which explores the Applications of ESM2 and ProtBERT in research, ESM2 stands out for its scale and direct evolutionary learning. While ProtBERT, trained on UniRef100, leverages the BERT architecture for protein understanding, ESM2's core innovation is its use of the evolutionary sequence record as its fundamental training signal, modeled at unprecedented scale.

Core Architecture and Key Innovations of ESM2

ESM2 is a transformer-based language model specifically architected for protein sequences. Its key innovations include:

- Evolutionary-Scale Training Objective: It uses a standard masked language modeling (MLM) objective applied to sequences sampled from across the tree of life. The model predicts each masked amino acid from the rest of the same sequence; it does not receive aligned homologs as input, so evolutionary patterns are learned implicitly from the variation seen across the training set.

- Scalable Transformer Architecture: ESM2 variants range from 8M to 15B parameters. The models incorporate:

  - Rotary Position Embeddings (RoPE): For better generalization across sequence lengths.

  - GELU Feed-Forward Networks: A standard two-layer feed-forward block in every transformer layer.

  - Pre-Layer Normalization: For stable training.

- Contextualized Representation: Each amino acid in a sequence is represented as a high-dimensional vector (embedding) that encodes its structural and functional context within the protein.
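As a rough illustration of the rotary position embeddings mentioned above, the sketch below rotates consecutive pairs of vector components by a position-dependent angle. It is a minimal pure-Python version, not the ESM2 implementation; the pairing of dimensions and the base of 10000 are assumptions, and real implementations may pair dimensions differently.

```python
import math


def rotary_embed(vector, position, base=10000.0):
    """Rotate consecutive pairs of `vector` by position-dependent angles."""
    dim = len(vector)
    assert dim % 2 == 0, "RoPE needs an even dimension"
    rotated = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)  # slower rotation for later pairs
        x, y = vector[i], vector[i + 1]
        rotated.append(x * math.cos(theta) - y * math.sin(theta))
        rotated.append(x * math.sin(theta) + y * math.cos(theta))
    return rotated


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


query = [0.5, -1.0, 2.0, 0.25]
key = [1.5, 0.5, -0.75, 1.0]

# Same relative offset (3 residues) at two different absolute positions.
score_near = dot(rotary_embed(query, 5), rotary_embed(key, 2))
score_far = dot(rotary_embed(query, 105), rotary_embed(key, 102))
print(score_near, score_far)
```

The two attention scores agree up to floating-point error because the rotated dot product depends only on the offset between the two positions, which is why this scheme tends to generalize across sequence lengths.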

Model Scale and Training Data Specifications

ESM2 was trained on the UniRef90 dataset (2022 release), which clusters UniProt sequences at 90% identity. The model family scales across several orders of magnitude in parameters and training compute.

Table 1: ESM2 Model Family Scale and Training Data

| Model Variant | Parameters (Billions) | Layers | Embedding Dimension | Training Tokens (Billions) | Dataset |

|---|---|---|---|---|---|

| ESM2-8M | 0.008 | 6 | 320 | 0.1 | UniRef90 (2022) |

| ESM2-35M | 0.035 | 12 | 480 | 0.5 | UniRef90 (2022) |

| ESM2-150M | 0.15 | 30 | 640 | 1.0 | UniRef90 (2022) |

| ESM2-650M | 0.65 | 33 | 1280 | 2.5 | UniRef90 (2022) |

| ESM2-3B | 3.0 | 36 | 2560 | 12.5 | UniRef90 (2022) |

| ESM2-15B | 15.0 | 48 | 5120 | 25.0+ | UniRef90 (2022) |

Experimental Protocols for Key Applications

Protocol 1: Extracting Protein Structure Representations (for Folding)

- Input: A single protein sequence in FASTA format.

- Tokenization: The sequence is tokenized into standard amino acid tokens plus special tokens (e.g., `<cls>`, `<eos>`).

- Forward Pass: The sequence is passed through the pretrained ESM2 model (e.g., ESM2-3B or ESM2-15B) without fine-tuning.

- Representation Extraction: The hidden state outputs from the final transformer layer are extracted. The representations for all tokens correspond to the full sequence.

- Structure Prediction: These representations are used as input to a folding head (a simple trRosetta-style structure module) that predicts a distance distribution and dihedral angles for each residue pair.

- Structure Generation: The predicted distances and angles are converted into 3D coordinates using a differentiable structure module.

Protocol 2: Zero-Shot Fitness Prediction for Mutations

- Input: A wild-type protein sequence and a list of single or multiple point mutations.

- Sequence Scoring: The model computes a pseudo-log-likelihood score for the wild-type (`S_wt`) and each mutant sequence (`S_mut`). Each position is masked in turn, and the log probabilities that the model assigns to the true residues under the MLM objective are summed.

- Fitness Score Calculation: The pseudo-log-likelihood ratio is computed as the difference `Δlog P = S_mut - S_wt`. A higher `Δlog P` indicates the model deems the mutant sequence more "natural," often correlating with functional fitness.

- Validation: Scores are benchmarked against experimental deep mutational scanning (DMS) data to establish correlation metrics (e.g., Spearman's ρ).
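The scoring steps above can be sketched without a real model. In the example below, `toy_masked_probs` is a hypothetical stand-in for an ESM2 forward pass with one position masked; it ignores context and simply favors a made-up consensus sequence, so only the bookkeeping is representative.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
CONSENSUS = "MKTAYIAK"  # hypothetical consensus used by the toy model


def toy_masked_probs(sequence, position):
    """Stand-in for an MLM forward pass with `position` masked.

    Returns P(residue | context). Here the consensus residue gets 0.62 and
    each of the other 19 residues gets 0.02, so the distribution sums to 1.
    """
    return {aa: 0.62 if aa == CONSENSUS[position] else 0.02 for aa in AMINO_ACIDS}


def pseudo_log_likelihood(sequence, masked_probs=toy_masked_probs):
    """Mask each position in turn and sum log P(true residue | context)."""
    return sum(
        math.log(masked_probs(sequence, i)[residue])
        for i, residue in enumerate(sequence)
    )


def apply_mutations(sequence, mutations):
    """Apply mutations written like 'T3A' (wild type, 1-based position, mutant)."""
    residues = list(sequence)
    for mutation in mutations:
        wt, pos, mut = mutation[0], int(mutation[1:-1]), mutation[-1]
        if residues[pos - 1] != wt:
            raise ValueError(f"{mutation}: expected {wt}, found {residues[pos - 1]}")
        residues[pos - 1] = mut
    return "".join(residues)


wild_type = "MKTAYIAK"
s_wt = pseudo_log_likelihood(wild_type)
for variant in (["T3A"], ["T3A", "Y5F"]):
    s_mut = pseudo_log_likelihood(apply_mutations(wild_type, variant))
    print(variant, round(s_mut - s_wt, 3))  # delta log P; lower = less "natural"
```

With a real model, `masked_probs` would run one forward pass per masked position, so scoring a sequence of length L costs L passes; this is the main practical cost of pseudo-log-likelihood scoring.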

Visualizations

Title: ESM2 Protein Structure Prediction Workflow

Title: Zero-Shot Mutation Fitness Prediction with ESM2

The Scientist's Toolkit: Key Research Reagents & Materials

Table 2: Essential Resources for Working with ESM2 in Research

| Item Name | Type (Software/Data/Service) | Primary Function in ESM2 Research |

|---|---|---|

| ESM2 Model Weights | Pre-trained Model | Provides the foundational parameters for all downstream tasks (available via Hugging Face, FAIR). |

| Hugging Face transformers Library | Software Library (Python) | Standard interface for loading ESM2 models, tokenizing sequences, and running inference. |

| PyTorch | Software Framework | Deep learning framework required to run ESM2 models. |

| UniRef90 (latest release) | Protein Sequence Database | The curated dataset used for training; used for benchmarking and understanding model scope. |

| Protein Data Bank (PDB) | Structure Database | Provides ground-truth 3D structures for validating ESM2's structure predictions and embeddings. |

| Deep Mutational Scanning (DMS) Datasets | Experimental Data | Benchmarks (e.g., from ProteinGym) for evaluating zero-shot fitness prediction accuracy. |

| ColabFold / OpenFold | Software Pipeline | Integrates ESM2 embeddings with fast, homology-free structure prediction for end-to-end analysis. |

| Biopython | Software Library | Handles sequence I/O, manipulation, and analysis of FASTA files in conjunction with ESM2 outputs. |

| High-Performance Computing (HPC) Cluster or Cloud GPU (A100/V100) | Hardware | Essential for running the largest ESM2 models (3B, 15B) and conducting large-scale inference. |

This analysis of ProtBERT is situated within a broader thesis investigating the transformative role of deep learning protein language models (pLMs), specifically ESM2 and ProtBERT, in computational biology. Both models are encoder-only transformers trained with masked language modeling rather than causal, autoregressive decoders; ProtBERT follows the original BERT design most closely, acting as a denoising autoencoder over protein sequences. Understanding ProtBERT's training approach is essential for comparing model philosophies and selecting the appropriate tool for tasks such as function prediction, variant effect analysis, and therapeutic protein design.

Core Architecture: Adaptation of BERT to Protein Sequences

ProtBERT is built upon the Bidirectional Encoder Representations from Transformers (BERT) architecture. Its core innovation is applying BERT's masked language modeling (MLM) objective to the "language" of proteins, where the vocabulary consists of the 20 standard amino acids plus special tokens.

- Tokenizer: A single-amino-acid tokenizer, where each character (e.g., "M", "K", "A") is treated as a distinct token.

- Embedding: Token embeddings are combined with learned positional embeddings to inform the model of residue order.

- Encoder Stack: Like BERT, it uses a multi-layer, bidirectional Transformer encoder. This allows every position in the sequence to incorporate context from all other positions, both left and right.

- Output: The model outputs a contextualized representation (embedding) for every input amino acid position.

Unique Training Approach: Masked Language Modeling on Proteins

The pre-training objective is what specializes BERT for proteins. A percentage of input amino acids (typically 15%) is randomly masked. The model must predict the original identity of these masked tokens based on the full, bidirectional context of the surrounding sequence.

Key Training Protocol Details:

- Dataset: Trained on the UniRef100 database (the ProtBERT-BFD variant is trained on BFD instead), containing hundreds of millions of diverse protein sequences.

- Masking Strategy: Similar to BERT, using the [MASK] token 80% of the time, a random amino acid 10% of the time, and the unchanged original amino acid 10% of the time.

- Objective Function: Cross-entropy loss calculated only on the predictions for the masked positions.

- Implied Learning: This task forces the model to internalize the complex biophysical and evolutionary constraints governing protein sequences, learning concepts like secondary structure propensity, residue conservation, and co-evolutionary signals.
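The masking strategy described above is easy to get subtly wrong, so a small sketch may help. This is a minimal pure-Python version of the 80/10/10 rule, assuming a 15% selection rate and a literal `[MASK]` string as the mask token; it is not the ProtBERT training code.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
MASK = "[MASK]"


def mask_for_mlm(tokens, mask_rate=0.15, rng=None):
    """Return (corrupted_tokens, labels) using BERT's 80/10/10 rule.

    labels[i] holds the original residue at selected positions and None
    elsewhere, so the loss is computed only where a prediction is required.
    """
    rng = rng or random.Random()
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_rate:
            continue  # position not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(AMINO_ACIDS)  # 10%: random residue
        # remaining 10%: keep the original residue unchanged
    return corrupted, labels


sequence = list("MKTAYIAKQRQISFVKSHFSRQLEERLGLIEVQ")
corrupted, labels = mask_for_mlm(sequence, rng=random.Random(0))
print("".join(t if t != MASK else "#" for t in corrupted))
print([i for i, label in enumerate(labels) if label is not None])
```

Keeping some selected positions unchanged or randomly substituted means the model cannot assume that every non-mask token is correct, which pushes it to build a useful representation for every residue.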

Comparative Quantitative Performance

ProtBERT's embeddings serve as powerful features for downstream prediction tasks. Performance is often benchmarked against other pLMs like ESM2.

Table 1: Performance Comparison on Protein Function Prediction (DeepFRI)

| Model (Embedding Source) | GO Molecular Function F1 (↑) | GO Biological Process F1 (↑) | Enzyme Commission F1 (↑) |

|---|---|---|---|

| ProtBERT (BFD) | 0.53 | 0.46 | 0.78 |

| ESM-2 (650M params) | 0.58 | 0.49 | 0.81 |

| One-Hot Encoding (Baseline) | 0.35 | 0.31 | 0.62 |

Table 2: Performance on Stability Prediction (Thermostability)

| Model | Spearman's ρ (↑) | RMSE (↓) |

|---|---|---|

| ProtBERT Fine-tuned | 0.73 | 1.05 °C |

| ESM-2 Fine-tuned | 0.75 | 0.98 °C |

| Traditional Features (e.g., PoPMuSiC) | 0.65 | 1.30 °C |

Experimental Protocol for Downstream Fine-tuning

A standard protocol for adapting ProtBERT to a specific task (e.g., fluorescence prediction) is outlined below.

Title: ProtBERT Fine-tuning Workflow for Property Prediction

Detailed Methodology:

- Data Preparation: Curate a labeled dataset (sequence, target value). Split into train/validation/test sets.

- Sequence Preprocessing: Truncate or pad sequences to a defined maximum length (e.g., 512 residues).

- Model Setup: Load the pre-trained ProtBERT model. Add a task-specific prediction head (a feed-forward neural network) on top of the pooled output (e.g., the [CLS] token embedding or mean of residue embeddings).

- Fine-tuning: Train the entire model (or only the task head) using backpropagation with a task-appropriate loss (MSE for regression, Cross-Entropy for classification). Use a low learning rate (e.g., 1e-5) to avoid catastrophic forgetting.

- Evaluation: Validate on the held-out test set using domain-relevant metrics (Spearman's ρ, RMSE, AUROC).
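The preprocessing and pooling steps of this methodology can be illustrated in isolation. The sketch below assumes a padding ID of 0 and uses toy two-dimensional residue vectors; the helper names are hypothetical and the real tokenizer handles these steps internally.

```python
def pad_or_truncate(token_ids, max_len, pad_id=0):
    """Return (ids, attention_mask), both exactly `max_len` long."""
    ids = list(token_ids[:max_len])
    mask = [1] * len(ids)
    padding = max_len - len(ids)
    return ids + [pad_id] * padding, mask + [0] * padding


def masked_mean(residue_vectors, attention_mask):
    """Mean over real residues only, so padding does not dilute the embedding."""
    kept = [vec for vec, keep in zip(residue_vectors, attention_mask) if keep]
    return [sum(column) / len(kept) for column in zip(*kept)]


ids, mask = pad_or_truncate([7, 8, 9], max_len=5)
print(ids, mask)

# Toy residue embeddings for the padded sequence (last two rows are padding).
vectors = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [0.0, 0.0], [0.0, 0.0]]
pooled = masked_mean(vectors, mask)
print(pooled)
```

The pooled vector is what a task-specific head would consume. Averaging over the attention mask rather than the full padded length matters most when sequence lengths in a batch vary widely.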

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools for Working with ProtBERT

| Item / Solution | Function in Research | Example / Note |

|---|---|---|

| Transformers Library (Hugging Face) | Provides the Python API to load, manage, and fine-tune ProtBERT and similar models. | AutoModelForMaskedLM, AutoTokenizer |

| Pre-trained Model Weights | The core trained parameters of ProtBERT, enabling transfer learning. | Rostlab/prot_bert (Hugging Face Hub) |

| Protein Sequence Database (UniRef) | Source data for pre-training and for creating custom fine-tuning datasets. | UniRef100, UniRef90 |

| High-Performance Compute (HPC) Cluster/GPU | Accelerates the computationally intensive fine-tuning and inference processes. | NVIDIA A100/V100 GPU |

| Feature Extraction Pipeline | Scripts to generate per-residue or per-sequence embeddings from raw FASTA files. | Outputs .npy or .h5 files of embeddings. |

| Downstream ML Library | Toolkit for building and training the task-specific prediction head. | PyTorch, Scikit-learn, TensorFlow |

| Visualization Suite | For interpreting attention maps or analyzing embedding spaces. | logomaker for attention, UMAP/t-SNE for embeddings |

What Do Embeddings Represent? Interpreting the Learned Biological Knowledge

Within the broader thesis on the applications of ESM2 (Evolutionary Scale Modeling) and ProtBERT in computational biology research, a central question emerges: what biological knowledge do these models' learned embeddings truly represent? This in-depth guide explores the interpretability of embeddings from state-of-the-art protein language models (pLMs), detailing how they encode structural, functional, and evolutionary principles critical for drug development and basic research.

Embeddings as Biological Knowledge Repositories

Protein language models are trained on millions of protein sequences to predict masked amino acids. Through this self-supervised objective, they learn to generate dense vector representations—embeddings—for each sequence or residue. Evidence indicates these embeddings encapsulate a hierarchical understanding of protein biology.

Table 1: Quantitative Correlations Between Embedding Dimensions and Protein Properties

| Protein Property | Model | Reported Metric | Embedding Layer Used | Reference |

|---|---|---|---|---|

| Secondary Structure | ESM2-650M | 0.78 (3-state accuracy) | Layer 32 | Rao et al., 2021 |

| Solvent Accessibility | ProtBERT | 0.65 (relative accessibility) | Layer 24 | Elnaggar et al., 2021 |

| Evolutionary Coupling | ESM2-3B | 0.85 (precision top L/5) | Layer 36 | Lin et al., 2023 |

| Fluorescence Fitness | ESM1v | 0.67 (Spearman's ρ) | Weighted Avg Layers 33 | Hsu et al., 2022 |

| Binding Affinity | ProtBERT | 0.71 (ΔΔG prediction) | Layer 30 | Brandes et al., 2022 |

Experimental Protocols for Interpreting Embeddings

Protocol 1: Linear Projection for Property Prediction

This protocol tests if specific protein properties are linearly encoded in the embedding space.

- Embedding Extraction: Generate per-residue or per-protein embeddings from a frozen pLM (e.g., ESM2) for a curated dataset (e.g., ProteinNet).

- Label Alignment: Annotate each sample with target properties (e.g., secondary structure from DSSP, stability ΔΔG from experimental assays).

- Probe Training: Train a simple linear model (e.g., logistic regression for discrete, ridge regression for continuous properties) on the embeddings to predict the target property.

- Evaluation: Assess prediction accuracy via cross-validation. High accuracy suggests the property is linearly represented in the embedding manifold.
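A linear probe is small enough to write by hand. The sketch below trains a logistic-regression probe with plain gradient descent on toy two-dimensional "embeddings"; the data, the helix/non-helix labels, and the hyperparameters are invented for illustration, and in practice a library such as Scikit-learn would be the usual choice.

```python
import math


def train_logistic_probe(features, labels, lr=0.5, epochs=500, l2=1e-3):
    """Fit weights and bias of a logistic-regression probe by gradient descent."""
    dim = len(features[0])
    weights, bias = [0.0] * dim, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            error = 1.0 / (1.0 + math.exp(-z)) - y  # gradient of cross-entropy w.r.t. z
            grad_b += error
            for d in range(dim):
                grad_w[d] += error * x[d]
        weights = [w - lr * (g / n + l2 * w) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the probe's logit is positive, else 0."""
    return int(bias + sum(w * xi for w, xi in zip(weights, x)) > 0)


# Toy frozen embeddings: label 1 = helix residue, 0 = non-helix (made up).
train_x = [[2.0, 0.1], [1.5, -0.3], [1.8, 0.4], [-1.7, 0.2], [-2.1, -0.1], [-1.4, 0.5]]
train_y = [1, 1, 1, 0, 0, 0]
test_x, test_y = [[1.2, 0.0], [-1.0, 0.3]], [1, 0]

weights, bias = train_logistic_probe(train_x, train_y)
accuracy = sum(predict(weights, bias, x) == y for x, y in zip(test_x, test_y)) / len(test_y)
print(weights, bias, accuracy)
```

Because the probe has no hidden layers, high held-out accuracy can be attributed to the embeddings rather than to the capacity of the probe, which is the point of the protocol.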

Protocol 2: Embedding Dimensionality Reduction and Clustering

This protocol visualizes the organization of the embedding space to uncover functional or structural groupings.

- Dataset Construction: Assemble a diverse set of protein sequences from distinct families (e.g., from CATH or PFAM).

- Embedding Generation: Compute sequence-level embeddings (typically via mean pooling of residue embeddings or using a specialized token such as `<cls>`).

- Dimensionality Reduction: Apply UMAP or t-SNE to project high-dimensional embeddings to 2D/3D.

- Clustering Analysis: Perform unsupervised clustering (e.g., HDBSCAN) on the reduced embeddings. Validate cluster purity against known protein annotations.
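Cluster purity, the validation step in the last bullet, can be computed in a few lines. The sketch below assumes cluster assignments are already available (for example from HDBSCAN, which labels noise points as -1); the cluster IDs and family names are made up.

```python
from collections import Counter, defaultdict


def cluster_purity(cluster_ids, family_labels, noise_id=-1):
    """Fraction of clustered points whose family is the majority of their cluster.

    Points labeled `noise_id` are ignored.
    """
    members = defaultdict(list)
    for cluster, family in zip(cluster_ids, family_labels):
        if cluster != noise_id:
            members[cluster].append(family)
    total = sum(len(families) for families in members.values())
    majority = sum(Counter(f).most_common(1)[0][1] for f in members.values())
    return majority / total if total else 0.0


clusters = [0, 0, 0, 1, 1, 1, 1, -1]
families = ["kinase", "kinase", "globin", "globin", "globin", "globin", "kinase", "kinase"]
purity = cluster_purity(clusters, families)
print(round(purity, 3))
```

Purity rewards many small clusters, so it is usually worth reporting alongside the number of clusters and the fraction of points left as noise.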

Visualizing the Pathway from Sequence to Knowledge

Title: pLM Embedding Generation and Interpretation Workflow

Title: Embedding Space as a Map of Protein Knowledge

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Tools for Embedding Interpretation Research

| Tool / Reagent | Provider / Library | Primary Function in Interpretation |

|---|---|---|

| ESM2 / ProtBERT Models (Pre-trained) | Hugging Face, FAIR | Source models for generating protein sequence embeddings. |

| PyTorch / TensorFlow | Meta, Google | Deep learning frameworks for loading models, extracting embeddings, and training probe networks. |

| Biopython | Open Source | Parsing protein sequence files (FASTA), handling PDB structures, and interfacing with biological databases. |

| Scikit-learn | Open Source | Implementing linear probes (regression/classification), clustering algorithms, and evaluation metrics. |

| UMAP / t-SNE | Open Source | Dimensionality reduction for visualizing high-dimensional embedding spaces. |

| DSSP | CMBI | Annotating secondary structure and solvent accessibility from 3D structures for probe training labels. |

| Pfam / CATH Databases | EMBL-EBI, UCL | Providing curated protein family and domain annotations for validating embedding clusters. |

| AlphaFold2 DB / PDB | EMBL-EBI, RCSB | Source of high-confidence protein structures for correlating geometric features with embeddings. |

| GEMME / EVcouplings | Public Servers | Generating independent evolutionary coupling scores for comparison with embedding-based contacts. |

This guide provides a technical roadmap for accessing and utilizing two pivotal pre-trained protein language models, ESM2 and ProtBERT, within computational biology research. These models form the foundation for numerous downstream tasks, from structure prediction to function annotation, accelerating drug discovery pipelines.

Accessing Pre-Trained Models

The primary repositories for these models are the Hugging Face Hub and the developers' public GitHub repositories. The table below summarizes key access points and model specifications.

Table 1: Core Pre-trained Model Resources

| Model Family | Primary Repository | Key Variant & Size | Direct Access URL | Notable Features |

|---|---|---|---|---|

| ESM2 (Meta AI) | Hugging Face transformers / GitHub | `esm2_t48_15B_UR50D` (15B params) | https://huggingface.co/facebook/esm2_t48_15B_UR50D | State-of-the-art scale, 3D structure embedding, high MSA depth simulation. |

| ESM2 | GitHub (Meta) | `esm2_t36_3B_UR50D` (3B params) | https://github.com/facebookresearch/esm | Provides scripts for finetuning, contact prediction, variant effect scoring. |

| ProtBERT (Rostlab) | Hugging Face transformers | `prot_bert` (420M params) | https://huggingface.co/Rostlab/prot_bert | BERT architecture trained on UniRef100, excels in family-level classification. |

| ProtBERT-BFD | Hugging Face | `prot_bert_bfd` (420M params) | https://huggingface.co/Rostlab/prot_bert_bfd | Trained on BFD; general-purpose model for remote homology detection. |

Initial Code Repositories & Frameworks

Starter code is essential for effective implementation. The following table outlines essential repositories.

Table 2: Essential Code Repositories and Frameworks

| Repository Name | Maintainer | Primary Purpose | Key Scripts/Modules | Language |

|---|---|---|---|---|

| ESM Repository | Meta AI | Model loading, finetuning, structure prediction. | esm/inverse_folding, esm/pretrained.py, scripts/contact_prediction.py | Python, PyTorch |

| Transformers Library | Hugging Face | Unified API for model loading (ProtBERT, ESM). | pipeline(), AutoModelForMaskedLM, AutoTokenizer | Python |

| BioEmbeddings Pipeline | BioEmbeddings | Easy-to-use pipeline for generating protein embeddings. | bio_embeddings.embed (supports both ESM & ProtBERT) | Python |

| ProtTrans | RostLab | Consolidated repository for all protein language models. | Notebooks for embeddings, finetuning, visualization. | Python, Jupyter |

Experimental Protocols for Model Application

Protocol 1: Generating Per-Residue Embeddings with ESM2

Objective: Extract contextual embeddings for each amino acid in a protein sequence.

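- Environment Setup: Install PyTorch and `fair-esm` via pip: `pip install fair-esm`.

- Load Model & Alphabet: Use `esm.pretrained.esm2_t36_3B_UR50D()`, which downloads the weights and returns the model together with its alphabet. (`load_model_and_alphabet_local` expects the path to a checkpoint file already on disk.)

- Data Preparation: Format sequences as a list of `(label, sequence)` tuples (e.g., `[("protein1", "MKNKFKTQE...")]`) and pass them through the batch converter obtained from `alphabet.get_batch_converter()`.

- Inference: Pass the tokenized batch to the model with `repr_layers=[36]` to extract features from the final layer.

- Output: `results["representations"][36]` yields a tensor of shape `(batch_size, seq_len + 2, embed_dim)`, where the two extra positions hold the BOS and EOS tokens. Remove the BOS and EOS token embeddings for downstream analysis.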
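The steps of this protocol can be sketched with the `fair-esm` package. The example uses the smallest checkpoint (`esm2_t6_8M_UR50D`, final layer 6) so it runs on a laptop; the function names `strip_special_tokens` and `embed_per_residue` are hypothetical helpers, and the library imports are kept inside the function so the slicing helper can be used without PyTorch installed.

```python
def strip_special_tokens(token_reps, sequence_length):
    """Drop the leading BOS row and everything after the last real residue."""
    return token_reps[1 : sequence_length + 1]


def embed_per_residue(named_sequences, layer=6):
    """Return {name: per-residue embedding tensor} using a small ESM2 model.

    `named_sequences` is a list of (name, sequence) tuples.
    """
    import torch
    import esm

    # Smallest checkpoint for illustration; for the 3B model use
    # esm.pretrained.esm2_t36_3B_UR50D() together with layer=36.
    model, alphabet = esm.pretrained.esm2_t6_8M_UR50D()
    model.eval()
    batch_converter = alphabet.get_batch_converter()
    _, _, tokens = batch_converter(named_sequences)

    with torch.no_grad():
        out = model(tokens, repr_layers=[layer], return_contacts=False)
    reps = out["representations"][layer]  # (batch, longest_seq + 2, embed_dim)

    return {
        name: strip_special_tokens(reps[i], len(seq))
        for i, (name, seq) in enumerate(named_sequences)
    }


# The slicing logic works on any indexable sequence, shown here with a list.
demo_tokens = ["<cls>", "M", "K", "T", "<eos>", "<pad>"]
print(strip_special_tokens(demo_tokens, 3))
```

Calling `embed_per_residue([("demo", "MKTAYIAK")])` would download the weights on first use and return one row per residue. Slicing by each sequence's own length also removes padding when sequences in a batch differ in length.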

Protocol 2: Zero-Shot Variant Effect Prediction with ESM1v

Objective: Predict the functional impact of single amino acid variants.

- Model Loading: Load the ensemble of five ESM1v models from Hugging Face.

- Sequence Masking: For a wild-type sequence "AGHY" and a variant at position 3 (H), mask that position once: `AG<mask>Y`. A single forward pass then scores every possible substitution at that site.

- Logit Extraction: For the masked token, obtain the model's logits for all 20 amino acids at the masked position and convert them to probabilities with a softmax.

- Score Calculation: Compute the log-likelihood ratio `score = log(p_mutant / p_wildtype)`. Average scores across the five-model ensemble.

- Interpretation: Negative scores indicate deleterious effects; scores near zero or positive suggest neutral or stabilizing effects.
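The score calculation can be sketched independently of any model. The example below takes logits at the masked position from each ensemble member and averages the log-likelihood ratios; the logits are made-up numbers, and ordering them like `AMINO_ACIDS` is an assumption of this sketch rather than the ESM1v vocabulary order.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return [v - log_norm for v in logits]


def masked_marginal_score(ensemble_logits, wild_type_aa, mutant_aa):
    """Average log(p_mutant / p_wildtype) over the models in the ensemble.

    `ensemble_logits` holds one list of 20 logits per model, taken at the
    masked position and ordered like AMINO_ACIDS.
    """
    wt, mut = AMINO_ACIDS.index(wild_type_aa), AMINO_ACIDS.index(mutant_aa)
    scores = []
    for logits in ensemble_logits:
        log_probs = log_softmax(logits)
        scores.append(log_probs[mut] - log_probs[wt])
    return sum(scores) / len(scores)


# Made-up logits from two hypothetical models for the masked H in "AGHY".
model_a = [0.0] * 20
model_b = [0.0] * 20
model_a[AMINO_ACIDS.index("H")] = 3.0  # both models favor the wild-type H
model_b[AMINO_ACIDS.index("H")] = 2.0
model_b[AMINO_ACIDS.index("A")] = 1.0

score_h_to_a = masked_marginal_score([model_a, model_b], "H", "A")
print(score_h_to_a)
```

Because the softmax normalizer cancels in the ratio, each model's score equals the difference between the mutant and wild-type logits; averaging over models smooths out the idiosyncrasies of individual checkpoints.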

Protocol 3: Finetuning ProtBERT for Binary Classification

Objective: Adapt ProtBERT to classify protein sequences into two functional classes.

- Dataset: Prepare labeled FASTA files, split into train/validation/test sets (e.g., 70/15/15).

- Tokenization: Use `BertTokenizer.from_pretrained("Rostlab/prot_bert", do_lower_case=False)` with a maximum sequence length (e.g., 1024). ProtBERT expects residues separated by spaces (e.g., "M K T A"), so insert spaces before tokenizing.

- Model Architecture: Load `BertForSequenceClassification` from the Transformers library, specifying `num_labels=2`.

- Training: Use the `Trainer` API with the AdamW optimizer (lr=2e-5), batch size=8, for 5-10 epochs. Monitor validation accuracy.

- Evaluation: Compute standard metrics (Accuracy, F1-Score, ROC-AUC) on the held-out test set.
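A compact sketch of this protocol with the Hugging Face Transformers library follows. The helper names `prepare_for_protbert` and `build_trainer` and the output directory are hypothetical; library imports sit inside the function so the preprocessing helper can be tried without PyTorch or Transformers installed. Treat it as a starting point under these assumptions rather than a tuned training recipe.

```python
import re


def prepare_for_protbert(sequence):
    """Space-separate residues and map rare amino acids (U, Z, O, B) to X."""
    return " ".join(re.sub(r"[UZOB]", "X", sequence.upper()))


def build_trainer(train_seqs, train_labels, val_seqs, val_labels,
                  output_dir="protbert_cls"):
    """Assemble a Hugging Face Trainer for two-class ProtBERT fine-tuning."""
    import torch
    from transformers import (BertForSequenceClassification, BertTokenizer,
                              Trainer, TrainingArguments)

    tokenizer = BertTokenizer.from_pretrained("Rostlab/prot_bert", do_lower_case=False)
    model = BertForSequenceClassification.from_pretrained("Rostlab/prot_bert", num_labels=2)

    class SequenceDataset(torch.utils.data.Dataset):
        """Tokenizes once up front and serves tensors to the Trainer."""

        def __init__(self, sequences, labels):
            self.encodings = tokenizer(
                [prepare_for_protbert(s) for s in sequences],
                truncation=True, padding="max_length", max_length=1024,
            )
            self.labels = labels

        def __len__(self):
            return len(self.labels)

        def __getitem__(self, idx):
            item = {k: torch.tensor(v[idx]) for k, v in self.encodings.items()}
            item["labels"] = torch.tensor(self.labels[idx])
            return item

    args = TrainingArguments(
        output_dir=output_dir,          # hypothetical output folder
        learning_rate=2e-5,
        per_device_train_batch_size=8,
        num_train_epochs=5,
    )
    return Trainer(
        model=model, args=args,
        train_dataset=SequenceDataset(train_seqs, train_labels),
        eval_dataset=SequenceDataset(val_seqs, val_labels),
    )


prepared = prepare_for_protbert("MKTUAZ")
print(prepared)
```

Calling `build_trainer(...).train()` would start fine-tuning with the Trainer's default AdamW optimizer. Padding every sequence to 1024 tokens is simple but memory-hungry; dynamic padding is a common refinement.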

Visualizing Experimental Workflows

Workflow for Using Pre-trained Protein Language Models

ProtBERT Finetuning for Classification

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Toolkit

| Item/Resource | Function/Description | Typical Source/Provider |

|---|---|---|

| Pre-trained Model Weights | Frozen parameters providing foundational protein sequence representations. | Hugging Face Hub, Meta AI GitHub, RostLab. |

| Tokenizers (ESM & BERT) | Converts amino acid sequences into model-readable token IDs. Packaged with the model. | transformers library, fair-esm package. |

| High-Performance GPU | Accelerates model inference and training. Essential for large models (ESM2 15B) and batched processing. | NVIDIA (e.g., A100, V100, RTX 4090). |

| Embedding Extraction Pipeline | Standardized code to generate, store, and retrieve sequence embeddings for large datasets. | BioEmbeddings library, custom PyTorch scripts. |

| Variant Effect Dataset (e.g., ClinVar) | Curated set of pathogenic/benign variants for benchmarking variant effect prediction models. | NCBI ClinVar, ProteinGym benchmark. |

| Protein Structure Database (PDB) | Experimental 3D structures for validating contact maps or structure-based embeddings. | RCSB Protein Data Bank. |

| Sequence Database (UniRef) | Large, clustered protein sequence sets for training, evaluation, and retrieval tasks. | UniProt Consortium. |

| Finetuning Framework (e.g., Hugging Face Trainer) | High-level API abstracting training loops, mixed-precision training, and logging. | Hugging Face transformers library. |

From Theory to Bench: Practical Applications of ESM2 and ProtBERT in Biomedical Research

In the rapidly advancing field of computational biology, establishing a robust and reproducible computational environment is a foundational step. This guide details the essential libraries, dependencies, and configurations required to conduct research within the context of applying state-of-the-art protein language models like ESM2 and ProtBERT. These models have revolutionized tasks such as protein structure prediction, function annotation, and variant effect prediction, forming a core thesis in modern bioinformatics and drug discovery pipelines.

Core Python Environment & Package Management

A controlled environment prevents version conflicts and ensures reproducibility. Use Conda (Miniconda or Anaconda) or venv for environment isolation.

Primary Environment Setup:

Key Package Managers: pip (primary), conda (for complex binary dependencies).

Essential Scientific Computing & Data Manipulation Libraries

These libraries form the backbone for numerical operations and data handling.

Table 1: Core Numerical & Data Libraries

| Library | Version Range | Primary Function | Installation Command |

|---|---|---|---|

| NumPy | >=1.23.0 | N-dimensional array operations | pip install numpy |

| SciPy | >=1.9.0 | Advanced scientific computing | pip install scipy |

| pandas | >=1.5.0 | Data manipulation and analysis | pip install pandas |

| Biopython | >=1.80 | Biological data computation | pip install biopython |

Deep Learning Frameworks & Model Libraries

ESM2 and ProtBERT are built on PyTorch. TensorFlow may be required for supplementary tools.

Table 2: Deep Learning & Model Libraries

| Library | Version Range | Purpose in Computational Biology | ESM2/ProtBERT Support |

|---|---|---|---|

| PyTorch | >=1.12.0, <2.2.0 | Core ML framework; required for ESM2/ProtBERT | Required |

| Transformers (Hugging Face) | >=4.25.0 | Access and fine-tune ProtBERT & ESM2 | Required |

| fairseq (Facebook) | >=0.12.0 | Original framework for ESM2 models | Optional (for ESM2) |

| TensorFlow | >=2.10.0 | For tools using DeepMind's AlphaFold | Supplementary |

Installation Note: Install PyTorch from the official site, choosing the build that matches your CUDA version for GPU support.
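Once the packages are installed, a quick check against the minimum versions listed in the tables above can catch mismatches early. The sketch below uses only the standard library; the `MINIMUM_VERSIONS` mapping copies the lower bounds from this guide, the helper names are hypothetical, and the version parser is deliberately simple (it ignores pre-release tags and upper bounds).

```python
import re
from importlib import metadata

# Lower bounds copied from the tables in this guide.
MINIMUM_VERSIONS = {
    "numpy": "1.23.0",
    "scipy": "1.9.0",
    "pandas": "1.5.0",
    "biopython": "1.80",
    "torch": "1.12.0",
    "transformers": "4.25.0",
}


def version_tuple(version):
    """Turn '2.1.0+cu118' into (2, 1, 0); pads short versions with zeros."""
    parts = []
    for chunk in version.split("+")[0].split("."):
        match = re.match(r"\d+", chunk)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple((parts + [0, 0, 0])[:3])


def check_environment(requirements=MINIMUM_VERSIONS):
    """Return {package: 'ok' | 'missing' | 'too old (found X)'}."""
    report = {}
    for package, minimum in requirements.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            report[package] = "missing"
            continue
        ok = version_tuple(installed) >= version_tuple(minimum)
        report[package] = "ok" if ok else f"too old (found {installed})"
    return report


for package, status in check_environment().items():
    print(f"{package:<14}{status}")
```

Running this inside the activated environment gives a one-line status per package, which is convenient to paste into an experiment log for reproducibility.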

Specialized Computational Biology Dependencies

Table 3: Domain-Specific Libraries

| Library | Function | Critical Use-Case |

|---|---|---|

| DSSP | Secondary structure assignment | Feature extraction from PDB files |

| PyMOL, MDTraj | Molecular visualization & analysis | Analyzing model protein structure outputs |

| RDKit | Cheminformatics | Integrating small molecule data for drug discovery |

| HMMER | Sequence homology search | Benchmarking against traditional methods |

Installation: Some of these tools require system-level dependencies (e.g., dssp). Use conda where possible, for example through the bioconda channel.

Experiment Tracking & Visualization Tools

Reproducibility is key. Track experiments and visualize results.

Table 4: Tracking & Visualization

| Tool | Type | Function |

|---|---|---|

| Weights & Biases (wandb) | Cloud-based logging | Track training metrics, hyperparameters, and outputs. |

| Matplotlib, Seaborn | Plotting libraries | Create publication-quality figures. |

| Plotly | Interactive plotting | Build explorable dashboards for results. |

Experimental Protocol: Embedding Extraction with ESM2

A fundamental experiment is extracting protein sequence embeddings for downstream tasks (e.g., classification, clustering).

Protocol:

- Environment: Activate your configured comp_bio environment.

- Input Data: Prepare a FASTA file (sequences.fasta) with target protein sequences.

- Script: Save the embedding extraction code as extract_esm2_embeddings.py.

- Execution: python extract_esm2_embeddings.py

- Validation: Check the output shape: embeddings.shape should be (num_sequences, embedding_dimension).
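The protocol above can be sketched as follows. This is one possible version of extract_esm2_embeddings.py, not the original script: it assumes the Hugging Face transformers interface (AutoTokenizer and EsmModel), mean pooling over residues as the per-sequence embedding, and an output file named embeddings.npy. The helper names read_fasta and embed_sequences are illustrative.

```python
from pathlib import Path

MODEL_ID = "facebook/esm2_t33_650M_UR50D"  # assumption: any facebook/esm2_t* checkpoint can be substituted


def read_fasta(path):
    """Return a list of (header, sequence) pairs from a FASTA file."""
    records, header, chunks = [], None, []
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                records.append((header, "".join(chunks)))
            header, chunks = line[1:], []
        else:
            chunks.append(line)
    if header is not None:
        records.append((header, "".join(chunks)))
    return records


def embed_sequences(sequences, model_id=MODEL_ID, batch_size=4):
    """Mean-pool final-layer hidden states over residues only (no padding, <cls>, or <eos>)."""
    import numpy as np
    import torch
    from transformers import AutoTokenizer, EsmModel

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = EsmModel.from_pretrained(model_id).eval()
    pooled = []
    with torch.no_grad():
        for start in range(0, len(sequences), batch_size):
            batch = sequences[start:start + batch_size]
            inputs = tokenizer(batch, return_tensors="pt", padding=True)
            hidden = model(**inputs).last_hidden_state
            # Build a residue mask: drop <cls> (first token) and <eos> (last real token).
            mask = inputs["attention_mask"].clone()
            last = inputs["attention_mask"].sum(dim=1) - 1
            mask[:, 0] = 0
            mask[torch.arange(mask.size(0)), last] = 0
            mask = mask.unsqueeze(-1).to(hidden.dtype)
            pooled.append(((hidden * mask).sum(dim=1) / mask.sum(dim=1)).numpy())
    return np.concatenate(pooled, axis=0)


if __name__ == "__main__" and Path("sequences.fasta").exists():
    import numpy as np

    records = read_fasta("sequences.fasta")
    embeddings = embed_sequences([seq for _, seq in records])
    print(embeddings.shape)  # (num_sequences, embedding_dimension)
    np.save("embeddings.npy", embeddings)
```

The model is loaded on the CPU here for simplicity; moving the model and inputs to a GPU is the usual next step for larger checkpoints or datasets.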

Workflow & Pathway Visualizations

Diagram 1: ESM2/ProtBERT Embedding Application Workflow

Diagram 2: Core Computational Environment Dependency Stack

The Scientist's Toolkit: Research Reagent Solutions

Table 5: Essential Research "Reagents" for In Silico Experiments

| Item | Function in Computational Experiments | Typical "Source" / Installation |

|---|---|---|

| Pre-trained Model Weights | Provide the foundational knowledge of protein language/structure. Downloaded at runtime. | Hugging Face Hub (facebook/esm2_t*, Rostlab/prot_bert). |

| Reference Datasets | For training, fine-tuning, and benchmarking (e.g., Protein Data Bank, UniProt). | PDB, UniProt, Pfam. Use biopython or APIs to download. |

| Sequence Alignment Tool | Baseline method for comparative analysis (e.g., against BLAST, HMMER). | conda install -c bioconda blast hmmer. |

| Structure Visualization | Validate predicted structures or analyze binding sites. | PyMOL (licensed), UCSF ChimeraX (free). |

| HPC/Cloud GPU Quota | "Reagent" for computation; essential for training and large-scale inference. | Institutional clusters, AWS EC2 (p3/p4 instances), Google Cloud TPUs. |

Within the broader thesis on the Applications of ESM2 and ProtBERT in computational biology research, this guide details the core methodology of generating protein embeddings—dense, numerical vector representations of protein sequences. These embeddings encode structural, functional, and evolutionary information, enabling downstream machine learning tasks such as function prediction, structure prediction, and drug target identification. This document serves as a technical manual for researchers and drug development professionals.

Foundational Models: ESM2 and ProtBERT

Two primary classes of transformer-based models are dominant in protein sequence representation learning.

ProtBERT (Protein Bidirectional Encoder Representations from Transformers) is adapted from NLP's BERT. It is trained on millions of protein sequences from UniRef100 using masked language modeling (MLM), where random amino acids in a sequence are masked and the model learns to predict them from the surrounding context. ESM2 (Evolutionary Scale Modeling) is also an encoder-only transformer trained with an MLM objective, not an autoregressive model; it is trained on sequences drawn from UniRef50 clusters. The two models differ mainly in training data, scale (ESM2 spans 8M to 15B parameters), and architectural details such as ESM2's rotary positional embeddings, which together help ESM2 capture evolutionary and structural patterns.
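To make the masked language modeling objective concrete, the sketch below hides a random subset of residues and records the targets a model would be asked to recover. It is a simplified illustration: the 15% masking rate follows the original BERT recipe and is an assumption here, and the BERT refinement of sometimes substituting a random token or leaving the token unchanged is omitted. The function name mask_sequence is illustrative.

```python
import random

MASK_TOKEN = "<mask>"  # ESM-style mask symbol; BERT-style vocabularies write it as [MASK]


def mask_sequence(sequence, mask_rate=0.15, seed=0):
    """Hide a random subset of residues and return (masked_tokens, targets)."""
    rng = random.Random(seed)
    n_masked = max(1, round(mask_rate * len(sequence)))
    positions = sorted(rng.sample(range(len(sequence)), n_masked))
    tokens = list(sequence)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]  # the residue the model must predict
        tokens[pos] = MASK_TOKEN
    return tokens, targets


tokens, targets = mask_sequence("MKTVRQERLKSIVRILERSKEPVSGAQ")
print(" ".join(tokens))
print(targets)
```

During training, the loss is computed only at the masked positions, so the model is pushed to infer each hidden residue from both its left and right context.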

Table 1: Core Comparison of ESM2 and ProtBERT

| Feature | ProtBERT | ESM2 (8M to 15B params) |

|---|---|---|

| Architecture | BERT-like Transformer (Encoder-only) | Transformer (Encoder-only) |

| Training Objective | Masked Language Modeling (MLM) | Masked Language Modeling (MLM) |

| Primary Training Data | UniRef100 (~216M sequences) | UniRef50 / MetaGenomic data |

| Output Embedding | Contextual per-residue & pooled [CLS] | Contextual per-residue & mean pooled |

| Key Strength | Excellent for fine-tuning on specific tasks | State-of-the-art for structure/function prediction |

Experimental Protocol: Generating Embeddings

This protocol outlines the steps to generate protein embeddings using pre-trained models.

Materials and Software (The Scientist's Toolkit)

Table 2: Research Reagent Solutions for Embedding Generation

| Item | Function | Example Source/Library |

|---|---|---|

| Pre-trained Model Weights | Provide the learned parameters for the model. | Hugging Face Transformers, FAIR esm |

| Tokenization Script | Converts amino acid sequence into model-specific tokens (e.g., adding [CLS], [SEP]). | Included in model libraries. |

| Inference Framework | Environment to load model and perform forward pass. | PyTorch, TensorFlow |

| Sequence Database | Source of raw protein sequences for embedding. | UniProt, user-provided FASTA |

| Hardware with GPU | Accelerates tensor computations for large models/sequences. | NVIDIA GPUs (e.g., A100, V100) |

Detailed Methodology

Step 1: Environment Setup. Install necessary packages (e.g., transformers, fair-esm, torch).

Step 2: Sequence Preparation. Input a protein sequence as a string (e.g., "MKTV..."). Ensure it contains only standard amino acid letters.

Step 3: Tokenization & Batch Preparation. Use the model's tokenizer to convert the sequence into token IDs, adding necessary special tokens. Batch sequences of similar length for efficiency.

Step 4: Model Inference. Load the pre-trained model (e.g., esm2_t33_650M_UR50D or Rostlab/prot_bert). Run a forward pass with the tokenized batch, ensuring no gradient calculation.

Step 5: Embedding Extraction. Extract the last hidden layer outputs. For a per-residue embedding, use the tensor representing each amino acid (excluding special tokens). For a whole-protein embedding, compute the mean over all residue embeddings or use the dedicated [CLS] token embedding.

Step 6: Storage & Downstream Application. Save embeddings as NumPy arrays or vectors in a database for use in classification, clustering, or regression models.
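Steps 2 and 3 can be sketched with a few small helpers. The sketch assumes the convention documented for Rostlab/prot_bert, where residues are separated by spaces and the rare residues U, Z, O, and B are mapped to X before tokenization; the helper names are illustrative.

```python
import re

STANDARD_AA = set("ACDEFGHIKLMNPQRSTVWY")


def is_standard(sequence):
    """True if the sequence is non-empty and uses only the 20 standard amino acid letters."""
    return bool(sequence) and set(sequence.upper()) <= STANDARD_AA


def to_protbert_input(sequence):
    """Map rare residues (U, Z, O, B) to X and insert spaces between residues."""
    cleaned = re.sub(r"[UZOB]", "X", sequence.upper())
    return " ".join(cleaned)


def length_sorted_batches(sequences, batch_size):
    """Yield lists of original indices, grouping sequences of similar length to limit padding."""
    order = sorted(range(len(sequences)), key=lambda i: len(sequences[i]))
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]


print(is_standard("MKTV"))
print(to_protbert_input("MKTU"))
print(list(length_sorted_batches(["AAAA", "A", "AAA", "AA"], batch_size=2)))
```

Because the batches carry original indices, embeddings computed per batch can be written back in input order before storage in Step 6.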

Key Applications & Supporting Data

Embeddings serve as input features for diverse predictive tasks.

Table 3: Performance of Embedding-Based Predictions on Benchmark Tasks

| Downstream Task | Model Used | Benchmark Metric (Result) | Key Dataset |

|---|---|---|---|

| Protein Function Prediction | ESM2 (650M) | Gene Ontology (GO) F1 Score: 0.45 | GOA Database |

| Secondary Structure Prediction | ProtBERT | Q3 Accuracy: ~84% | CB513, DSSP |

| Solubility Prediction | ESM1b Embeddings | Accuracy: ~85% | eSol |

| Protein-Protein Interaction | ESM2 + MLP | AUROC: 0.92 | STRING Database |

| Subcellular Localization | Pooled ESM2 | Multi-label Accuracy: ~78% | DeepLoc 2.0 |

Visualizing Workflows and Relationships

Protein Embedding Generation and Application Workflow

Thesis Context: From Embeddings to Drug Development

This whitepaper details a critical application within a broader thesis exploring the transformative role of deep learning language models, specifically ESM2 and ProtBERT, in computational biology. The accurate prediction of missense variant pathogenicity is a fundamental challenge in genomics and precision medicine. While ProtBERT excels in general protein sequence understanding, the Evolutionary Scale Modeling (ESM) family, particularly ESM1v and ESM2, has demonstrated state-of-the-art performance in zero-shot mutation effect prediction by learning the evolutionary constraints embedded in many millions of protein sequences. This guide provides a technical deep dive into leveraging these models for variant effect scoring.

Model Architectures and Core Principles

ESM1v (Evolutionary Scale Modeling-1 Variant) is a set of five models, each a 650M parameter transformer trained on the UniRef90 dataset (98 million unique sequences). It uses a masked language modeling (MLM) objective, learning to predict randomly masked amino acids in a sequence based on their evolutionary context.

ESM2 represents a significant architectural advancement, featuring a standard transformer architecture with rotary positional embeddings, trained on a vastly expanded dataset (UniRef50, 138 million sequences). Available in sizes from 8M to 15B parameters, its larger context window (up to 1024 residues) captures longer-range interactions critical for protein structure and function.

Both models operate on the principle that the log-likelihood of an amino acid at a position, given its evolutionary context, reflects its functional fitness. A pathogenic mutation typically has a low predicted probability.

Quantitative Performance Comparison

Table 1: Benchmark Performance of ESM1v, ESM2, and Comparative Tools

| Model / Tool | Principle | AUC (ClinVar BRCA1) | Spearman's ρ (DeepMutant) | Runtime (per 1000 variants) | Key Strength |

|---|---|---|---|---|---|

| ESM1v (ensemble) | Masked LM, ensemble of 5 models | 0.92 | 0.73 | ~45 min (CPU) | Robust zero-shot prediction |

| ESM2-650M | Masked LM, single model | 0.90 | 0.71 | ~30 min (CPU) | Long-range context, state-of-the-art embeddings |

| ESM2-3B | Masked LM, larger model | 0.91 | 0.72 | ~120 min (GPU) | Higher accuracy for complex variants |

| ProtBERT | Masked LM (BERT-style) | 0.85 | 0.65 | ~35 min (CPU) | General language understanding |

| EVmutation | Evolutionary coupling | 0.88 | 0.70 | Hours (MSA dependent) | Explicit co-evolution signals |

Table 2: Pathogenicity Prediction Concordance on Different Datasets

| Variant Set (Size) | ESM1v & ESM2 Agreement | ESM1v Disagrees (ESM2 Correct) | ESM2 Disagrees (ESM1v Correct) | Both Incorrect vs. Ground Truth |

|---|---|---|---|---|

| ClinVar Pathogenic/Likely Pathogenic (15k) | 89% | 6% | 4% | 1% |

| gnomAD "benign" (20k) | 93% | 3% | 3% | 1% |

| ProteinGym DMS (12 assays) | 85% (avg. correlation) | - | - | - |

Detailed Experimental Protocols

Protocol 1: Zero-Shot Variant Effect Scoring with ESM1v/ESM2

Objective: Compute a log-likelihood score for a missense variant without task-specific training.

Materials & Input:

- Wild-type Protein Sequence: FASTA format.

- Variant List: Single amino acid substitutions (e.g., P53 R175H).

- Model: Pre-trained ESM1v or ESM2 weights (available via Hugging Face transformers or FAIR's esm Python package).

- Hardware: GPU (recommended for ESM2-3B/15B) or modern CPU.

Procedure:

- Sequence Tokenization: Tokenize the wild-type sequence using the model's specific tokenizer.

- Masked Logit Extraction:

a. For each variant at position i, create a copy of the tokenized sequence.

b. Mask the token at position i.

c. Pass the masked sequence through the model.

d. Extract the logits for the masked position from the model's output.

- Log Probability Calculation:

a. Apply softmax to the logits at position i to get a probability distribution over all 20 amino acids.

b. Record the log probability (log p) for the wild-type amino acid (wt_logp) and the mutant amino acid (mut_logp).

- Scoring: Compute the log-likelihood ratio (LLR) as LLR = mut_logp - wt_logp. A more negative LLR indicates a higher predicted deleterious effect.

- (ESM1v-specific) Ensemble Averaging: If using the five ESM1v models, repeat steps 2-4 for each model and average the LLR scores.

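Interpretation: LLR thresholds should be calibrated on labeled variants for the protein or dataset at hand. As a rough starting point, LLR < -2 suggests a deleterious/pathogenic effect, while LLR > -1 suggests benign; scores between the two are ambiguous.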
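The scoring arithmetic in Protocol 1 can be sketched without any model dependency. The sketch assumes the logits at the masked position have already been extracted and are supplied as a dictionary keyed by amino acid letter; the function names and the toy logits are illustrative only.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def parse_variant(variant):
    """'R175H' -> ('R', 175, 'H'); the position is 1-based, as in variant notation."""
    return variant[0], int(variant[1:-1]), variant[-1]


def log_softmax(logits):
    """Numerically stable log-softmax over a dict {amino_acid: logit}."""
    peak = max(logits.values())
    log_norm = peak + math.log(sum(math.exp(v - peak) for v in logits.values()))
    return {aa: v - log_norm for aa, v in logits.items()}


def llr(logits, wt, mut):
    """LLR = mut_logp - wt_logp, with softmax restricted to the 20 amino acids."""
    log_probs = log_softmax({aa: logits[aa] for aa in AMINO_ACIDS})
    return log_probs[mut] - log_probs[wt]


def ensemble_llr(per_model_logits, wt, mut):
    """Average the LLR over several models (e.g., the five ESM1v checkpoints)."""
    scores = [llr(logits, wt, mut) for logits in per_model_logits]
    return sum(scores) / len(scores)


# Toy logits for one masked position: the model strongly prefers R and disfavors H.
toy_logits = {aa: 0.0 for aa in AMINO_ACIDS}
toy_logits["R"], toy_logits["H"] = 3.0, -1.0
wt, position, mut = parse_variant("R175H")
print(position, llr(toy_logits, wt, mut))
```

Because the softmax normalizer is shared by both terms, the LLR equals the difference of the two raw logits; computing full log probabilities is still useful when the individual values are reported.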

Protocol 2: Embedding-Based Prediction with Downstream Training

Objective: Use ESM2 embeddings as features to train a supervised classifier (e.g., for ClinVar labels).

Procedure:

- Embedding Extraction:

a. Pass the wild-type sequence (or a window around the variant) through ESM2 without masking.

b. Extract the per-residue embeddings from the final layer (or a concatenation of layers).

c. For the variant position i, extract the embedding vector e_i.

- Feature Engineering: Optionally concatenate e_i with the LLR score from Protocol 1, and/or conservation scores from external tools.

- Classifier Training: Use embeddings as input features to train a standard classifier (Random Forest, XGBoost, or shallow neural network) on labeled variant datasets (e.g., ClinVar).

- Validation: Perform rigorous cross-validation on held-out genes or variants to assess generalizability.
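The feature engineering and held-out-gene validation steps can be sketched as below. The sketch is dependency-free and only illustrates the splitting logic; the helper names are illustrative, and in practice a library routine such as scikit-learn's GroupKFold can play the same role.

```python
from collections import defaultdict


def build_features(residue_embedding, llr_score=None):
    """Concatenate the embedding e_i with an optional LLR score into one feature vector."""
    features = list(residue_embedding)
    if llr_score is not None:
        features.append(llr_score)
    return features


def gene_grouped_folds(genes, n_folds=5):
    """Yield (train_idx, test_idx) pairs so that no gene appears on both sides of a split."""
    by_gene = defaultdict(list)
    for idx, gene in enumerate(genes):
        by_gene[gene].append(idx)
    # Assign the largest genes first so fold sizes stay roughly balanced.
    folds = [[] for _ in range(n_folds)]
    for gene in sorted(by_gene, key=lambda g: (-len(by_gene[g]), g)):
        smallest = min(range(n_folds), key=lambda f: len(folds[f]))
        folds[smallest].extend(by_gene[gene])
    for f in range(n_folds):
        test = sorted(folds[f])
        train = sorted(i for g in range(n_folds) if g != f for i in folds[g])
        yield train, test


genes = ["BRCA1", "BRCA1", "TP53", "TP53", "TP53", "PTEN"]
for train_idx, test_idx in gene_grouped_folds(genes, n_folds=3):
    print("train:", train_idx, "test:", test_idx)
```

Splitting by gene rather than by variant avoids the optimistic bias that arises when variants from the same protein land in both the training and test sets.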

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for ESM-Based Variant Prediction

| Item | Function & Description | Example/Format |

|---|---|---|

| Pre-trained Model Weights | Core inference engine. Downloaded from official repositories. | Hugging Face Model IDs: facebook/esm1v_t33_650M_UR90S_1 to 5, facebook/esm2_t33_650M_UR50D |

| Variant Calling Format (VCF) File | Standard input containing genomic variant coordinates and alleles. | VCF v4.2, requires annotation to protein consequence (e.g., with Ensembl VEP). |

| Protein Sequence Database | Source of canonical and isoform sequences for mapping variants. | UniProt Knowledgebase (Swiss-Prot/TrEMBL) in FASTA format. |

| Benchmark Datasets | For validation and model comparison. | ClinVar, ProteinGym Deep Mutational Scanning (DMS) benchmarks, gnomAD. |

| ESM Python Package (esm) | Official library for loading models, tokenizing sequences, and running inference. | PyPI installable package fair-esm. |

| Hugging Face transformers Library | Alternative interface for loading and using ESM models. | Integrated with the broader PyTorch ecosystem. |

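| Hardware with CUDA Support | Accelerates inference for larger models (ESM2-3B/15B). | NVIDIA GPU. ESM2-15B weights alone take roughly 30 GB in half precision (15B parameters at 2 bytes each), so 16 GB of VRAM is not sufficient without offloading or sharding. |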

Visualizations

ESM Zero-Shot Variant Scoring Workflow

Thesis Context: Variant Prediction as a Core ESM2/ProtBERT Application

This whitepaper presents an in-depth technical guide on the application of deep learning language models, specifically ESM2 and ProtBERT, for guiding rational mutations in proteins to enhance stability and function. It is framed within the broader thesis that transformer-based protein language models (pLMs) are revolutionizing computational biology by providing high-throughput, in silico methods to predict the effects of mutations, thereby accelerating the design-build-test-learn cycle in protein engineering.

Foundational Models: ESM2 and ProtBERT

Protein language models are trained on millions of evolutionary-related protein sequences to learn the underlying "grammar" and "semantics" of protein structure and function.

ESM2 (Evolutionary Scale Modeling-2): A family of transformer-based models developed by Meta AI, ranging from 8M to 15B parameters; the 650M-parameter variant is a common practical choice. The models were trained on ~65 million protein sequences from UniRef. ESM2 generates contextual embeddings for each amino acid residue, capturing evolutionary constraints and structural contacts. Its primary strength lies in its state-of-the-art performance on structure prediction tasks, which is directly informative for stability engineering.

ProtBERT: A BERT-based model developed specifically for proteins by the Rostlab group at the Technical University of Munich as part of the ProtTrans project. It uses a masked language modeling objective, learning to predict randomly masked amino acids in a sequence based on their context. This fine-grained understanding of local sequence-structure relationships is particularly useful for predicting functional sites and subtle functional changes.

A comparative summary of key features is provided in Table 1.

Table 1: Comparative Summary of ESM2 and ProtBERT

| Feature | ESM2 | ProtBERT |

|---|---|---|

| Model Architecture | Transformer (Encoder-only) | Transformer (Encoder, BERT) |

| Primary Training Objective | Masked Language Modeling (MLM) | Masked Language Modeling (MLM) |

| Key Strength | State-of-the-art structure prediction; global context | Fine-grained residue-residue relationships; local context |

| Typical Embedding Use | Per-residue embeddings for contact/structure prediction | Per-residue or per-sequence embeddings for property prediction |

| Common Application in Design | Stability via structure maintenance, folding energy | Functional site prediction, identifying key residues |

Core Methodologies and Experimental Protocols

Predicting Mutation Effects with pLMs

Protocol: In silico Saturation Mutagenesis and Effect Scoring

- Input Sequence Preparation: Obtain the wild-type amino acid sequence (FASTA format).

- Model Inference:

- For each position i in the sequence, mask or replace it with each of the other 19 possible amino acids.

- Pass both the wild-type and mutant sequences through the pLM (ESM2 or ProtBERT).

- Extract the log-likelihood scores (for ProtBERT) or the pseudo-log-likelihood ratio (for ESM2) for the target residue.

- Effect Calculation:

- For ProtBERT: The effect is often calculated as the difference in the model's confidence (log probability) for the mutant residue versus the wild-type residue at the masked position. A large negative score suggests the mutation is evolutionarily disfavored, potentially destabilizing.

- For ESM2: Use the esm-variant-prediction suite. The model computes a pseudo-log-likelihood for the entire sequence. The effect is often reported as the log-odds ratio:

log( p(mutant) / p(wild-type) ). Alternatively, use the ESM-1v model architecture specifically designed for zero-shot variant effect prediction.

- Stability Prediction: Correlate the computed log-odds ratios with experimental measures like ΔΔG (change in folding free energy). Negative log-odds typically correlate with destabilizing mutations.

- Function Prediction: For functional sites, use the embeddings as input to a downstream classifier trained on known active/inactive variants, or analyze the top-k predicted residues at a masked functional position.
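The enumeration step of in silico saturation mutagenesis is straightforward to sketch. The code below generates every single-residue substitution of a wild-type sequence and ranks variants once scores are available; scoring itself is left to whichever pLM is used. The function names and the toy scores are illustrative.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def saturation_mutants(wild_type, skip_positions=()):
    """Yield (label, mutant_sequence) for every single substitution; positions are 1-based."""
    skip = set(skip_positions)
    for index, wt_residue in enumerate(wild_type):
        position = index + 1
        if position in skip:
            continue
        for residue in AMINO_ACIDS:
            if residue != wt_residue:
                mutant = wild_type[:index] + residue + wild_type[index + 1:]
                yield f"{wt_residue}{position}{residue}", mutant


def rank_by_score(scores):
    """Sort {label: score} from most to least favorable (higher log-odds first)."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


mutants = dict(saturation_mutants("MKT"))
print(len(mutants), mutants["K2A"])

# Made-up scores, only to show the ranking step.
print(rank_by_score({"K2A": -0.4, "K2R": 0.3, "T3S": -1.9}))
```

For a protein of length L this produces 19 x L variants, so batching the model calls matters for long sequences.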

Table 2: Quantitative Performance Benchmarks on Common Datasets

| Model | Dataset (Task) | Key Metric | Reported Performance | Implication for Design |

|---|---|---|---|---|

| ESM-1v | DeepMut (Stability) | Spearman's ρ | 0.40 - 0.48 | Strong zero-shot stability prediction without task-specific training. |

| ESM2 (650M) | ProteinGym (Variant Effect) | Spearman's ρ (Ave.) | ~0.40 - 0.55 | Generalizable variant effect prediction across diverse assays. |

| ProtBERT | TAPE (Fluorescence) | Spearman's ρ | 0.68 | Excellent at predicting functional changes in specific protein families when fine-tuned. |

| ESM-IF1 (Inverse Folding) | de novo Design | Recovery Rate | ~40% | Can generate sequences that fold into a given backbone, useful for stability-constrained design. |
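Several rows in the table above report Spearman's ρ, the rank correlation between model scores and experimental measurements. A dependency-free sketch of that calculation follows, with ties receiving average ranks; the function names are illustrative and the example numbers are made up purely to show the call.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho is the Pearson correlation of the rank-transformed values."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up pLM scores and assay readouts for five variants.
model_scores = [-3.1, -0.2, -1.5, 0.4, -2.2]
assay_values = [0.10, 0.85, 0.40, 0.80, 0.35]
print(round(spearman(model_scores, assay_values), 3))
```

Because only ranks matter, the metric is insensitive to the scale of the model score, which is why it is the usual choice for comparing zero-shot scores against deep mutational scanning data.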

Workflow for Guiding Mutations

The following diagram illustrates the integrated computational workflow for guiding mutations using pLMs.

Figure 1: pLM-Guided Mutation Design and Validation Workflow.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational and Experimental Reagents for pLM-Guided Design

| Item / Reagent | Function / Purpose | Example / Note |

|---|---|---|

| ESM2 Model Weights | Provides the foundational model for structure-aware sequence embeddings and variant effect prediction. | Available via Hugging Face transformers or Meta's GitHub repository. |

| ProtBERT Model Weights | Provides the foundational model for evolution-aware sequence embeddings and masked residue prediction. | Available via Hugging Face transformers (e.g., Rostlab/prot_bert). |

| ESM-Variant Prediction Toolkit | Python library specifically for running ESM-1v and related models on variant datasets. | Simplifies the process of scoring mutants. |

| ProteinGym Benchmark Suite | Curated dataset of deep mutational scans for evaluating variant effect prediction models. | Used for benchmarking custom pipelines. |

| Rosetta Suite | Physics-based modeling suite for detailed energy calculations (ΔΔG) and structure refinement. | Used to validate or supplement pLM predictions. |

| Site-Directed Mutagenesis Kit | Experimental generation of in silico designed mutants. | NEB Q5 Site-Directed Mutagenesis Kit or similar. |

| Differential Scanning Fluorimetry (DSF) | High-throughput experimental measurement of protein thermal stability (Tm). | Uses dyes like SYPRO Orange to measure unfolding. |

| Surface Plasmon Resonance (SPR) / Bio-Layer Interferometry (BLI) | Measures binding kinetics (KD, kon, koff) of wild-type vs. mutant proteins for functional assessment. | Critical for validating functional enhancements. |

Detailed Experimental Protocol: A Case Study

Protocol: Enhancing Thermostability of an Enzyme using ESM2-Guided Design

A. Computational Phase:

- Sequence Submission & Saturation Mutagenesis in silico:

- Input the enzyme's wild-type sequence into a custom Python script using the esm Python package.

- For each residue position (excluding absolutely conserved catalytic residues identified via multiple sequence alignment), generate 19 mutant sequences.

- Variant Effect Scoring:

- Use the esm.pretrained.esm1v_t33_650M_UR90S_1() model.

- For each mutant, compute the pseudo-log-likelihood of the sequence. Calculate the log-odds ratio: LLR = log( p(mutant) / p(wild-type) ).

- Rank all single mutants by their LLR score. Higher scores suggest the mutation is evolutionarily plausible and potentially stabilizing.

- Structural Filtering:

- Map top-ranking mutations (e.g., LLR > 0) onto a high-resolution structure (experimental or AlphaFold2 prediction).

- Filter out mutations that introduce steric clashes or disrupt critical hydrogen bonds/salt bridges using PyMOL or Rosetta.

- Combinatorial Design:

- Select 5-10 top-ranking, structurally benign single mutations.

- Use a greedy search or combinatorial algorithm with ESM2 scoring to evaluate promising double and triple mutants.
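The combinatorial design step can be sketched as a greedy search. In this sketch score_fn is a stand-in for whatever scoring is used in practice (for example, a pLM score of the combined mutant sequence); the toy scoring function, including its penalty for one incompatible pair, is invented purely for illustration.

```python
def greedy_combination(candidates, score_fn, max_mutations=3):
    """Add one mutation at a time, keeping the addition that most improves score_fn.

    candidates: labels such as 'A12V'; score_fn maps a tuple of labels to a float
    where higher is better. At most one mutation per position is allowed.
    """
    chosen = ()
    best = score_fn(chosen)
    while len(chosen) < max_mutations:
        used_positions = {label[1:-1] for label in chosen}
        options = [c for c in candidates
                   if c not in chosen and c[1:-1] not in used_positions]
        if not options:
            break
        top = max(options, key=lambda c: score_fn(chosen + (c,)))
        top_score = score_fn(chosen + (top,))
        if top_score <= best:
            break  # no remaining mutation improves the current combination
        chosen, best = chosen + (top,), top_score
    return chosen, best


# Toy scoring: additive single-mutant scores with a penalty for one clashing pair.
SINGLE_SCORES = {"A12V": 1.2, "K45R": 0.8, "A12L": 1.0, "G77A": 0.3}


def toy_score(mutations):
    total = sum(SINGLE_SCORES[m] for m in mutations)
    if "A12V" in mutations and "K45R" in mutations:
        total -= 1.5
    return total


print(greedy_combination(list(SINGLE_SCORES), toy_score))
```

Greedy search evaluates far fewer combinations than exhaustive enumeration, at the cost of possibly missing combinations whose benefit only appears when two mutations are added together.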

B. Experimental Validation Phase:

- Gene Construction:

- Design oligonucleotide primers for the top 15-20 computationally designed variants (including single and combinatorial mutants).

- Perform site-directed mutagenesis on the gene cloned into an expression vector (e.g., pET vector).

- Sequence-confirm all constructs.

- Expression and Purification:

- Express variants in E. coli BL21(DE3) cells via auto-induction at 30°C for 18 hours.

- Purify proteins via immobilized metal affinity chromatography (IMAC) using a His-tag, followed by size-exclusion chromatography (SEC).

- Confirm purity and monodispersity by SDS-PAGE and SEC elution profile.

- Stability Assay (DSF):

- Dilute purified proteins to 0.2 mg/mL in a suitable buffer.

- Mix 10 µL of protein with 10 µL of 5X SYPRO Orange dye in a 96-well PCR plate.

- Run a thermal ramp from 25°C to 95°C at 1°C/min in a real-time PCR machine.

- Record fluorescence and calculate the melting temperature (Tm) for each variant. Report ΔTm relative to wild-type.

- Functional Assay:

- Perform standard kinetic assays (e.g., absorbance/fluorescence-based activity assay) under optimal conditions.

- Determine kcat and KM for wild-type and stabilized variants.

- Optional: Measure activity after incubation at elevated temperatures for various durations to assess thermostability of function.
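For the DSF step, Tm is commonly estimated as the temperature at which the fluorescence curve rises most steeply. The sketch below applies that first-derivative approach to synthetic sigmoid curves; it is an assumption-laden illustration rather than the analysis used by any particular instrument software, and real traces usually need smoothing and trimming of the post-transition decay first.

```python
import math


def melting_temperature(temperatures, fluorescence):
    """Estimate Tm as the temperature where dF/dT (central differences) is largest."""
    best_slope, tm = float("-inf"), None
    for i in range(1, len(temperatures) - 1):
        slope = ((fluorescence[i + 1] - fluorescence[i - 1])
                 / (temperatures[i + 1] - temperatures[i - 1]))
        if slope > best_slope:
            best_slope, tm = slope, temperatures[i]
    return tm


def synthetic_melt_curve(tm, temperatures, width=2.0):
    """Idealized two-state unfolding curve used only to exercise the function above."""
    return [1.0 / (1.0 + math.exp(-(t - tm) / width)) for t in temperatures]


temps = [25 + 0.5 * i for i in range(141)]  # 25 to 95 degrees C in 0.5 degree steps
wild_type_tm = melting_temperature(temps, synthetic_melt_curve(58.0, temps))
variant_tm = melting_temperature(temps, synthetic_melt_curve(61.5, temps))
print(f"delta Tm = {variant_tm - wild_type_tm:+.1f} C")
```

Reporting ΔTm relative to a wild-type control measured on the same plate helps cancel plate-to-plate and buffer effects.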

The relationship between computational scores and experimental outcomes is conceptualized below.

Figure 2: Relationship Between pLM Scores and Experimental Outcomes.

The integration of ESM2 and ProtBERT into protein engineering pipelines provides a powerful, data-driven approach to navigate the vast mutational landscape. By combining ESM2's structural insights with ProtBERT's evolutionary and functional constraints, researchers can prioritize mutations that simultaneously enhance stability and preserve function. This in-depth guide outlines the methodologies, tools, and validation protocols necessary to implement this cutting-edge computational approach, directly contributing to the accelerated design of robust proteins for therapeutic and industrial applications.

Within computational biology, the high cost and time-intensive nature of wet-lab experimentation for functional annotation creates a pressing need for methods that can predict protein function with minimal labeled examples. Zero-Shot Learning (ZSL) and Few-Shot Learning (FSL) have emerged as critical paradigms, leveraging pre-trained protein language models like ESM2 and ProtBERT to infer function from sequence alone, with little or no task-specific fine-tuning. This whitepaper details the technical foundations, experimental protocols, and applications of these methods, framed explicitly within a thesis on the applications of ESM2 and ProtBERT in computational biology research.

The exponential growth of protein sequence data from next-generation sequencing has far outpaced experimental functional characterization. Traditional supervised machine learning fails in this regime due to a lack of labeled training data for thousands of protein families or novel functions. ZSL and FSL, empowered by deep semantic representations from protein Language Models (pLMs), offer a path forward by transferring knowledge from well-characterized proteins to unlabeled or novel ones.

Core Technical Foundations

Protein Language Models as Semantic Encoders

- ESM2 (Evolutionary Scale Modeling): A transformer-based model trained on UniRef protein sequences. It learns evolutionary patterns and biochemical properties, producing embeddings that encapsulate structural and functional constraints.

- ProtBERT: A BERT-based model trained on UniRef100 (with a separate ProtBERT-BFD variant trained on BFD), specialized for capturing contextual amino acid relationships, useful for identifying functional motifs.

These pLMs provide a dense, semantically meaningful embedding space where geometric proximity correlates with functional similarity, enabling generalization to unseen classes.

Formal Definitions

- Zero-Shot Learning (ZSL): The model predicts functions for classes not seen during any training phase. It requires an auxiliary information source (e.g., textual function descriptions, Gene Ontology (GO) graphs) to link the model's embedding space to unseen class labels.

- Few-Shot Learning (FSL): The model learns from a very small number of labeled examples per novel class (e.g., 1-10 examples), typically via meta-learning or rapid fine-tuning of a pre-trained pLM.

Experimental Protocols & Methodologies

Zero-Shot Functional Annotation Protocol

Aim: To annotate a protein of unknown function with GO terms without any protein-specific training for those terms.

Workflow:

- Embedding Generation: Compute the per-residue and pooled sequence representation for the query protein using ESM2 (esm2_t36_3B_UR50D).

- Auxiliary Information Processing:

- Obtain textual definitions of target GO terms (Molecular Function, Biological Process).

- Encode each GO term description using a text encoder (e.g., Sentence-BERT).

- Semantic Alignment: Project both protein embeddings and GO term text embeddings into a shared latent space via a lightweight neural network, trained on proteins with known annotations.

- Similarity Scoring: For the query protein embedding, compute cosine similarity against all GO term embeddings.

- Prediction: Rank GO terms by similarity score; terms above a calibrated threshold are assigned as predictions.

Few-Shot Protein Family Classification Protocol

Aim: To classify proteins into a novel family given only 5 support examples per family.

Workflow (Prototypical Network Approach):

- Support Set Creation: For N novel classes, create a support set S containing k labeled examples per class (e.g., N=10, k=5).

- Prototype Computation: Encode all support examples with ProtBERT. For each class c, compute its prototype p_c as the mean vector of its support embeddings.

- Query Processing: Encode an unlabeled query protein q.

- Distance-Based Classification: Compute the Euclidean distance between q and each class prototype p_c.

- Prediction: Assign q to the class with the nearest prototype.
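The prototype and nearest-prototype steps above reduce to a few lines once embeddings are available. The sketch below uses two-dimensional toy vectors in place of ProtBERT embeddings, and the family labels are placeholders; the function names are illustrative.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def build_prototypes(support):
    """support: {class_label: [embedding, ...]} -> {class_label: prototype p_c}."""
    return {label: mean_vector(vectors) for label, vectors in support.items()}


def classify(query, prototypes):
    """Assign the query to the class whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Toy support set with k=2 examples for each of N=2 placeholder families.
support_set = {
    "family_A": [[0.9, 0.1], [1.1, -0.1]],
    "family_B": [[-1.0, 0.8], [-0.8, 1.2]],
}
prototypes = build_prototypes(support_set)
print(prototypes)
print(classify([0.7, 0.2], prototypes))
```

No parameters are trained at classification time, which is what makes the approach practical when only a handful of labeled examples exist per family.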

Data & Performance Benchmarks

Recent benchmark studies on datasets like SwissProt and CAFA assess the performance of pLM-based ZSL/FSL.

Table 1: Zero-Shot GO Term Prediction Performance (Fmax Score)

| Model | Embedding Source | Molecular Function (MF) | Biological Process (BP) | Dataset |

|---|---|---|---|---|

| ESM2-ZSL | ESM2 3B (pooled) | 0.51 | 0.42 | CAFA3 Test |

| ProtBERT-ZSL | ProtBERT-BFD (CLS) | 0.48 | 0.39 | CAFA3 Test |

| Baseline (BLAST) | Sequence Alignment | 0.41 | 0.32 | CAFA3 Test |
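The Fmax score reported above is the maximum, over decision thresholds, of the F1 value computed from protein-averaged precision and recall. A simplified sketch of that protein-centric calculation follows; it assumes every protein has at least one true term and omits the propagation of terms up the GO hierarchy that full CAFA-style evaluation performs. The function name and toy data are illustrative.

```python
def fmax(predictions, truth, thresholds=None):
    """predictions: {protein: {term: score}}; truth: {protein: set of true terms}."""
    thresholds = thresholds or [i / 100 for i in range(1, 101)]
    best = 0.0
    for t in thresholds:
        precisions, recalls = [], []
        for protein, true_terms in truth.items():
            scores = predictions.get(protein, {})
            predicted = {term for term, s in scores.items() if s >= t}
            hits = len(predicted & true_terms)
            if predicted:
                # Precision is averaged only over proteins with at least one prediction.
                precisions.append(hits / len(predicted))
            # Recall is averaged over all proteins.
            recalls.append(hits / len(true_terms))
        if not precisions:
            continue
        pr = sum(precisions) / len(precisions)
        rc = sum(recalls) / len(recalls)
        if pr + rc > 0:
            best = max(best, 2 * pr * rc / (pr + rc))
    return best


# Toy example with two proteins and placeholder term names.
toy_truth = {"P1": {"A", "B"}, "P2": {"D"}}
toy_predictions = {"P1": {"A": 0.9, "B": 0.4, "C": 0.6}, "P2": {"D": 0.8}}
print(round(fmax(toy_predictions, toy_truth), 3))
```

Because the threshold is chosen after the fact, Fmax describes the best achievable operating point rather than performance at a pre-committed cutoff.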

Table 2: Few-Shot Protein Family Classification (Accuracy %)

| Model | Support Shots per Class | Novel Family Accuracy | Base Family Accuracy | Dataset |

|---|---|---|---|---|

| ProtBERT + Prototypical Nets | 5 | 78.5% | 91.2% | Pfam Split |

| ESM2 + Fine-Tuning (Adapter) | 10 | 82.1% | 93.7% | Pfam Split |

| ESM1b + Logistic Regression | 20 | 70.3% | 88.5% | Pfam Split |

Visualizing Workflows & Relationships

Diagram: Zero-Shot Learning for GO Annotation

Diagram: Few-Shot Learning with Prototypical Networks

Table 3: Key Reagent Solutions for ZSL/FSL Experiments

| Item | Function / Description | Example/Source |

|---|---|---|

| Pre-trained pLMs | Provide foundational protein sequence representations. | ESM2 (3B, 15B params), ProtBERT, from Hugging Face/ESM GitHub. |

| Annotation Databases | Source of ground-truth labels and textual descriptions for training & evaluation. | Gene Ontology (GO), Pfam, UniProtKB/Swiss-Prot. |

| Benchmark Datasets | Standardized splits for fair evaluation of ZSL/FSL performance. | CAFA Challenge Data, Pfam Seed Splits (for few-shot), DeepFRI datasets. |

| Text Embedding Models | Encode functional descriptions into vector space. | Sentence-BERT (all-mpnet-base-v2), BioBERT. |

| Semantic Alignment Code | Implementation for mapping protein & text embeddings to shared space. | Custom PyTorch/TensorFlow layers; often adapted from CLIP-style architectures. |

| Meta-Learning Libraries | Frameworks for implementing few-shot learning algorithms. | Torchmeta, Learn2Learn, or custom Prototypical/MAML code. |

| High-Performance Compute | GPU clusters for embedding extraction and model training. | NVIDIA A100/T4 GPUs (via cloud or local HPC). |

Zero-shot and few-shot learning, powered by ESM2 and ProtBERT, are transforming functional prediction in computational biology. They move the field beyond the limitations of labeled data, enabling rapid hypothesis generation for novel sequences. Future work will focus on integrating structural embeddings from models like AlphaFold2, exploiting hierarchical GO graphs more explicitly, and developing more robust meta-learning strategies for the extreme few-shot (k=1) scenario. These approaches are poised to become indispensable tools for researchers and drug development professionals aiming to decipher the protein universe.

Within the broader thesis on the Applications of ESM2 and ProtBERT in Computational Biology Research, this whitepaper examines a pivotal intersection where protein language models (pLMs) have revolutionized structural prediction. AlphaFold2 (AF2), developed by DeepMind, marked a paradigm shift by achieving unprecedented accuracy in the Critical Assessment of Protein Structure Prediction (CASP14). Concurrently, Meta AI's Evolutionary Scale Modeling (ESM) project advanced pLMs, culminating in ESMFold—a model that predicts protein structure directly from a single sequence. This guide explores the technical foundations of ESMFold, its distinctions from and synergies with AF2, and its integration into modern protein structure prediction pipelines.

Technical Foundations: From ESM2 to ESMFold

ESMFold is built upon the ESM-2 pLM, a transformer model trained on millions of protein sequences. Unlike traditional methods relying on multiple sequence alignments (MSAs), ESM-2 learns evolutionary and biophysical constraints implicitly from sequences alone.

Key Architecture:

- ESM-2 Backbone: A standard transformer encoder processes the tokenized amino acid sequence, generating a contextualized embedding for each residue.

- Structure Module: Operating on the final-layer ESM-2 representations (in the published ESMFold, via an intermediate folding trunk), this module converts residue embeddings into 3D atomic coordinates. It typically consists of:

  - A linear layer to generate a frame (orientation) for each residue.

  - A network to predict distances between residues.

  - An iterative refinement process, often using invariant point attention (IPA, as in AF2), to generate the final all-atom structure.

The core innovation is the direct "sequence-to-structure" mapping, bypassing the computationally expensive MSA search and pairing step central to AF2's pipeline.

Diagram 1: ESMFold's Direct Sequence-to-Structure Pipeline

Comparative Analysis: ESMFold vs. AlphaFold2

While both predict high-accuracy structures, their mechanisms, inputs, and performance characteristics differ significantly.

Table 1: Core Comparison of ESMFold and AlphaFold2

| Feature | AlphaFold2 (AF2) | ESMFold |
|---|---|---|
| Primary Input | Single Sequence + Multiple Sequence Alignment (MSA) | Single Sequence only |
| Core Methodology | Evoformer (processes MSA/pairing) + Structure Module (IPA) | ESM-2 Transformer (pLM) + Lightweight Structure Module |
| Speed | Minutes to hours (MSA generation is bottleneck) | Seconds to minutes per structure |
| MSA Dependence | High accuracy relies on deep, informative MSA | Independent; accuracy from learned priors in pLM |
| Key Innovation | End-to-end differentiable, geometric deep learning | Transformer-based language model knowledge for structure |
| Best Performance | On targets with rich evolutionary data (high MSA depth) | On singleton proteins or where MSAs are shallow/unavailable |
| Computational Load | High (GPU memory & time for MSA/Evoformer) | Lower (Forward pass of large transformer) |

Table 2: Quantitative Performance Benchmark (CASP14/15 Targets)

| Model | TM-score (Global)↑ | GDT_TS↑ | pLDDT↑ | Avg. Inference Time↓ |
|---|---|---|---|---|
| AlphaFold2 | 0.88 | 0.85 | 89.2 | ~45-180 mins |
| ESMFold (single sequence; no MSA used) | 0.71 | 0.68 | 79.3 | ~2-10 mins |
| AF2 (No MSA) | 0.45 | 0.42 | 62.1 | ~30 mins |

Note: In this benchmark, ESMFold clearly outperforms AF2 run without an MSA (TM-score 0.71 vs. 0.45) and is much faster than AF2 run with an MSA. AF2 with an MSA remains state-of-the-art in accuracy.
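To make the TM-score column concrete, the following minimal sketch computes the score from per-residue Cα distances, assuming the two structures have already been aligned and superposed (for example by TM-align). The function names `tm_d0` and `tm_score` are illustrative, and the 0.5 Å floor for short chains is an assumption of this sketch. Real TM-score programs also search for the superposition that maximizes the sum, which is not attempted here.

```python
def tm_d0(target_length):
    """Length-dependent distance scale d0 = 1.24 * (L - 15)^(1/3) - 1.8, in Å."""
    if target_length > 15:
        d0 = 1.24 * (target_length - 15) ** (1.0 / 3.0) - 1.8
    else:
        d0 = 0.5
    # Assumption of this sketch: floor d0 at 0.5 Å so short chains stay well defined.
    return max(d0, 0.5)


def tm_score(distances, target_length):
    """TM-score for one fixed superposition.

    distances: Cα-Cα distance (Å) for each aligned residue pair.
    target_length: number of residues in the reference structure, which
    normalizes the score so unaligned residues count as zero.
    """
    if target_length <= 0:
        raise ValueError("target_length must be positive")
    d0 = tm_d0(target_length)
    total = sum(1.0 / (1.0 + (d / d0) ** 2) for d in distances)
    return total / target_length


if __name__ == "__main__":
    # A perfect match over half of a 100-residue target scores 0.5.
    print(tm_score([0.0] * 50, 100))
```

Because the sum is divided by the target length rather than the number of aligned pairs, a prediction that covers only part of the reference is penalized even if the covered part is accurate.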

ESMFold's Role in AlphaFold2 Pipelines

ESMFold is not a wholesale replacement for AF2 but a powerful complement within broader structural biology workflows.

1. Pre-Screening and Prioritization: ESMFold's speed allows for rapid assessment of thousands of candidate proteins (e.g., from metagenomic databases) to prioritize high-confidence or novel folds for deeper, more resource-intensive AF2 analysis.

2. MSA Generation Augmentation: The embeddings from ESM-2 can be used to perform in-silico mutagenesis or generate profile representations that guide or augment traditional HMM-based MSA construction for AF2.

3. Hybrid or Initialization Strategies: ESMFold's predicted structures or distances can serve as starting points or priors for AF2's refinement process, potentially speeding convergence or escaping local minima.

4. Singleton and Low-MSA Target Prediction: For proteins with no evolutionary homologs (singletons) or shallow MSAs, ESMFold offers a fast single-sequence alternative where AF2's performance degrades; its pLDDT should still be checked before relying on the model.

Diagram 2: Integrated Decision Pipeline for Structure Prediction
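One possible reading of this decision pipeline can be sketched as a small routing function. The `Target` fields, the route labels, and both thresholds (`min_msa_depth=30`, `plddt_priority=70.0`) are hypothetical values chosen for illustration, not settings taken from either tool; they would need tuning for a real project.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    mean_plddt: float  # mean ESMFold pLDDT on a 0-100 scale
    msa_depth: int     # number of homologs found (0 for a singleton)


def route_target(target, min_msa_depth=30, plddt_priority=70.0):
    """Decide what to do with a target after the fast ESMFold pre-screen."""
    if target.msa_depth < min_msa_depth:
        # Shallow or missing MSA: AF2 has little to work with, keep ESMFold.
        return "esmfold_only"
    if target.mean_plddt >= plddt_priority:
        # Confident pre-screen and a usable MSA: worth the full AF2 run first.
        return "alphafold2_priority"
    # Usable MSA but an unconvincing pre-screen: run AF2 when resources allow.
    return "alphafold2_backlog"


if __name__ == "__main__":
    for t in [Target("orphan", 82.0, 0), Target("kinase", 88.0, 1200)]:
        print(t.name, route_target(t))
```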

Experimental Protocol: Validating ESMFold Predictions

This protocol outlines a standard workflow for using and experimentally validating ESMFold predictions, commonly employed in structural biology labs.

Protocol: In-silico Prediction and Validation

A. Computational Prediction Phase

- Input Preparation: Obtain the amino acid sequence of the target protein in FASTA format. Ensure it is under 1000 residues for standard GPU memory constraints.

- ESMFold Inference:

  - Use the official ESMFold Colab notebook or a local installation (requires PyTorch).

  - Load the `esm.pretrained.esmfold_v1()` model.

  - Pass the sequence through the model with default settings (num_recycles=3).

  - Extract the predicted 3D coordinates (`.pdb` file), per-residue confidence metric (pLDDT), and predicted aligned error (PAE) matrix.

- AlphaFold2 Comparison (Optional but Recommended):

  - Run the same sequence through a local AF2 installation or ColabFold (simplified version).

  - Generate the AF2 prediction with MSAs enabled.

  - Align the ESMFold and AF2 structures using TM-align or PyMOL.
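The inference step can be sketched as follows, assuming the `fair-esm` package with its ESMFold dependencies is installed. `esm.pretrained.esmfold_v1()` and `infer_pdb` follow the usage shown in the facebookresearch/esm README; the helpers `per_residue_plddt` and `confident_span` and the pLDDT cutoff of 70 are illustrative additions. The parser relies on ESMFold writing pLDDT into the B-factor column of the PDB output.

```python
def predict_pdb(sequence):
    """Run ESMFold on one sequence and return the prediction as PDB text."""
    # Imported lazily so the parsing helpers below work without a GPU stack.
    import torch
    import esm

    model = esm.pretrained.esmfold_v1().eval()
    if torch.cuda.is_available():
        model = model.cuda()
    with torch.no_grad():
        return model.infer_pdb(sequence)


def per_residue_plddt(pdb_text):
    """Read one pLDDT value per residue from the B-factor of each CA atom."""
    scores = []
    for line in pdb_text.splitlines():
        # PDB fixed columns: atom name in 13-16, B-factor in 61-66 (1-based).
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            scores.append(float(line[60:66]))
    return scores


def confident_span(scores, cutoff=70.0):
    """Return (first, last) 0-based residue indices with pLDDT >= cutoff."""
    keep = [i for i, s in enumerate(scores) if s >= cutoff]
    return (keep[0], keep[-1]) if keep else None


if __name__ == "__main__":
    pdb_text = predict_pdb("MKTVRQERLKSIVRILERSKEPVSGAQLAEELSVSRQVIVQDIAYLRSLGYNIVATPRGYVLAGG")
    with open("prediction.pdb", "w") as handle:
        handle.write(pdb_text)
    print(confident_span(per_residue_plddt(pdb_text)))
```

The span returned by `confident_span` is one simple way to pick construct boundaries when low-confidence termini are to be trimmed before expression.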

B. Experimental Validation Phase (Exemplar: X-ray Crystallography)

- Cloning & Expression: Based on the predicted structured regions, design constructs for protein expression (e.g., in E. coli). The pLDDT score can guide truncation of disordered termini.

- Purification: Purify the protein using affinity (e.g., His-tag) and size-exclusion chromatography.

- Crystallization: Use the ESMFold/AF2 structure to inform crystallization strategies (e.g., surface entropy reduction mutagenesis).

- Data Collection & Structure Solution:

  - Collect X-ray diffraction data.

  - Use the ESMFold-predicted model as a molecular replacement (MR) search model in Phaser (CCP4 suite).

  - Refine the model using Phenix or Refmac.

- Validation Metrics: Compare the experimental structure to the prediction using Root-Mean-Square Deviation (RMSD) of Cα atoms and GDT_TS scores.
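As a rough illustration of these two metrics, the sketch below computes Cα RMSD and a simplified GDT_TS from matched coordinate lists. It assumes the predicted and experimental structures are already superposed and have the same residues in the same order; standard GDT_TS additionally searches for the best superposition at each cutoff, which this sketch does not do. The function names are illustrative.

```python
import math


def ca_distances(pred, ref):
    """Per-residue Cα distances (Å) between two pre-superposed structures."""
    if len(pred) != len(ref):
        raise ValueError("structures must contain the same number of residues")
    return [math.dist(p, r) for p, r in zip(pred, ref)]


def rmsd(distances):
    """Root-mean-square deviation over all matched residues."""
    return math.sqrt(sum(d * d for d in distances) / len(distances))


def gdt_ts(distances, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Simplified GDT_TS on a 0-100 scale.

    Averages the fraction of residues within each distance cutoff,
    using the single superposition that was supplied.
    """
    n = len(distances)
    fractions = [sum(d <= c for d in distances) / n for c in cutoffs]
    return 100.0 * sum(fractions) / len(cutoffs)


if __name__ == "__main__":
    predicted = [(0.0, 0.0, 0.0)] * 4
    experimental = [(0.5, 0.0, 0.0), (1.5, 0.0, 0.0), (3.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    d = ca_distances(predicted, experimental)
    print(round(rmsd(d), 3), gdt_ts(d))
```

The example shows why both numbers are reported: a single badly placed residue dominates the RMSD, while GDT_TS only counts it as one residue outside every cutoff.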

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools for ESMFold/AF2 Research & Validation

| Item/Category | Function/Description | Example/Provider |
|---|---|---|
| Computational Resources | | |
| ESMFold Code & Weights | Primary model for sequence-to-structure prediction. | GitHub: facebookresearch/esm |
| ColabFold | Streamlined, cloud-based AF2/ESMFold with automated MSA. | GitHub: sokrypton/ColabFold |
| AlphaFold2 (Local) | Full AF2 pipeline for high-accuracy, MSA-dependent predictions. | GitHub: deepmind/alphafold |
| Validation Software | | |
| PyMOL / ChimeraX | Visualization, alignment, and analysis of 3D structures. | Schrödinger / UCSF |
| TM-align | Algorithm for comparing protein structures and calculating TM-score. | Zhang Lab Server |
| Experimental Reagents | | |
| Phaser (CCP4) | Molecular replacement software using predicted models for phasing. | MRC Laboratory of Molecular Biology |
| Surface Entropy Reduction (SER) Kits | Mutagenesis primers/kits to improve crystallization propensity based on predicted surface. | Commercial (e.g., from specialized oligo providers) |
| Databases | | |
| PDB (Protein Data Bank) | Repository for experimental structures; used for benchmarking. | rcsb.org |
| UniProt | Comprehensive protein sequence database for MSA generation. | uniprot.org |
| AlphaFold DB / ModelArchive | Pre-computed AF2 and ESMFold predictions for proteomes. | alphafold.ebi.ac.uk / modelarchive.org |

ESMFold represents a transformative approach within the pLM-driven structural biology landscape defined by ESM2 and ProtBERT. By decoupling structure prediction from explicit evolutionary information, it provides a fast, scalable, and complementary tool to the more accurate but resource-intensive AlphaFold2. Its primary role in AF2 pipelines is one of augmentation—enabling high-throughput pre-screening, aiding in challenging low-MSA cases, and offering potential hybrid strategies. As pLMs continue to grow in scale and sophistication, the integration of "structure-from-sequence" models like ESMFold will become increasingly central to computational biology and drug discovery pipelines, accelerating the exploration of the vast protein universe.

This case study examines the transformative role of large-scale protein language models (pLMs), specifically ESM2 and ProtBERT, in streamlining the identification of antigenic epitopes and the de novo design of therapeutic antibodies. Framed within the broader thesis on their applications in computational biology, we detail how these models leverage evolutionary and semantic protein sequence information to predict structure, function, and binding, thereby compressing years of experimental work into computational workflows.

Thesis Context: A core tenet of modern computational biology is that protein sequence encodes not only structure but also functional semantics. ESM2 (Evolutionary Scale Modeling) and ProtBERT (Protein Bidirectional Encoder Representations from Transformers) are pre-trained on very large sequence corpora, ranging from tens of millions of sequences (UniRef50 for ESM2) to billions (BFD for ProtBERT), learning deep representations of biological patterns. This case study positions their application in immunology as a direct validation of that thesis, moving from sequence-based prediction to functional protein design.

Core Methodologies & Experimental Protocols

Epitope Prediction Using pLM-Derived Features

Objective: Identify linear and conformational B-cell epitopes from antigen protein sequences.

Protocol:

- Input Processing: The antigen amino acid sequence is tokenized and fed into either ESM2 (e.g., `esm2_t33_650M_UR50D`) or ProtBERT.

- Embedding Extraction: Per-residue embeddings (contextual vector representations) are extracted from the final or penultimate layer of the model.

- Feature Augmentation: Embeddings are combined with auxiliary features (e.g., predicted solvent accessibility, flexibility from tools like DynaMine, phylogenetic conservation).

- Model Training: A supervised classifier (e.g., Random Forest, Gradient Boosting, or a shallow neural network) is trained on known epitope-annotated datasets (e.g., IEDB).

- Prediction & Validation: The trained model predicts epitope probability per residue. Top-scoring regions are synthesized as peptides for validation via ELISA with sera from immunized hosts or known positive-control antibodies.
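Two steps of this protocol can be sketched in code: extracting per-residue ESM2 embeddings and turning per-residue epitope probabilities into candidate peptides. The embedding call follows the usage shown in the facebookresearch/esm README and assumes `fair-esm` is installed. The helper `top_epitope_windows`, its 15-residue window, and the choice of non-overlapping windows are illustrative assumptions, not part of any published tool.

```python
def esm2_residue_embeddings(sequence, layer=33):
    """Return a (len(sequence), hidden_dim) tensor of ESM2 embeddings."""
    # Imported lazily so the window helper below runs without PyTorch.
    import torch
    import esm

    model, alphabet = esm.pretrained.esm2_t33_650M_UR50D()
    model.eval()
    batch_converter = alphabet.get_batch_converter()
    _, _, tokens = batch_converter([("antigen", sequence)])
    with torch.no_grad():
        out = model(tokens, repr_layers=[layer])
    # Position 0 is the start token and the last position is the end token.
    return out["representations"][layer][0, 1 : len(sequence) + 1]


def top_epitope_windows(probs, window=15, top_k=3):
    """Pick the top_k non-overlapping windows by mean epitope probability.

    Returns a list of (start, end, mean_probability) with end exclusive.
    """
    if window > len(probs):
        return []
    scored = [
        (sum(probs[s : s + window]) / window, s)
        for s in range(len(probs) - window + 1)
    ]
    # Highest mean first; earlier start wins ties.
    scored.sort(key=lambda item: (-item[0], item[1]))
    chosen = []
    for mean, start in scored:
        if all(abs(start - other) >= window for _, other in chosen):
            chosen.append((mean, start))
        if len(chosen) == top_k:
            break
    return [(start, start + window, mean) for mean, start in chosen]


if __name__ == "__main__":
    probs = [0.1] * 5 + [0.9] * 5 + [0.1] * 5 + [0.6] * 5
    print(top_epitope_windows(probs, window=5, top_k=2))
```

The embeddings would feed the supervised classifier described above, and the selected windows are the regions that would be synthesized as peptides for ELISA.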

De Novo Antibody Design with pLMs

Objective: Generate novel, stable antibody variable region sequences targeting a specified epitope.

Protocol:

- Conditioning: The target antigen sequence or a motif of the predicted epitope is used as a conditional input. For ProtBERT, this can be formatted as a sequence-to-sequence task.

- Sequence Generation: Using fine-tuned versions of ESM2 or ProtBERT (e.g., via masked language modeling or encoder-decoder frameworks), the model generates candidate complementarity-determining region (CDR) sequences, particularly the hypervariable CDR-H3.

- In silico Affinity Screening: Generated Fv (variable fragment) sequences are structurally modeled (using AlphaFold2 or ESMFold). Binding energy (ΔG) is estimated via rigid-body docking (e.g., with ClusPro) and molecular mechanics/generalized Born surface area (MM/GBSA) calculations.

- Developability Filtering: Candidates are filtered using pLM-perplexity scores (lower perplexity indicates more "protein-like" sequences) and predictors for aggregation propensity and polyspecificity.

- Experimental Expression: Selected heavy and light chain sequences are synthesized, cloned into IgG expression vectors, transiently expressed in HEK293 cells, and purified via Protein A chromatography for in vitro binding assays (SPR/BLI).
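The perplexity filter in the developability step can be illustrated with a short sketch. It assumes that, for each candidate, the log-probability of the true residue at every position has already been obtained from the pLM (for a masked model, typically by masking one position at a time). The function names and the threshold used in the example are hypothetical.

```python
import math


def pseudo_perplexity(log_probs):
    """exp of the negative mean log-probability over all positions.

    log_probs: natural-log probability the model assigns to the actual
    residue at each position of one sequence.
    """
    if not log_probs:
        raise ValueError("log_probs must not be empty")
    return math.exp(-sum(log_probs) / len(log_probs))


def filter_by_perplexity(candidates, max_perplexity):
    """Keep candidates at or below the threshold, most protein-like first.

    candidates: dict mapping a candidate name to its list of log-probs.
    """
    scored = {name: pseudo_perplexity(lp) for name, lp in candidates.items()}
    kept = [name for name, ppl in scored.items() if ppl <= max_perplexity]
    return sorted(kept, key=scored.get)


if __name__ == "__main__":
    candidates = {
        "design_A": [math.log(0.5)] * 10,   # perplexity 2
        "design_B": [math.log(0.05)] * 10,  # perplexity 20, same as uniform over 20 residues
    }
    print(filter_by_perplexity(candidates, max_perplexity=10.0))
```

A sequence scored no better than a uniform guess over the 20 amino acids has a perplexity of 20, which gives a useful reference point when reading the values in Table 2.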

Table 1: Performance Comparison of pLM-Based Epitope Prediction Tools

| Model / Tool | Base pLM | AUC-ROC | Accuracy | Dataset (Reference) | Key Advantage |
|---|---|---|---|---|---|
| EPI-M | ESM-1b | 0.89 | 0.82 | IEDB Linear Epitopes | Integrates embeddings with physicochemical features |
| Residue-BERT | ProtBERT | 0.91 | 0.84 | SARS-CoV-2 Spike | Captures long-range dependencies for conformational epitopes |
| EmbedPool | ESM2 | 0.93 | 0.86 | AntiGen-PRO | Uses attention weights to highlight key residues |

Table 2: Benchmark of De Novo Designed Antibodies (In Silico)

| Design Method | pLM Used | Success Rate* (Affinity < 100 nM) | Average Perplexity ↓ | Computational Time per Design (GPU-hours) |
|---|---|---|---|---|
| Masked CDR Inpainting | ESM2-650M | 22% | 8.5 | ~1.2 |
| Conditional Sequence Generation | ProtBERT | 18% | 9.1 | ~0.8 |
| Hallucination with MCMC | ESM2-3B | 31% | 7.8 | ~5.0 |

*Success defined by in silico affinity prediction (MM/GBSA).

Visualized Workflows

Diagram: Epitope Prediction Workflow

Diagram: Antibody Design & Screening Pipeline

The Scientist's Toolkit: Key Research Reagent Solutions