Decoding CAPE: A Critical Guide to the Protein Engineering Challenge for Drug Development Researchers

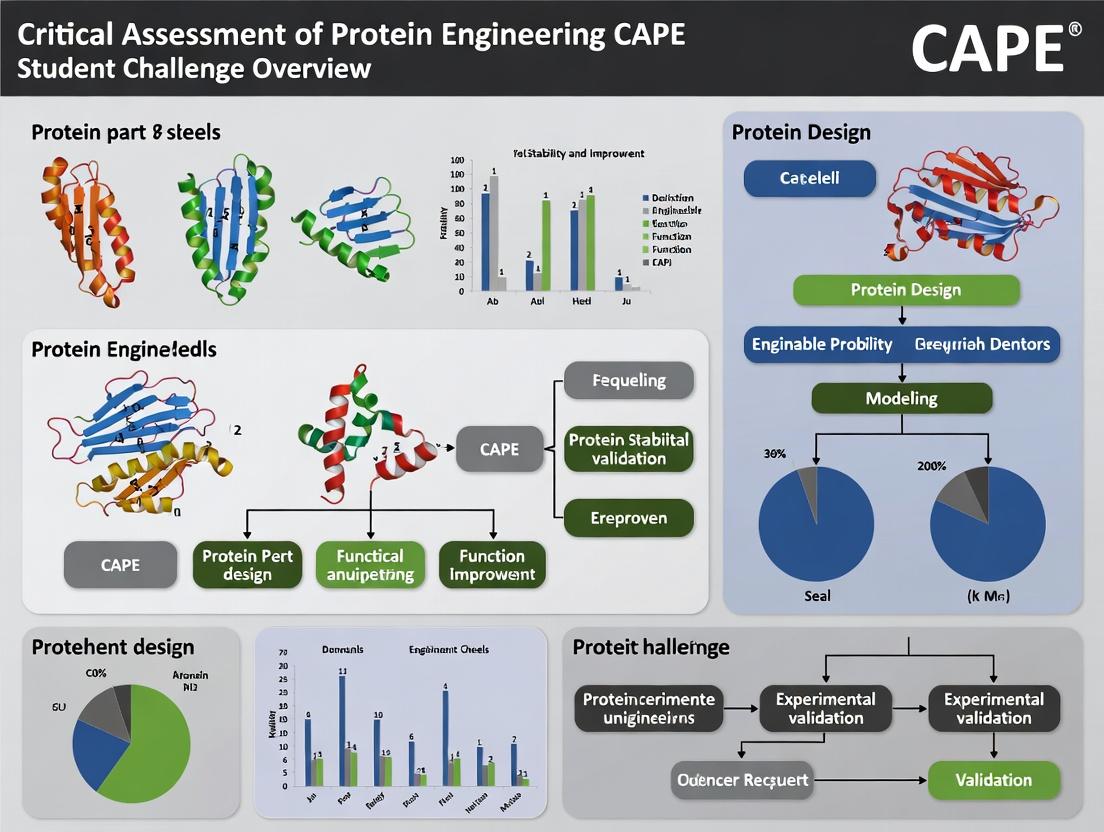



Abstract

This article provides a comprehensive, critical assessment of the Critical Assessment of Protein Engineering (CAPE) challenge, a pivotal community-wide initiative benchmarking computational tools in protein design. Tailored for researchers, scientists, and drug development professionals, we explore the foundational goals and evolution of CAPE, dissect its core methodologies and predictive tasks, analyze common pitfalls and optimization strategies for participant tools, and validate outcomes through comparative analysis of leading approaches. The synthesis offers actionable insights for leveraging CAPE benchmarks to drive innovation in therapeutic protein engineering, highlighting implications for accelerating biomedical research and clinical translation.

What is the CAPE Challenge? Unveiling the Benchmark for Protein Engineering Progress

The Critical Assessment of Protein Engineering (CAPE) is a community-wide challenge designed to rigorously evaluate computational methods for predicting and designing protein function. As part of a broader overview of the CAPE student challenge, this section details its foundational principles, operational mission, and the organizational consortium that governs it. CAPE serves as a benchmark for the field, giving researchers, scientists, and drug development professionals standardized datasets and blind assessments with which to advance protein engineering.

Origins and Context

The genesis of CAPE lies in the recognized need for unbiased, large-scale validation of computational protein design tools. While computational predictions have proliferated, their experimental validation has often been anecdotal or limited to specific protein families. Inspired by the success of previous Critical Assessment initiatives (e.g., CASP for structure prediction, CAGI for genomics), CAPE was formally established to address this gap. Its inaugural challenge was launched in 2023, focusing on the prediction of protein functional properties from sequence and structural data, prior to experimental verification.

Table 1: Evolution of Critical Assessment Challenges

| Challenge Acronym | Full Name | Primary Focus | First Year |

|---|---|---|---|

| CASP | Critical Assessment of Structure Prediction | Protein 3D structure | 1994 |

| CAGI | Critical Assessment of Genome Interpretation | Phenotype from genotype | 2010 |

| CAPE | Critical Assessment of Protein Engineering | Protein function & stability | 2023 |

Mission and Objectives

CAPE's mission is to accelerate the reliable application of computational protein engineering in biotechnology and therapeutic development through open, rigorous, and community-driven assessment. Its core objectives are:

- Benchmarking: Provide a transparent and fair platform for comparing the performance of diverse algorithms and methodologies.

- Blind Prediction: Foster robust scientific practice by evaluating predictions made on experimentally determined but unpublished data.

- Data Curation: Generate high-quality, publicly accessible datasets linking protein variants to quantitative functional metrics.

- Community Building: Catalyze collaboration between computational and experimental biologists.

- Tool Development: Identify strengths, weaknesses, and opportunities for innovation in existing protein engineering pipelines.

Organizing Consortium

CAPE is managed by a consortium of academic and research institutions. The organizational structure is designed to ensure scientific integrity, operational efficiency, and broad community representation.

[Figure: CAPE Consortium Organizational Workflow]

Table 2: Key Roles in the CAPE Consortium

| Role | Composition | Primary Responsibilities |

|---|---|---|

| Steering Committee | 6-8 senior scientists from diverse institutions | Sets scientific direction, approves challenge targets, oversees governance. |

| Experimental Data Providers | Academic/Industry Labs | Contribute novel, unpublished variant libraries with associated functional measurements (e.g., fluorescence, binding affinity, enzymatic activity). |

| Assessment Panel | Independent computational and experimental scientists | Designs evaluation metrics, performs objective analysis of submissions, writes summary reports. |

| Participant Teams | Global research groups (Academic & Industry) | Develop and apply computational methods to make blind predictions on challenge datasets. |

Experimental Protocols for Foundational Data Generation

CAPE relies on high-throughput experimental data. A typical protocol for generating a benchmark dataset (e.g., for enzyme stability) is outlined below.

Protocol: Deep Mutational Scanning (DMS) for Protein Stability

- Objective: Quantify the effect of thousands of single-point mutations on protein stability or function.

- Principle: Couple protein function to cell survival or fluorescence, followed by sequencing to determine variant abundance.

Detailed Methodology:

- Library Construction: A gene library is created via saturation mutagenesis (e.g., using NNK codons) covering target residues.

- Cloning & Expression: The variant library is cloned into an appropriate plasmid vector and expressed in a microbial host (e.g., E. coli).

- Functional Selection:

  - For stability: A thermal or chemical denaturation stress is applied. Functional, stable variants survive or retain activity.

  - For binding/activity: Fluorescence-Activated Cell Sorting (FACS) or growth-based selection is used based on a functional readout.

- Sequencing: Pre- and post-selection variant populations are harvested. The DNA is amplified and subjected to high-throughput sequencing (Illumina).

- Enrichment Score Calculation: For each variant, an enrichment score (ε) is calculated from the change in its frequency:

ε = log2(f_post / f_pre), where f is the variant frequency.

- Data Normalization: Scores are normalized to a reference wild-type (ε = 0) and deleterious control variants.
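The enrichment calculation above can be sketched in a few lines of Python. This is a minimal illustration, not CAPE's actual pipeline: the function name `enrichment_scores`, the variant labels, and the read counts are all hypothetical, and only the wild-type shift is shown (scaling against deleterious controls is omitted).

```python
import math

def enrichment_scores(pre_counts, post_counts, wild_type="WT"):
    """Return log2 enrichment per variant, shifted so the wild type scores 0."""
    pre_total = sum(pre_counts.values())
    post_total = sum(post_counts.values())
    raw = {}
    for variant, pre in pre_counts.items():
        post = post_counts.get(variant, 0)
        if pre == 0 or post == 0:
            continue  # ratio undefined without a pseudocount; skip in this sketch
        f_pre = pre / pre_total      # variant frequency before selection
        f_post = post / post_total   # variant frequency after selection
        raw[variant] = math.log2(f_post / f_pre)
    wt_score = raw[wild_type]
    # Normalize so that the wild-type reference sits at epsilon = 0
    return {variant: score - wt_score for variant, score in raw.items()}

# Hypothetical read counts for three variants (illustrative only)
pre = {"WT": 1000, "A24G": 500, "L45P": 500}
post = {"WT": 2000, "A24G": 2000, "L45P": 250}
scores = enrichment_scores(pre, post)
```

With these made-up counts, a variant enriched twice as strongly as wild type scores +1 and one depleted four-fold relative to wild type scores -2.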

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for CAPE-Style Protein Engineering Experiments

| Reagent/Material | Supplier Examples | Function in CAPE Context |

|---|---|---|

| Site-Directed Mutagenesis Kit | NEB Q5, Agilent QuikChange | Rapid construction of individual point mutants for validation studies. |

| Combinatorial Gene Library Synthesis | Twist Bioscience, IDT | Generation of high-quality, complex oligonucleotide pools for DMS library construction. |

| Phusion High-Fidelity DNA Polymerase | Thermo Fisher, NEB | Accurate amplification of variant libraries for sequencing preparation. |

| Illumina DNA Sequencing Kits | Illumina (NovaSeq, MiSeq) | High-throughput sequencing of variant populations pre- and post-selection. |

| Fluorogenic or Chromogenic Enzyme Substrate | Sigma-Aldrich, Promega | Quantitative assay of enzymatic activity for functional screening. |

| Surface Plasmon Resonance (SPR) Chip | Cytiva (Biacore) | Gold-standard validation of binding kinetics (KD, ka, kd) for top-predicted designs. |

| Size-Exclusion Chromatography Column | Bio-Rad, Cytiva | Assessment of protein oligomeric state and aggregation propensity post-purification. |

| Differential Scanning Fluorimetry (DSF) Dye | Thermo Fisher (SYPRO Orange) | High-throughput thermal stability profiling of purified protein variants. |

Core Data and Evaluation Metrics

CAPE evaluations are based on quantitative comparisons between computational predictions and experimental ground truth. Standard metrics are used across challenges.

Table 4: Core Quantitative Evaluation Metrics in CAPE

| Metric | Formula / Definition | Purpose |

|---|---|---|

| Pearson's r | r = cov(P, E) / (σ_P * σ_E) | Measures linear correlation between predicted (P) and experimental (E) values. |

| Spearman's ρ | Rank correlation coefficient. | Measures monotonic relationship, robust to outliers. |

| Root Mean Square Error (RMSE) | √[ Σ(P_i - E_i)² / N ] | Measures absolute error magnitude in prediction units. |

| Area Under the Curve (AUC) | Area under the ROC curve for classifying functional vs. non-functional variants. | Evaluates binary classification performance. |

| Top-k Recovery Rate | Percentage of experimentally top-performing variants found in the predicted top-k list. | Assesses utility for lead candidate identification. |
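As a rough illustration of how the metrics in Table 4 could be computed, the following dependency-free Python sketch implements each one directly from its definition. The function names and the five-variant example data are assumptions made for illustration; an actual assessment would more likely rely on an established statistics library.

```python
import math

def pearson_r(pred, exp):
    """Pearson correlation: covariance divided by the product of spreads."""
    n = len(pred)
    mp, me = sum(pred) / n, sum(exp) / n
    cov = sum((p - mp) * (e - me) for p, e in zip(pred, exp))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    se = math.sqrt(sum((e - me) ** 2 for e in exp))
    return cov / (sp * se)

def ranks(values):
    """1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result

def spearman_rho(pred, exp):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson_r(ranks(pred), ranks(exp))

def rmse(pred, exp):
    return math.sqrt(sum((p - e) ** 2 for p, e in zip(pred, exp)) / len(pred))

def roc_auc(pred, labels):
    """Chance that a functional variant outscores a non-functional one."""
    pos = [p for p, y in zip(pred, labels) if y]
    neg = [p for p, y in zip(pred, labels) if not y]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def top_k_recovery(pred, exp, k):
    """Percentage of the experimental top-k found in the predicted top-k."""
    def top(xs):
        return set(sorted(range(len(xs)), key=lambda i: xs[i], reverse=True)[:k])
    return 100.0 * len(top(pred) & top(exp)) / k

# Hypothetical predictions and measurements for five variants
predicted = [0.9, 0.1, 0.5, 0.7, 0.3]
experimental = [1.0, 0.0, 0.4, 0.8, 0.6]
functional = [e >= 0.5 for e in experimental]  # assumed activity cutoff

rho = spearman_rho(predicted, experimental)
error = rmse(predicted, experimental)
auc = roc_auc(predicted, functional)
recovery = top_k_recovery(predicted, experimental, k=2)
```

The 0.5 cutoff used to label variants as functional is arbitrary here; in a real challenge the threshold would come from the assessment panel's definition.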

CAPE establishes a vital framework for the objective assessment of computational protein engineering. Through its structured consortium, commitment to blind prediction, and generation of public benchmark datasets, it drives progress toward more reliable and impactful protein design for therapeutic and industrial applications. Its continued evolution will be crucial in translating algorithmic advances into real-world biological solutions.

The field of protein engineering, particularly for therapeutic development, has been revolutionized by advances in computational design, directed evolution, and high-throughput screening. However, the rapid proliferation of methods has created a critical gap: the inability to objectively compare performance across different laboratories, pipelines, and algorithms. Predictions of stability, binding affinity, and expressibility often remain siloed within specific methodological frameworks, leading to a literature replete with claims that are not independently verifiable. This gap impedes the translation of research into robust, deployable technologies for drug development. The Critical Assessment of Protein Engineering (CAPE) initiative was conceived to address this exact problem by establishing a community-wide, blind assessment framework.

The Core Problem: Lack of Standardized Benchmarks

The primary issue is the absence of standardized challenge problems with held-out ground truth data. Most published studies validate methods on retrospective, often cherry-picked, datasets or on proprietary internal data. As a result, deciding whether a new deep learning model truly outperforms traditional physics-based energy functions, or whether a novel screening protocol is genuinely more efficient, becomes an exercise in subjective interpretation.

Table 1: Common Limitations in Protein Engineering Literature Leading to the Gap

| Limitation Category | Typical Manifestation | Consequence |

|---|---|---|

| Dataset Bias | Use of non-public, historically successful targets; lack of negative design data. | Overestimation of generalizability; methods fail on novel scaffolds. |

| Validation Fragmentation | Inconsistent metrics (ΔΔG vs. IC50 vs. expression yield); different experimental protocols. | Impossible to perform direct, quantitative comparison between studies. |

| Computational Overfitting | Training and testing on data from similar experimental sources (e.g., same PDB subset). | Models perform well on "test" data but fail in prospective, real-world design. |

| Experimental Noise | High variance in biophysical assays (e.g., SPR, thermal shift) between labs. | Computational predictions cannot be fairly evaluated against noisy, inconsistent ground truth. |

The CAPE Solution: A Framework for Blind Assessment

CAPE operates on the model of successful community-wide challenges like CASP (Critical Assessment of Structure Prediction) and CAGI (Critical Assessment of Genome Interpretation). Its core premise is the organization of periodic challenges where participants are provided with a well-defined problem—for example, "design a variant of protein X with increased thermostability without affecting binding affinity to ligand Y." Participants submit their computationally designed sequences, which are then produced and tested uniformly by a central organizing committee using standardized, high-quality experimental protocols. The results are aggregated and compared against baseline methods.

CAPE Workflow Diagram

[Figure: CAPE Community-Wide Assessment Workflow]

Featured Experimental Protocols for Centralized Characterization

The credibility of CAPE hinges on reproducible, high-quality experimental validation. Below are detailed protocols for two cornerstone assays.

Protocol 3.1: High-Throughput Thermal Shift Assay (TSA) for Protein Stability

Objective: Determine the melting temperature (Tm) of purified protein variants in a 96-well format.

Reagents:

- Purified protein variant (0.5 mg/mL in PBS, pH 7.4).

- SYPRO Orange protein gel stain (5000X concentrate in DMSO).

- PBS Buffer (1X, pH 7.4).

- Sealed, optically clear 96-well PCR plates.

Procedure:

- Dilute SYPRO Orange to 10X in PBS.

- Prepare assay mix: 18 µL of protein solution + 2 µL of 10X SYPRO Orange per well (final dye concentration: 1X).

- Dispense 20 µL of assay mix into triplicate wells. Include a buffer-only control.

- Seal plate and centrifuge briefly.

- Run in a real-time PCR instrument with a gradient from 25°C to 95°C, with a ramp rate of 1°C/min, monitoring the ROX/FAM channel.

- Analyze data: Calculate the first derivative of fluorescence (dF/dT). The Tm is the temperature at the peak of the derivative curve. Report the mean and standard deviation of triplicates.
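The analysis step can be sketched as follows, assuming fluorescence has been exported as one reading per degree. The helper names and the idealized sigmoidal curves are illustrative assumptions; real melt curves are noisy and usually need smoothing before differentiation.

```python
import math
import statistics

def melting_temperature(temps, fluorescence):
    """Return the temperature at the maximum of dF/dT (central differences)."""
    best_t, best_slope = None, float("-inf")
    for i in range(1, len(temps) - 1):
        slope = (fluorescence[i + 1] - fluorescence[i - 1]) / (temps[i + 1] - temps[i - 1])
        if slope > best_slope:
            best_t, best_slope = temps[i], slope
    return best_t

def synthetic_melt_curve(temps, tm, width=2.0):
    """Idealized sigmoidal unfolding curve (illustrative data only)."""
    return [1.0 / (1.0 + math.exp(-(t - tm) / width)) for t in temps]

temps = list(range(25, 96))  # 25 °C to 95 °C in 1 °C steps

# Three synthetic replicates with slightly different midpoints
replicate_tms = [
    melting_temperature(temps, synthetic_melt_curve(temps, tm))
    for tm in (59, 60, 61)
]
tm_mean = statistics.mean(replicate_tms)
tm_sd = statistics.stdev(replicate_tms)  # sample standard deviation
```

The resolution of the reported Tm is limited by the temperature step, so finer sampling or peak interpolation would be needed to resolve differences below 1 °C.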

Protocol 3.2: Surface Plasmon Resonance (SPR) for Binding Kinetics

Objective: Measure the binding affinity (KD) of designed protein variants against an immobilized target ligand.

Reagents:

- Purified protein variant (analyte) in HBS-EP+ buffer (0.01 M HEPES, 0.15 M NaCl, 3 mM EDTA, 0.005% v/v Surfactant P20, pH 7.4).

- Biotinylated target ligand.

- Streptavidin (SA) sensor chip.

- HBS-EP+ buffer (running buffer).

- Regeneration solution (10 mM Glycine, pH 2.0).

Procedure:

- Immobilize biotinylated ligand to a specified response level (~50 RU) on an SA chip flow cell.

- Use a reference flow cell (streptavidin only) for background subtraction.

- Perform a 2-fold serial dilution of the analyte protein (typically 6 concentrations).

- Inject each concentration for 120s (association phase) at a flow rate of 30 µL/min, followed by a 300s dissociation phase with running buffer.

- Regenerate the surface with a 30s pulse of glycine pH 2.0.

- Fit the double-referenced sensorgrams to a 1:1 Langmuir binding model using the instrument's software to determine the association rate (ka), dissociation rate (kd), and equilibrium dissociation constant (KD = kd/ka).
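For readers less familiar with the 1:1 Langmuir model, the sketch below writes out its closed-form association and dissociation phases together with the relation KD = kd/ka. It only simulates the model; it does not reproduce the fitting done by the instrument software. The rate constants, Rmax, and concentrations are hypothetical values chosen for illustration.

```python
import math

def equilibrium_kd(ka, kd):
    """Equilibrium dissociation constant KD = kd / ka (in M)."""
    return kd / ka

def association_response(t, conc, ka, kd, rmax):
    """Response (RU) at time t during analyte injection, 1:1 Langmuir model."""
    k_obs = ka * conc + kd                   # observed rate constant (1/s)
    r_eq = rmax * conc / (conc + kd / ka)    # steady-state response at this concentration
    return r_eq * (1.0 - math.exp(-k_obs * t))

def dissociation_response(t, r0, kd):
    """Response (RU) at time t after the switch to running buffer."""
    return r0 * math.exp(-kd * t)

# Hypothetical kinetic parameters (assumptions, not measured values)
ka = 1.0e5    # association rate constant, 1/(M*s)
kd = 1.0e-3   # dissociation rate constant, 1/s
rmax = 50.0   # maximum response, RU

kd_eq = equilibrium_kd(ka, kd)  # 1e-8 M, i.e. 10 nM

# 2-fold dilution series, six concentrations starting at 100 nM
concentrations = [100e-9 / 2 ** i for i in range(6)]

# Response at the end of a 120 s injection, then after 300 s of dissociation
end_of_injection = [association_response(120, c, ka, kd, rmax) for c in concentrations]
after_dissociation = [dissociation_response(300, r, kd) for r in end_of_injection]
```

Choosing concentrations that bracket the expected KD, as this series does, helps the association curves differ enough in shape for ka and kd to be resolved.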

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Research Reagent Solutions for CAPE-Style Validation

| Reagent/Material | Function in Assessment | Key Consideration |

|---|---|---|

| NGS-based Deep Mutational Scanning Library | Provides a comprehensive fitness landscape for a protein of interest, serving as a ground-truth training and test set. | Library coverage and quality control are paramount to avoid biased fitness scores. |

| Site-Directed Mutagenesis Kit (e.g., Q5) | Rapid construction of individual designed variants for focused validation. | Requires high-fidelity polymerase to avoid secondary mutations. |

| Mammalian Transient Expression System (e.g., HEK293F) | Produces proteins with proper eukaryotic post-translational modifications for therapeutic-relevant assessments. | Expression titers can vary significantly; require normalization for fair comparison. |

| Octet RED96 or Biacore 8K | Label-free systems for high-throughput (Octet) or high-precision (SPR) binding kinetics. | Must use the same lot of buffers and ligand for all variants to minimize inter-assay variance. |

| Size-Exclusion Chromatography (SEC) Column | Assess aggregation state and monodispersity of purified variants—a critical quality attribute. | A multi-angle light scattering (MALS) detector provides absolute molecular weight confirmation. |

| Stable Cell Line Development Service | For challenges requiring assessment of protein function in a cellular context (e.g., signaling modulation). | Clonal variation must be controlled; use pooled populations or multiple clones. |

Data Synthesis and the Path Forward

The ultimate output of a CAPE challenge is a quantitative, fair comparison across diverse methodologies. This allows the community to identify which approaches work best for specific sub-problems.

Table 3: Hypothetical CAPE Challenge Results for Stability Design

| Participant Method | Average ΔTm vs. Wild-Type (°C) | Success Rate (ΔTm ≥ +5 °C) | Experimental Yield (mg/L) | Computational Runtime (GPU-hr/design) |

|---|---|---|---|---|

| Rosetta ddG (Baseline) | +3.2 ± 2.1 | 45% | 12.5 ± 8.2 | 2.5 |

| Deep Learning Model A | +7.8 ± 3.5 | 78% | 5.1 ± 4.3 | 0.1 |

| Evolutionary Model B | +5.1 ± 2.8 | 62% | 18.7 ± 6.9 | 0.5 |

| Hybrid Physics-NN Model C | +6.9 ± 2.9 | 70% | 10.3 ± 7.1 | 5.8 |

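Such a table reveals trade-offs. In this hypothetical example, Model A achieves the largest stability gain (+7.8 °C average ΔTm, 78% success rate) but the lowest experimental yield (5.1 mg/L), suggesting it may select for poorly expressed or insoluble variants. Model B delivers the highest yield (18.7 mg/L) with a more modest stability gain, so it favors expressibility. This nuanced understanding is only possible through community-wide assessment.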
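One simple way to make such trade-offs explicit is to ask which methods are Pareto-optimal, meaning no other method is at least as good on every criterion and strictly better on one. The sketch below applies this to the mean ΔTm and mean yield from the hypothetical table above; the function name is an assumption, and the comparison ignores the reported standard deviations.

```python
# Mean values copied from the hypothetical results table: (delta Tm in °C, yield in mg/L)
results = {
    "Rosetta ddG (Baseline)": (3.2, 12.5),
    "Deep Learning Model A": (7.8, 5.1),
    "Evolutionary Model B": (5.1, 18.7),
    "Hybrid Physics-NN Model C": (6.9, 10.3),
}

def pareto_front(results):
    """Return methods not dominated on both stability gain and yield."""
    front = []
    for name, (tm, yld) in results.items():
        dominated = any(
            o_tm >= tm and o_yld >= yld and (o_tm > tm or o_yld > yld)
            for other, (o_tm, o_yld) in results.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front

front = pareto_front(results)
```

On these numbers only the baseline is dominated (Model B beats it on both axes), while Models A, B, and C each represent a different balance of stability and yield.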

Methodology Decision Logic

[Figure: Method Selection Based on CAPE Insights]

The CAPE framework closes the critical gap by providing the necessary rigorous, apples-to-apples comparison. It moves the field from subjective claims to objective metrics, accelerating the identification of robust engineering principles and reliable computational tools. For researchers and drug developers, participation in or utilization of CAPE results de-risks methodological choices and provides a clear, evidence-based path for translating protein design into viable therapeutics.

The Critical Assessment of Protein Engineering (CAPE) student challenge serves as a community-wide benchmarking initiative to quantitatively evaluate the state-of-the-art in protein design. This whitepaper situates the core objectives of Accuracy, Robustness, and Innovation within the CAPE framework. CAPE provides standardized datasets, blinded experimental validation, and a platform for head-to-head comparison of algorithms, moving the field beyond anecdotal success toward rigorous, reproducible metrics. Benchmarking within this context is not an academic exercise but a critical driver for translational progress in therapeutics, enzymes, and biomaterials.

Defining and Quantifying the Core Objectives

Accuracy: Fidelity to Design Intent

Accuracy measures the deviation between the designed protein and the intended structural/functional outcome.

Primary Metrics:

- Root-Mean-Square Deviation (RMSD): Measures backbone atomic displacement between predicted and experimentally determined (e.g., X-ray, Cryo-EM) structures. Sub-Ångstrom levels are ideal for high-accuracy design.

- Sequence Recovery: Percentage of amino acids in a designed protein that match those in a natural or reference sequence for a given fold or function.

- Functional Activity Metrics: e.g., catalytic efficiency (kcat/Km), binding affinity (KD, IC50), or fluorescent quantum yield relative to the design target.
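The first two metrics reduce to short formulas, sketched below in Python. The sketch assumes the two structures are already superimposed and have matching atom order, and that the two sequences are already aligned to equal length; the coordinates and sequences are invented for illustration.

```python
import math

def rmsd(coords_a, coords_b):
    """RMSD over paired atoms; assumes the structures are already superimposed."""
    if len(coords_a) != len(coords_b):
        raise ValueError("coordinate lists must have the same length")
    squared = sum(
        (xa - xb) ** 2 + (ya - yb) ** 2 + (za - zb) ** 2
        for (xa, ya, za), (xb, yb, zb) in zip(coords_a, coords_b)
    )
    return math.sqrt(squared / len(coords_a))

def sequence_recovery(designed, reference):
    """Percentage of aligned positions where the designed residue matches the reference."""
    if len(designed) != len(reference):
        raise ValueError("sequences must be aligned to equal length")
    matches = sum(d == r for d, r in zip(designed, reference))
    return 100.0 * matches / len(reference)

# Hypothetical backbone atom positions (Å): design model vs. experimental structure
design_model = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
experimental = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
backbone_rmsd = rmsd(design_model, experimental)

# Hypothetical aligned sequences
recovery = sequence_recovery("MKTAYIAK", "MKSAYLAK")
```

In practice the superposition step matters as much as the formula, since RMSD computed before optimal alignment overstates the deviation.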

Table 1: Quantitative Benchmarks for Accuracy in Protein Design

| Metric | High Accuracy | Moderate Accuracy | Low Accuracy | Typical Assay |

|---|---|---|---|---|

| Global Backbone RMSD | < 1.0 Å | 1.0 - 2.5 Å | > 2.5 Å | X-ray Crystallography |

| Sequence Recovery | > 40% | 20% - 40% | < 20% | Multiple Sequence Alignment |

| Binding Affinity (KD) | ≤ nM range | nM - µM range | > µM range | Surface Plasmon Resonance (SPR) |

| Enzymatic Efficiency | ≥ 10% of wild-type | 1% - 10% of wild-type | < 1% of wild-type | Kinetic Fluorescence Assay |

Robustness: Reliability Across Diverse Tasks

Robustness evaluates the consistency of a design method across varying protein families, folds, and functional challenges, not just on narrow, optimized test cases.

Primary Metrics:

- Success Rate: The fraction of designs that express solubly, fold correctly, and pass basic functional screening across a diverse benchmark set (e.g., Top7 fold, TIM barrels, protein-protein interfaces).

- Generalization Error: The difference in performance (e.g., RMSD, stability) between designs on curated "training-like" folds and entirely novel or de novo folds.

- Algorithmic Stability: Minimal variation in output design given small perturbations in input parameters or starting sequence.

Table 2: Benchmarking Robustness Across Diverse Protein Families

| Protein Design Challenge | High Robustness (Success Rate) | Moderate Robustness | Low Robustness | Validation Method |

|---|---|---|---|---|

| De Novo Fold Design | > 25% | 10% - 25% | < 10% | SEC-MALS, CD Spectroscopy |

| Protein-Protein Interface | > 15% | 5% - 15% | < 5% | Yeast Display, SPR |

| Enzyme Active Site | > 5% | 1% - 5% | < 1% | Functional High-Throughput Screening |

| Membrane Protein | > 10% | 2% - 10% | < 2% | FSEC, Thermal Stability Assay |

Innovation: Capacity for Novel Discovery

Innovation assesses a method's ability to generate proteins with novel sequences, structures, or functions not observed in nature.

Primary Metrics:

- Novelty Distance: Quantifiable difference (e.g., TM-score < 0.5, low sequence identity) between designed proteins and all known structures in the PDB.

- Functional Innovation: Demonstration of a designed function (e.g., catalysis of a non-biological reaction, binding to a neoantigen) with no known natural equivalent.

- Exploration of Sequence Space: The diversity and non-redundancy of sequences generated for a single target scaffold.

Experimental Protocols for Benchmarking

Protocol for Accuracy Validation (High-Resolution Structure)

Objective: Determine atomic-level accuracy of a designed protein.

- Cloning & Expression: Gene fragment is cloned into a pET vector with a His-tag via Gibson assembly. Transformed into E. coli BL21(DE3) cells.

- Protein Purification: Cells are lysed by sonication. Protein is purified via Ni-NTA affinity chromatography, followed by size-exclusion chromatography (SEC) in a buffer like 20 mM Tris pH 8.0, 150 mM NaCl.

- Crystallization: Purified protein at 10 mg/mL is subjected to sparse-matrix screening (e.g., Hampton Research screens) using sitting-drop vapor diffusion.

- Data Collection & Refinement: X-ray diffraction data is collected at a synchrotron. Phasing is solved by molecular replacement using the design model. Iterative rebuilding and refinement is performed in Phenix and Coot.

- Analysis: Final experimental model is aligned to the design model using PyMOL's align command. Global backbone RMSD is calculated over all residues.

Protocol for Robustness Screening (High-Throughput Characterization)

Objective: Assess folding and stability for dozens to hundreds of designs in parallel.

- Library Construction: Designs are encoded in oligo pools, cloned into an expression vector via golden gate assembly, and transformed into high-efficiency competent cells.

- Small-Scale Expression: 96-deep-well plates with 1 mL TB auto-induction media are inoculated and grown at 37°C, then induced at 18°C overnight.

- Lysis & Clarification: Cells are lysed chemically (BugBuster) or by sonication. Lysates are clarified by centrifugation.

- HT-SEC/FPLC: Clarified lysates are injected onto an automated SEC system (e.g., Agilent AdvanceBio). Elution profiles are monitored by UV (280 nm). A single, symmetric peak at the expected elution volume indicates a monodisperse, folded protein.

- Thermal Shift Assay (TSA): 5 µL of lysate or purified protein is mixed with 5 µL of SYPRO Orange dye in a qPCR plate. Fluorescence is monitored from 25°C to 95°C. The melting temperature (Tm) is extracted from the inflection point.

Protocol for Innovation Validation (Functional Activity)

Objective: Test a designed enzyme for novel catalytic activity.

- Substrate Design: A fluorogenic or chromogenic probe for the target non-biological reaction is synthesized or purchased.

- Kinetic Assay: Reactions are set up in 96-well plates with 1-100 nM enzyme and varying substrate concentrations in appropriate buffer.

- Continuous Monitoring: Fluorescence/absorbance is read every 30 seconds for 10-60 minutes using a plate reader.

- Data Analysis: Initial velocities are plotted against substrate concentration. Data is fit to the Michaelis-Menten equation using GraphPad Prism to extract kcat and Km.
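To show what the fit extracts, the sketch below estimates Vmax and Km from initial velocities and then derives kcat = Vmax / [E]. Note that it swaps in a Hanes-Woolf linearization (S/v plotted against S) so that it runs without external libraries; this is not the nonlinear regression performed in GraphPad Prism, which is generally preferable for noisy data. The substrate concentrations, enzyme concentration, and noise-free velocities are hypothetical.

```python
def fit_michaelis_menten(substrate, velocity):
    """Estimate (Vmax, Km) by Hanes-Woolf linearization: S/v = S/Vmax + Km/Vmax."""
    xs = substrate
    ys = [s / v for s, v in zip(substrate, velocity)]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    vmax = 1.0 / slope        # slope equals 1 / Vmax
    km = intercept * vmax     # intercept equals Km / Vmax
    return vmax, km

# Hypothetical, noise-free data generated from known parameters
true_vmax, true_km = 2.0, 50.0                         # µM/s and µM
substrate = [5.0, 10.0, 25.0, 50.0, 100.0, 200.0]      # µM
velocity = [true_vmax * s / (true_km + s) for s in substrate]

vmax, km = fit_michaelis_menten(substrate, velocity)

enzyme_conc = 0.01            # µM (10 nM, within the 1-100 nM range above)
kcat = vmax / enzyme_conc     # turnover number, 1/s
efficiency = kcat / km        # catalytic efficiency, 1/(µM*s)
```

Spanning substrate concentrations from well below to well above Km, as in this series, is what allows both parameters to be determined with confidence.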

Visualization of Key Concepts

[Figure: Protein Design & Benchmarking Workflow]

[Figure: CAPE Benchmarking Evaluation Pipeline]

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Reagents for Protein Design Benchmarking

| Reagent / Material | Function in Benchmarking | Example Vendor/Product |

|---|---|---|

| Ni-NTA Agarose Resin | Affinity purification of His-tagged designed proteins for initial characterization. | Qiagen, Thermo Fisher Scientific |

| Size-Exclusion Chromatography Columns | Polishing purification and assessment of monodispersity/folding (HT-SEC). | Cytiva (HiLoad Superdex), Agilent (AdvanceBio) |

| SYPRO Orange Protein Gel Stain | Dye for Thermal Shift Assays (TSA) to determine protein thermal stability (Tm). | Thermo Fisher Scientific |

| Crystallization Screening Kits | Sparse-matrix screens to identify initial conditions for growing protein crystals. | Hampton Research (Crystal Screen), Molecular Dimensions |

| Fluorogenic Peptide/Substrate Libraries | High-throughput functional screening of designed enzymes or binders. | Enzo Life Sciences, Bachem |

| Yeast Surface Display System | Library-based selection and affinity maturation of designed binding proteins. | A system based on pYD1 vector and EBY100 yeast strain. |

| Mammalian Transfection Reagents (PEI) | Transient expression of challenging designs (e.g., glycoproteins) in HEK293 cells. | Polyethylenimine (PEI) Max (Polysciences) |

| Next-Generation Sequencing (NGS) Services | Deep sequencing of designed variant libraries to analyze sequence-function landscapes. | Illumina NovaSeq, Oxford Nanopore |

| Machine Learning Cloud Credits | Computational resources for training/inference with large protein models (e.g., on AWS or GCP). | Amazon Web Services, Google Cloud Platform |

The Critical Assessment of Protein Engineering (CAPE) challenge is a community-wide initiative designed to rigorously benchmark computational methods for predicting and designing protein function and stability. Framed within the broader thesis of advancing reproducible, data-driven protein engineering research, CAPE provides a standardized framework to evaluate the state of the field. This document tracks the evolution of CAPE's distinct phases, detailing its expanding technical scope and providing a guide to its experimental and computational methodologies.

CAPE has progressed through defined phases, each introducing new complexities and data types. The table below summarizes the evolution.

Table 1: Evolution of CAPE Challenge Phases

| Phase | Primary Focus | Key Datasets/Proteins | Core Challenge | Year Initiated |

|---|---|---|---|---|

| CAPE 1 | Stability Prediction | Deep Mutational Scanning (DMS) data for GB1, BRCA1, etc. | Predicting variant fitness from sequence. | 2020 |

| CAPE 2 | Binding & Affinity | DMS for antibody-antigen (e.g., SARS-CoV-2 RBD) & peptide-protein interactions. | Predicting binding affinity changes upon mutation. | 2022 |

| CAPE 3 | De Novo Design & Multi-state Specificity | Designed protein binders, multi-specificity switches. | Designing de novo proteins with targeted functional properties. | 2024 (Projected) |

Detailed Experimental Protocols for Core Data Generation

The reliability of CAPE benchmarks hinges on standardized, high-quality experimental data. The following protocols are foundational.

3.1 Protocol for Deep Mutational Scanning (DMS) for Protein Stability

- Objective: Generate a fitness score for thousands of single amino acid variants of a target protein.

- Materials: Gene library of variants, appropriate expression vector, microbial (yeast/bacterial) or mammalian display system, flow cytometer for sorting.

- Procedure:

  - Library Construction: Create a saturation mutagenesis library covering all positions of the target gene.

  - Expression & Selection: Clone library into a display system (e.g., yeast surface display). Induce expression.

  - Stability Selection: Label cells with a conformation-sensitive fluorescent dye (e.g., a dye binding to a stable, properly folded protein epitope). Use FACS to sort populations based on fluorescence intensity into bins representing different stability levels.

  - Deep Sequencing: Isolate DNA from pre-selection library and each sorted population. Amplify target region and perform high-throughput sequencing.

  - Fitness Score Calculation: Enrichment ratios for each variant between sorted bins and the pre-selection library are computed to derive a quantitative fitness score proportional to protein stability.

3.2 Protocol for DMS for Binding Affinity

- Objective: Measure the effect of mutations on binding affinity at scale.

- Materials: As in 3.1, plus purified, biotinylated ligand.

- Procedure:

  - Library Display: Express the variant library on the cell surface.

  - Binding Selection: Label cells with a titration of biotinylated ligand, followed by a fluorescent streptavidin conjugate. Use FACS to sort cells based on ligand-binding signal into high-, medium-, low-, and non-binding populations.

  - Sequencing & Analysis: Follow the Deep Sequencing and Fitness Score Calculation steps of Protocol 3.1. Enrichment ratios are used to calculate binding fitness scores, which correlate with binding affinity (KD).

Key Signaling and Workflow Visualizations

[Figure: CAPE DMS Experimental & Analysis Workflow]

[Figure: CAPE Prediction Model Abstraction]

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Reagents for CAPE-Style DMS Experiments

| Reagent/Material | Function in Experiment | Example Product/System |

|---|---|---|

| Saturation Mutagenesis Kit | Efficiently generates the library of DNA variants covering all target codons. | NEB Q5 Site-Directed Mutagenesis Kit, Twist Bioscience oligo pools. |

| Yeast Surface Display System | Eukaryotic platform for displaying protein variants, allowing for stability and binding selections. | pYDS vector series, Saccharomyces cerevisiae EBY100 strain. |

| Conformation-Sensitive Dye | Binds to properly folded protein epitopes; fluorescence intensity reports on protein stability. | Anti-c-Myc antibody (for epitope tag) with fluorescent conjugate. |

| Biotinylated Ligand | The binding target (antigen, receptor, etc.) used to select for functional binders. | Purified target protein biotinylated via EZ-Link NHS-PEG4-Biotin. |

| Fluorescent Streptavidin | High-affinity conjugate that binds biotinylated ligand, enabling detection by flow cytometry. | Streptavidin conjugated to Alexa Fluor 647 or PE. |

| Flow Cytometry Cell Sorter | Instrument to analyze and physically sort cell populations based on fluorescent labels. | BD FACS Aria, Beckman Coulter MoFlo Astrios. |

| High-Throughput Sequencer | Determines the abundance of each variant in sorted populations via DNA sequencing. | Illumina MiSeq/NovaSeq, Oxford Nanopore MinION. |

The Critical Assessment of Protein Engineering (CAPE) student challenge serves as a benchmark for evaluating innovative methodologies in computational and experimental protein design. This whitepaper, framed within a broader thesis assessing the CAPE challenge, provides a technical guide to its core mechanisms, focusing on the synergistic roles of its diverse stakeholders—from academic research groups to industry biotech leaders. The integration of cross-disciplinary expertise is critical for advancing predictive modeling, high-throughput screening, and functional validation, which are central to modern therapeutic development.

Stakeholder Ecosystem & Quantitative Analysis

The CAPE challenge orchestrates collaboration across distinct sectors. The following table summarizes the primary participant categories and their quantitative contributions based on recent challenge data.

Table 1: Key Stakeholder Categories & Metrics in Recent CAPE Challenges

| Stakeholder Category | Primary Role | % of Total Teams (Approx.) | Typical Resource Contribution |

|---|---|---|---|

| Academic Research Labs | Algorithm development, foundational science | 65% | Computational models, novel assays, open-source tools |

| Biotech/Pharma R&D | Applied therapeutic design, validation | 25% | Proprietary datasets, high-throughput screening, lead optimization |

| Hybrid Consortia | Translational bridge, method benchmarking | 8% | Integrated workflows, standardized metrics |

| Independent & Student Groups | Innovative, disruptive approaches | 2% | Novel algorithms, cost-effective protocols |

Core Experimental Methodologies

The CAPE challenge centers on rigorous protocols for protein engineering. Below are detailed methodologies for key experimental phases cited in recent challenges.

Protocol A: Deep Mutational Scanning (DMS) for Fitness Landscape Mapping

- Objective: To quantitatively assess the functional impact of thousands of single-point mutations in a target protein.

- Materials: Mutant plasmid library, competent cells (e.g., NEB 10-beta), selection media, NGS prep kit.

- Procedure:

- Library Construction: Generate a saturation mutagenesis library via pooled oligonucleotide synthesis and Gibson assembly.

- Transformation & Selection: Transform library into competent cells at high efficiency (>10^9 CFU). Culture under selective pressure (e.g., antibiotic, substrate dependency).

- Harvest & Sequencing: Isolate plasmid DNA from pre- and post-selection populations. Prepare amplicons for Next-Generation Sequencing (NGS) using a 2-step PCR protocol.

- Data Analysis: Enrichment scores for each variant are calculated as log2((count_post + pseudocount) / (count_pre + pseudocount)), where count_post and count_pre are the variant's read counts in the post- and pre-selection populations. Variants with scores >1.5 are considered functionally enhanced.
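The enrichment calculation in the Data Analysis step can be sketched in a few lines of Python. This is a minimal illustration, not the challenge's actual analysis code: the function names, the pseudocount of 0.5, and the read counts are assumptions made for the example. It applies the formula to raw counts exactly as written above; real pipelines usually also normalize by sequencing depth.

```python
import math

def enrichment_scores(pre_counts, post_counts, pseudocount=0.5):
    """Return {variant: log2 enrichment} from pre-/post-selection read counts."""
    scores = {}
    for variant, pre in pre_counts.items():
        post = post_counts.get(variant, 0)  # variant lost during selection -> 0 reads
        scores[variant] = math.log2((post + pseudocount) / (pre + pseudocount))
    return scores

def enhanced_variants(scores, threshold=1.5):
    """Variants whose score exceeds the protocol's cutoff of 1.5."""
    return sorted(v for v, s in scores.items() if s > threshold)

# Hypothetical read counts, for illustration only.
pre = {"A12V": 100, "K83R": 200, "L102Q": 150}
post = {"A12V": 400, "K83R": 190}
scores = enrichment_scores(pre, post)
print(enhanced_variants(scores))  # prints ['A12V']
```

The pseudocount keeps the score finite for variants such as L102Q that drop out entirely after selection.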

Protocol B: Biolayer Interferometry (BLI) for Binding Affinity (K_D) Measurement

- Objective: To determine binding kinetics and affinity of engineered protein variants.

- Materials: BLI instrument (e.g., FortéBio Octet), streptavidin (SA) biosensors, biotinylated purified target antigen, assay (kinetics) buffer.

- Procedure:

- Biosensor Loading: Hydrate streptavidin (SA) biosensors. Load each biosensor with biotinylated target antigen (10 µg/mL) for 300 seconds.

- Baseline & Association: Establish a 60-second baseline in kinetics buffer. Immerse sensor in well containing the engineered protein variant (serial dilution from 500 nM to 3.125 nM) for 300 seconds to monitor association.

- Dissociation: Transfer sensor to a well containing only kinetics buffer for 400 seconds to monitor dissociation.

- Analysis: Fit sensorgram data to a 1:1 binding model using the instrument's software to calculate the association (k_on) and dissociation (k_off) rate constants, deriving K_D = k_off / k_on.
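To make the 1:1 binding model concrete, the sketch below writes out the standard closed-form expressions for the association and dissociation phases and the K_D ratio. It is an illustration under stated assumptions, not the instrument software's fitting routine: the function names and the rate constants used in the example are hypothetical.

```python
import math

def kd_from_rates(k_on, k_off):
    """Equilibrium dissociation constant K_D (M) from k_on (1/(M*s)) and k_off (1/s)."""
    return k_off / k_on

def association_response(t, conc, k_on, k_off, r_max):
    """1:1 model signal after t seconds of association at analyte concentration conc (M)."""
    k_obs = k_on * conc + k_off                      # observed rate constant
    r_eq = r_max * conc / (conc + k_off / k_on)      # plateau signal at this concentration
    return r_eq * (1.0 - math.exp(-k_obs * t))

def dissociation_response(t, r0, k_off):
    """1:1 model signal t seconds after switching to buffer, starting from signal r0."""
    return r0 * math.exp(-k_off * t)

# Hypothetical rate constants: k_on = 1e5 1/(M*s), k_off = 1e-3 1/s, so K_D is about 10 nM.
kd = kd_from_rates(1e5, 1e-3)
signal_300s = association_response(300, 50e-9, 1e5, 1e-3, r_max=1.0)
signal_after_400s = dissociation_response(400, signal_300s, 1e-3)
print(kd, signal_300s, signal_after_400s)
```

At an analyte concentration equal to K_D the plateau signal is half of r_max, which is a quick sanity check on any fit.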

Visualization of Core Workflows

Diagram 1: CAPE stakeholder workflow

Diagram 2: DMS experimental steps

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Featured CAPE Methodologies

| Item Name | Vendor Example | Function in Protocol |

|---|---|---|

| NEB 10-beta Competent E. coli | New England Biolabs | High-efficiency transformation for mutant library propagation. |

| Gibson Assembly Master Mix | New England Biolabs | Seamless, one-step cloning for constructing mutant plasmid libraries. |

| KAPA HiFi HotStart ReadyMix | Roche | High-fidelity PCR for NGS library preparation from DMS samples. |

| Streptavidin (SA) Biosensors | Sartorius (FortéBio) | For BLI assays; capture biotinylated antigen for kinetic measurements. |

| Series S Sensor Chip CM5 | Cytiva | Gold-standard surface for immobilizing ligands in Surface Plasmon Resonance (SPR). |

| HEK293F Cells | Thermo Fisher | Mammalian expression system for producing properly folded therapeutic protein variants. |

| HisTrap HP Column | Cytiva | Immobilized metal affinity chromatography for purifying His-tagged engineered proteins. |

| Protease Inhibitor Cocktail (EDTA-free) | MilliporeSigma | Prevents proteolytic degradation of protein samples during purification and assay. |

Inside the CAPE Framework: Methodologies, Tasks, and Real-World Applications

The Critical Assessment of Protein Engineering (CAPE) is a community-driven challenge designed to rigorously benchmark computational protein design and engineering methods. Framed within the broader thesis of advancing reproducible, blind-prediction research, CAPE establishes standardized experimental pipelines to objectively assess the state of the field. This whitepaper details the end-to-end pipeline from target selection through to blind prediction submission and experimental validation, providing a technical guide for researchers and drug development professionals engaged in high-stakes protein engineering.

The CAPE Pipeline: A Stage-by-Stage Technical Guide

Target Selection and Characterization

The initial phase involves selecting protein targets with defined engineering goals (e.g., thermostability, catalytic activity, binding affinity).

Protocol: Initial Target Characterization

- Gene Synthesis & Cloning: The wild-type gene is codon-optimized, synthesized, and cloned into an appropriate expression vector (e.g., pET series for E. coli).

- Expression & Purification: The construct is transformed into an expression host (e.g., BL21(DE3)), expression is induced with IPTG, and the cells are lysed. The protein is purified via affinity chromatography (e.g., His-tag using Ni-NTA resin), followed by size-exclusion chromatography (SEC).

- Baseline Assay: Establish a robust quantitative assay for the property of interest (e.g., fluorescence-based thermal shift for stability, enzyme kinetics for activity). All assays are performed in triplicate.

Dataset Curation and Public Release

A curated, high-quality experimental dataset for the wild-type and a limited set of variants is generated and publicly released to participants.

Table 1: Example CAPE Target Dataset (Hypothetical Lysozyme Stability)

| Variant ID | Mutations (Relative to WT) | Experimental ΔTm (°C) | Experimental ΔΔG (kcal/mol) | Measurement Error (±) |

|---|---|---|---|---|

| WT | - | 0.0 | 0.00 | 0.2 |

| CAPE_V001 | A12V, T45I | +3.5 | -0.48 | 0.3 |

| CAPE_V002 | K83R | -1.2 | +0.17 | 0.2 |

| CAPE_V003 | L102Q | -5.7 | +0.78 | 0.4 |

Blind Prediction Phase

Participants use the provided dataset to train or calibrate their methods before predicting the properties of a secret set of novel variants.

Protocol: Prediction Submission Format

- Predictions must follow a strict, machine-readable format (e.g., CSV).

- Required columns: `variant_id,predicted_ddG,confidence_estimate`.

- Submissions are timestamped and uploaded to a secure portal before the deadline.
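A pre-submission check of the CSV format might look like the sketch below. Only the three column names come from the text above; the specific rules enforced here (exact header order, numeric values, unique variant IDs) and the example variant ID `CAPE_V101` are assumptions made for illustration, not documented portal behavior.

```python
import csv
import io

REQUIRED_COLUMNS = ["variant_id", "predicted_ddG", "confidence_estimate"]

def validate_submission(csv_text):
    """Return a list of error strings; an empty list means the file passed."""
    errors = []
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != REQUIRED_COLUMNS:
        return [f"header must be exactly {','.join(REQUIRED_COLUMNS)}"]
    seen = set()
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        vid = row["variant_id"]
        if not vid:
            errors.append(f"line {line_no}: empty variant_id")
        elif vid in seen:
            errors.append(f"line {line_no}: duplicate variant_id {vid}")
        seen.add(vid)
        for col in REQUIRED_COLUMNS[1:]:
            try:
                float(row[col])  # a missing field is None and raises TypeError
            except (TypeError, ValueError):
                errors.append(f"line {line_no}: {col} is not a number")
    return errors

good = "variant_id,predicted_ddG,confidence_estimate\nCAPE_V101,-0.42,0.8\n"
print(validate_submission(good))  # prints []
```

Running such a check locally before upload avoids losing a submission slot to a formatting error.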

Experimental Validation and Assessment

The CAPE organizers experimentally test the secret variants. Participant predictions are evaluated against ground-truth data using predefined metrics.

Table 2: Standard CAPE Assessment Metrics

| Metric | Formula | Description | Ideal Value |

|---|---|---|---|

| Pearson's r | Cov(Pred, Exp) / (σ_Pred · σ_Exp) | Linear correlation | 1.0 |

| Spearman's ρ | 1 − 6∑d_i² / [n(n² − 1)] | Rank correlation (formula holds when there are no tied ranks) | 1.0 |

| Root Mean Square Error (RMSE) | √[∑(Pred_i − Exp_i)² / n] | Absolute error | 0.0 |

| Mean Absolute Error (MAE) | ∑⎮Pred_i − Exp_i⎮ / n | Average absolute deviation | 0.0 |

Visualizing the CAPE Workflow and Pathways

CAPE Experimental Pipeline Overview

Blind Prediction Validation Logic

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Reagents for CAPE-Style Protein Engineering Pipelines

| Item | Function in Pipeline | Example Product/Kit |

|---|---|---|

| Codon-Optimized Gene Fragments | Ensures high expression yields in the chosen host organism (e.g., E. coli). | Twist Bioscience Gene Fragments, IDT gBlocks Gene Fragments. |

| High-Efficiency Cloning Kit | For rapid and error-free assembly of variant libraries into expression vectors. | NEB HiFi DNA Assembly Master Mix, Gibson Assembly. |

| Competent Cells for Protein Expression | Robust, high-transformation-efficiency cells for recombinant protein production. | NEB BL21(DE3), Agilent XL10-Gold. |

| Affinity Purification Resin | One-step capture of tagged protein from cell lysate. | Cytiva HisTrap HP (Ni Sepharose), Capto LMM. |

| Size-Exclusion Chromatography (SEC) Column | Polishing step to separate monomers from aggregates and impurities. | Cytiva HiLoad 16/600 Superdex 75/200 pg. |

| Thermal Shift Dye | For high-throughput stability measurements (ΔTm) using real-time PCR instruments. | Thermo Fisher Protein Thermal Shift Dye. |

| Plate Reader with Temperature Control | Essential for running fluorescence- or absorbance-based activity/stability assays in 96- or 384-well format. | BioTek Synergy H1, BMG CLARIOstar. |

| Next-Generation Sequencing (NGS) Kit | For deep mutational scanning or analysis of variant libraries post-selection. | Illumina Nextera XT, MGI EasySeq. |

The Critical Assessment of Protein Engineering (CAPE) challenge is a community-wide initiative designed to rigorously benchmark computational methods for predicting protein properties. This whitepaper deconstructs the three core prediction tasks central to CAPE and modern therapeutic development: protein stability (ΔΔG), protein function (e.g., enzyme activity), and protein-protein/binding affinity (ΔG). Success in these tasks is pivotal for accelerating rational drug design and protein-based therapeutic engineering.

Core Prediction Tasks: Definitions & Quantitative Benchmarks

Protein Stability (ΔΔG)

The prediction of changes in folding free energy upon mutation (ΔΔG). This quantifies how a point mutation stabilizes or destabilizes a protein's native structure.

Table 1: Recent Benchmark Performance on Stability Prediction (ΔΔG in kcal/mol)

| Method (Model) | Test Dataset | Correlation (Pearson's r) | RMSE (kcal/mol) | Key Metric | Year |

|---|---|---|---|---|---|

| ProteinMPNN* | S669 | 0.48 | 1.37 | Pearson's r | 2022 |

| ESM-2 (Fine-tuned) | Ssym | 0.82 | 1.15 | Pearson's r | 2023 |

| MSA Transformer | Proteus | 0.65 | 1.81 | Pearson's r | 2022 |

| ThermoNet | S669 | 0.78 | 1.20 | Pearson's r | 2021 |

*Developed for sequence design; often used as a baseline for stability prediction.

Protein Function (Activity)

The prediction of quantitative functional metrics, such as enzyme catalytic efficiency (kcat/Km) or fluorescence intensity, from sequence or structure.

Table 2: Benchmark Performance on Function Prediction

| Method | Task / Dataset | Performance Metric | Result | Year |

|---|---|---|---|---|

| DeepSequence | Lactamase (TEM-1) Variants | Spearman's ρ | 0.73 | 2018 |

| TAPE (Transformer) | Fluorescence (AVGFP) | Spearman's ρ | 0.68 | 2019 |

| ESM-1v (Zero-shot) | Lactamase (TEM-1) | Top-1 Accuracy | 60.2% | 2021 |

| UniRep | Stability & Function | Average Spearman's ρ | 0.38 | 2019 |

Binding Affinity (ΔG)

The prediction of the strength of protein-protein or protein-ligand interactions, typically measured as the binding free energy (ΔG) or related terms (KD, IC50).

Table 3: Benchmark Performance on Binding Affinity Prediction

| Method | Interaction Type | Dataset | Pearson's r | RMSE (kcal/mol) |

|---|---|---|---|---|

| AlphaFold-Multimer | Protein-Protein | PDB | ~0.45* (pLDDT vs ΔG) | N/A |

| RosettaDDG | Protein-Protein | SKEMPI 2.0 | 0.52 | 2.4 |

| ESM-IF1 (Inverse Folding) | Protein-Protein | Docking Benchmark | Varies | N/A |

| EquiBind (Deep Learning) | Protein-Ligand | PDBBind | 0.67 (Docking Power) | N/A |

*pLDDT is a confidence metric, not a direct affinity predictor.

Experimental Protocols for Ground Truth Data

The performance of computational models in CAPE is evaluated against experimental data. Below are standard protocols for generating such data.

Protocol: Thermal Shift Assay for Stability (ΔΔG)

Purpose: To measure protein thermal stability (Tm) and calculate ΔΔG upon mutation.

- Sample Preparation: Purify wild-type and mutant proteins in identical buffer conditions (e.g., PBS, pH 7.4).

- Dye Addition: Mix protein sample with a fluorescent dye (e.g., SYPRO Orange) that binds to hydrophobic regions exposed upon unfolding.

- Thermal Ramp: Load samples into a real-time PCR instrument. Increase temperature from 25°C to 95°C at a rate of 1°C/min.

- Fluorescence Monitoring: Record fluorescence intensity continuously. The inflection point of the sigmoidal curve is the melting temperature (Tm).

- Data Analysis: Calculate ΔTm = Tm(mutant) - Tm(wild-type). Approximate ΔΔG using the Gibbs-Helmholtz relationship: ΔΔG ≈ ΔHm × (ΔTm / Tm), where ΔHm is the enthalpy of unfolding at the melting temperature and Tm is the wild-type melting temperature in kelvin.
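The ΔTm-to-ΔΔG approximation in the last step is easy to get wrong on units, so a small worked sketch may help. The function name and the example values (a wild-type Tm of 60 °C and an unfolding enthalpy of 100 kcal/mol) are assumptions for illustration only; ΔHm must come from a separate measurement or estimate.

```python
def ddg_from_tm_shift(tm_wt_c, tm_mut_c, dh_m_kcal):
    """Approximate ddG (kcal/mol) as dH_m * dTm / Tm, with Tm of the wild type in kelvin.

    With this formula a stabilizing mutation (higher Tm) gives a positive value,
    i.e. the free energy of unfolding increases. Under a folding free-energy
    convention, where stabilizing mutations have negative ddG, flip the sign.
    """
    tm_wt_k = tm_wt_c + 273.15       # convert wild-type Tm from Celsius to kelvin
    d_tm = tm_mut_c - tm_wt_c        # a temperature difference is the same in C and K
    return dh_m_kcal * d_tm / tm_wt_k

# Hypothetical example: Tm rises from 60.0 C to 63.5 C, dH_m = 100 kcal/mol.
print(round(ddg_from_tm_shift(60.0, 63.5, 100.0), 2))  # prints 1.05
```

The approximation assumes ΔHm is similar for wild type and mutant and that ΔTm is small relative to Tm, so it is best treated as a ranking aid rather than a precise thermodynamic measurement.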

Protocol: Enzyme Kinetic Assay for Function (kcat/Km)

Purpose: To determine catalytic efficiency as a functional readout.

- Reaction Setup: Prepare a series of substrate concentrations [S] in assay buffer.

- Initial Rate Measurement: Initiate reaction by adding a fixed, low concentration of enzyme. Monitor product formation spectrophotometrically or fluorometrically for the initial 5-10% of reaction completion.

- Michaelis-Menten Analysis: Plot initial velocity (v0) vs. [S]. Fit data to the equation: v0 = (Vmax * [S]) / (Km + [S]).

- Calculation: Derive Vmax and Km from the fit. The catalytic efficiency is kcat/Km, where kcat = Vmax / [E]total and [E]total is the total enzyme concentration in the assay.
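The analysis steps above can be sketched without external libraries. Note the substitution: instead of the nonlinear fit described in the protocol, this example uses the Hanes-Woolf linearization ([S]/v0 = [S]/Vmax + Km/Vmax) with ordinary least squares, which is simpler to show but weights noisy data differently. Function names, units, and the synthetic data are assumptions for illustration.

```python
def fit_michaelis_menten(s_values, v_values):
    """Estimate (Vmax, Km) from the Hanes-Woolf line [S]/v = [S]/Vmax + Km/Vmax."""
    ys = [s / v for s, v in zip(s_values, v_values)]
    n = len(s_values)
    mean_x = sum(s_values) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in s_values)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(s_values, ys))
    slope = sxy / sxx                      # equals 1 / Vmax
    intercept = mean_y - slope * mean_x    # equals Km / Vmax
    vmax = 1.0 / slope
    return vmax, intercept * vmax

def catalytic_efficiency(vmax, km, enzyme_conc):
    """kcat/Km, with kcat = Vmax / total enzyme concentration."""
    return (vmax / enzyme_conc) / km

# Noise-free synthetic data generated with Vmax = 10 and Km = 2 (arbitrary units).
s = [0.5, 1.0, 2.0, 4.0, 8.0]
v = [10.0 * x / (2.0 + x) for x in s]
vmax, km = fit_michaelis_menten(s, v)
print(round(vmax, 6), round(km, 6))
print(catalytic_efficiency(vmax, km, enzyme_conc=0.01))
```

For real assay data, a direct nonlinear least-squares fit of the Michaelis-Menten equation is generally preferred because linearizations distort the error structure.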

Protocol: Surface Plasmon Resonance (SPR) for Binding Affinity (KD)

Purpose: To measure real-time biomolecular interactions and determine equilibrium dissociation constant (KD).

- Ligand Immobilization: Covalently immobilize one binding partner (ligand) on a dextran-coated sensor chip.

- Analyte Flow: Flow the other partner (analyte) over the chip at a series of known concentrations in running buffer.

- Sensorgram Recording: Monitor the change in resonance units (RU) over time (association phase), then switch to buffer-only flow (dissociation phase).

- Global Fitting: Fit the collective sensorgrams to a 1:1 Langmuir binding model. The ratio of dissociation to association rate constants (kd/ka) yields the equilibrium dissociation constant KD.
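Because the prediction task is framed in terms of binding free energy while SPR reports KD, a conversion step is usually needed before comparing the two. The sketch below uses the standard relation ΔG = RT ln(KD / 1 M); the function names, the default temperature of 298.15 K, and the example KD values are assumptions for illustration.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)

def binding_free_energy(kd_molar, temp_k=298.15):
    """Standard binding free energy (kcal/mol) from K_D in molar units (1 M reference)."""
    return R_KCAL * temp_k * math.log(kd_molar)

def ddg_binding(kd_mutant, kd_wt, temp_k=298.15):
    """Change in binding free energy; positive values mean the mutant binds more weakly."""
    return R_KCAL * temp_k * math.log(kd_mutant / kd_wt)

# Hypothetical values: wild type K_D = 1 nM, mutant K_D = 10 nM.
print(round(binding_free_energy(1e-9), 2))   # prints -12.28
print(round(ddg_binding(1e-8, 1e-9), 2))     # prints 1.36
```

A tenfold change in KD corresponds to roughly 1.4 kcal/mol at room temperature, which is a useful yardstick when judging whether a predicted ΔΔG is within experimental resolution.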

Visualizing Relationships & Workflows

Title: CAPE Core Tasks and Engineering Pipeline

Title: CAPE Benchmarking Loop

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Reagents for Core Task Validation

| Item | Function/Application | Example Product/Kit |

|---|---|---|

| SYPRO Orange Dye | Fluorescent probe for thermal denaturation curves in Differential Scanning Fluorimetry (DSF). | Sigma-Aldrich S5692 |

| HisTrap HP Column | Immobilized metal affinity chromatography (IMAC) for purification of His-tagged protein variants. | Cytiva 17524801 |

| Protease Inhibitor Cocktail | Prevents proteolytic degradation during protein expression and purification. | Roche cOmplete 4693132001 |

| BIACORE Sensor Chip CM5 | Gold standard SPR chip for covalent ligand immobilization via amine coupling. | Cytiva 29104988 |

| Kinase-Glo Max Luminescence Kit | Homogeneous, luminescent assay for measuring kinase activity by quantifying ATP depletion. | Promega V6071 |

| Nano-Glo HiBiT Blotting System | High-sensitivity detection of protein expression and stability in lysates or gels. | Promega N2410 |

| Site-Directed Mutagenesis Kit | Rapid creation of point mutations for constructing variant libraries. | NEB E0554S (Q5) |

| Size-Exclusion Chromatography (SEC) Column | Polishing step to isolate monodisperse, properly folded protein. | Cytiva Superdex 75 Increase 10/300 GL |

| Fluorogenic Peptide Substrate | For continuous kinetic assays of protease activity (e.g., for NS3/4A, caspase-3). | Anaspec AS-26897 |

| Anti-His Tag Antibody (HRP) | Universal detection antibody for Western blot analysis of His-tagged constructs. | GenScript A00612 |

The Critical Assessment of Protein Engineering (CAPE) student challenge is a rigorous framework for evaluating advances in computational protein design and engineering. Within this research paradigm, the reliability of any predictive model or designed variant hinges on the quality of the underlying experimental structural data. This whitepaper details the creation and validation of a gold-standard backbone dataset, a foundational resource for training and benchmarking in CAPE and related fields. This backbone serves as the non-negotiable standard against which designed structures and engineered functions are judged, ensuring scientific rigor in computational drug development.

The Imperative for a Gold-Standard Dataset

Biased or noisy structural datasets propagate errors into machine learning models, leading to false positives in virtual screening and flawed protein designs. The gold-standard backbone dataset addresses this by enforcing stringent curation and validation criteria, focusing on high-resolution X-ray crystallographic data.

Table 1: Common Dataset Pitfalls vs. Gold-Standard Solutions

| Pitfall in Common Datasets | Consequence for CAPE Research | Gold-Standard Solution |

|---|---|---|

| Low-resolution structures (>2.5 Å) | Ambiguous backbone and side-chain conformations | Resolution cutoff ≤ 1.8 Å |

| Incomplete residues/missing loops | Poor modeling of local flexibility | Requires full backbone continuity for selected region |

| High B-factors (disorder) | Unreliable atomic coordinates | Average B-factor cutoff ≤ 40 Ų |

| Incorrect crystallographic refinement | Systematic model errors | Cross-validation with Rfree ≤ 0.25 |

| Redundancy (sequence identity >90%) | Overfitting of predictive models | Clustered at ≤30% sequence identity |

Core Curation Protocol

Source Data Acquisition

- Primary Source: The Protein Data Bank (PDB).

- Initial Query: Use advanced search for experimental method "X-RAY DIFFRACTION," resolution ≤ 1.8 Å, and deposition date within the last 10 years to reflect modern refinement practices.

- Pre-filtering: Download metadata and pre-filter using `pdb-tools` and in-house Python scripts to remove entries with:

- `HETATM` records for non-water molecules within 5 Å of the region of interest.

- Missing backbone atoms (N, CA, C, O) in any residue.

- Alternate conformations for backbone atoms.
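Two of the pre-filter criteria above (missing backbone atoms and backbone alternate conformations) can be checked directly from the fixed-column ATOM records of a PDB file. The sketch below is a plain-Python stand-in for the in-house scripts mentioned, not the `pdb-tools` API; the function names and the three-residue demo structure are hypothetical. It only inspects residues that appear in the file, so chain gaps where whole residues are absent need a separate check.

```python
BACKBONE = {"N", "CA", "C", "O"}

def backbone_issues(pdb_text):
    """Return (residues missing backbone atoms, residues with backbone altlocs)."""
    atoms = {}      # residue key -> backbone atom names seen
    altloc = set()  # residue keys with an alternate-location flag on a backbone atom
    for line in pdb_text.splitlines():
        if not line.startswith("ATOM"):
            continue
        name = line[12:16].strip()  # columns 13-16: atom name
        # key = (chain ID, residue number plus insertion code, residue name)
        key = (line[21], line[22:27].strip(), line[17:20].strip())
        found = atoms.setdefault(key, set())
        if name in BACKBONE:
            found.add(name)
            if line[16] != " ":     # column 17: alternate-location indicator
                altloc.add(key)
    missing = [key for key, found in atoms.items() if found != BACKBONE]
    return missing, sorted(altloc)

def make_atom(serial, name, res, chain, seq, alt=" "):
    """Build a minimal fixed-column ATOM record (zeroed coordinates) for the demo."""
    return f"ATOM  {serial:>5}  {name:<3}{alt}{res:>3} {chain}{seq:>4} " + "   0.000" * 4

records = [make_atom(i + 1, n, "ALA", "A", 1) for i, n in enumerate(["N", "CA", "C", "O"])]
records += [make_atom(i + 5, n, "GLY", "A", 2) for i, n in enumerate(["N", "CA", "C"])]
records += [make_atom(8, "N", "SER", "A", 3), make_atom(9, "CA", "SER", "A", 3, alt="A"),
            make_atom(10, "C", "SER", "A", 3), make_atom(11, "O", "SER", "A", 3)]
missing, alt = backbone_issues("\n".join(records))
print(missing)  # prints [('A', '2', 'GLY')]
print(alt)      # prints [('A', '3', 'SER')]
```

An entry would be dropped from the candidate set if either returned list is non-empty for the region of interest.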

Hierarchical Filtering Workflow

The following diagram outlines the multi-stage curation pipeline.

Title: Gold-Standard Dataset Curation Workflow

Validation Suite

Each surviving entry undergoes automated and manual validation.

- Geometry Validation: Run `MolProbity` or `PDBValidationService`. Accept only structures with:

- Ramachandran outliers < 0.5%.

- Rotamer outliers < 1.0%.

- Clashscore percentile ≥ 70th.

- Electron Density Validation: Use `EDM` (Electron Density Map) analysis from the `CCP4` suite. Calculate the real-space correlation coefficient (RSCC) for each residue backbone. Manually inspect any residue with RSCC < 0.8 in UCSF ChimeraX to confirm fit.

Table 2: Quantitative Validation Thresholds

| Validation Metric | Tool Used | Acceptance Threshold |

|---|---|---|

| Ramachandran Outliers | MolProbity | < 0.5% |

| Rotamer Outliers | MolProbity | < 1.0% |

| Clashscore Percentile | MolProbity | ≥ 70 |

| Real-Space CC (Backbone) | EDM/ChimeraX | ≥ 0.8 |

| Side-Chain CC | EDM/ChimeraX | ≥ 0.7 |
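The acceptance thresholds in the table lend themselves to a simple automated gate. The sketch below encodes them as data and reports which checks an entry fails; the metric key names and the example values are hypothetical, and in practice the numbers would be parsed from the validation tools' reports.

```python
# Thresholds from the validation table: (comparison, limit).
THRESHOLDS = {
    "ramachandran_outliers_pct": ("<", 0.5),
    "rotamer_outliers_pct": ("<", 1.0),
    "clashscore_percentile": (">=", 70),
    "backbone_rscc": (">=", 0.8),
    "sidechain_cc": (">=", 0.7),
}

def failed_checks(metrics):
    """Return the names of all thresholds that the given entry does not meet."""
    failures = []
    for name, (op, limit) in THRESHOLDS.items():
        value = metrics[name]
        passed = value < limit if op == "<" else value >= limit
        if not passed:
            failures.append(name)
    return failures

# Hypothetical validation results for two entries.
entry_ok = {"ramachandran_outliers_pct": 0.2, "rotamer_outliers_pct": 0.8,
            "clashscore_percentile": 85, "backbone_rscc": 0.93, "sidechain_cc": 0.75}
entry_bad = dict(entry_ok, ramachandran_outliers_pct=0.5, clashscore_percentile=69)
print(failed_checks(entry_ok))   # prints []
print(failed_checks(entry_bad))  # prints ['ramachandran_outliers_pct', 'clashscore_percentile']
```

Keeping the thresholds in one table-like structure makes it easy to audit the code against the published criteria and to tighten a cutoff later.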

Application in CAPE: The Validation Loop

The curated dataset is not an endpoint. It integrates into the CAPE challenge cycle as the benchmark for computational predictions.

Title: CAPE Research Validation Cycle

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Crystallographic Validation

| Tool / Reagent | Primary Function | Application in Gold-Standard Curation |

|---|---|---|

| CCP4 Software Suite | Comprehensive crystallography package. | Processing, refinement, and map calculation for validation. |

| MolProbity / PDB-REDO | Structure validation and re-refinement server. | Assessing stereochemical quality and identifying outliers. |

| UCSF ChimeraX | Molecular visualization and analysis. | Manual inspection of electron density fit and model quality. |

| CD-HIT | Sequence clustering tool. | Removing redundancy to ensure dataset diversity. |

| pdb-tools | Python library for PDB file manipulation. | Automating filtering steps (e.g., removing ligands, checking completeness). |

| PyMOL | Molecular graphics system. | Generating publication-quality images of validated structures. |

| REFMAC5 / phenix.refine | Crystallographic refinement programs. | (For experimentalists) Final refinement of structures intended for deposition. |

The Critical Assessment of Protein Engineering (CAPE) is a community-wide benchmarking challenge designed to rigorously evaluate computational methods for predicting protein stability, function, and interactions. Framed within a broader thesis on advancing predictive biophysics, CAPE provides a standardized framework to move beyond anecdotal success and quantify the real-world applicability of tools like AlphaFold2, Rosetta, and deep mutational scanning models. For drug discovery, the transition from benchmark performance to a robust wet-lab workflow is non-trivial. This guide details how to translate CAPE-derived insights and top-performing methodologies into actionable, high-confidence experiments for lead optimization, antibody engineering, and target vulnerability assessment.

The following tables summarize key quantitative findings from recent CAPE rounds, highlighting top-performing methodologies for critical tasks in therapeutic development.

Table 1: Performance Summary of Top CAPE Methods for Stability Prediction (ΔΔG)

| Method Name | Core Algorithm | RMSE (kcal/mol) | Pearson's r | Spearman's ρ | Best Use Case |

|---|---|---|---|---|---|

| ProteinMPNN | Graph Neural Network | 0.78 | 0.81 | 0.79 | Scaffolding & backbone design |

| RFdiffusion | Diffusion Model + Rosetta | 0.82 | 0.79 | 0.77 | De novo binder design |

| AlphaFold2-Multimer | Transformer + Evoformer | 1.15 | 0.72 | 0.69 | Complex interface stability |

| RosettaDDG | Physical Energy Function | 1.05 | 0.75 | 0.73 | Single-point mutation screening |

| ESM-IF1 | Inverse Folding Language Model | 0.95 | 0.78 | 0.76 | Sequence recovery & variant effect |

Table 2: CAPE Challenge Metrics for Protein-Protein Interaction (PPI) Design

| Method Category | Success Rate* (%) | Affinity Improvement (Fold) | Specificity Score | Computational Cost (GPU-hr) |

|---|---|---|---|---|

| Sequence-Based ML | 42 | 5-50 | 0.65 | 2-10 |

| Structure-Based Physical | 38 | 3-20 | 0.81 | 50-200 |

| Hybrid (ML + Physics) | 55 | 10-100 | 0.88 | 20-100 |

| Generative Diffusion | 48 | 5-80 | 0.75 | 10-50 |

*Success Rate: Fraction of designs exhibiting measurable binding in primary assay.

Core Experimental Protocols for Validating Computational Designs

Protocol A: High-Throughput Stability & Expression Screening

Objective: Validate predicted stability and soluble expression of designed protein variants (e.g., antibodies, enzymes).

Materials: See "The Scientist's Toolkit" below.

Procedure:

- Gene Synthesis & Cloning: Encode designed variants via array-based oligo synthesis. Clone into a T7-promoter vector (e.g., pET series) using Golden Gate assembly for high-throughput.

- Microscale Expression: Transform into E. coli BL21(DE3), or transfect into Expi293F cells for mammalian expression. Express in 1 mL cultures in deep-well blocks for 24 h at 30°C (E. coli, after induction) or per the system protocol.

- Lysis & Clarification: Lyse bacterial cells via sonication or mammalian cells via detergent. Clarify lysates by centrifugation (15,000 x g, 20 min).

- His-Tag Purification & Analysis: Use 96-well Ni-NTA plates. Apply clarified lysate, wash (20 mM Imidazole), elute (250 mM Imidazole). Analyze via:

- SDS-PAGE: For expression level and size.

- Differential Scanning Fluorimetry (nanoDSF): Load 10 µL of eluate into a capillary. Ramp temperature from 20°C to 95°C at 1°C/min. Record intrinsic fluorescence (350/330 nm emission ratio). Tm is the inflection point of the ratio curve.

- Data Correlation: Plot experimental Tm vs. predicted ΔΔG. Establish correlation threshold for model trust.

Protocol B: Surface Plasmon Resonance (SPR) for Affinity Validation

Objective: Quantify binding kinetics (ka, kd, KD) of designed binders against target antigen.

Procedure:

- Immobilization: Dilute antigen to 10 µg/mL in sodium acetate buffer (pH 5.0). Inject over a CM5 sensor chip to achieve 50-100 RU coupling via standard amine chemistry.

- Kinetic Series: Serially dilute purified designer protein from 100 nM to 0.78 nM in HBS-EP+ buffer. Use a multi-cycle kinetics program.

- Association/Dissociation: Inject sample for 180 s association and 600 s dissociation at 30 µL/min.

- Analysis: Double-reference the sensorgrams. Fit to a 1:1 Langmuir binding model. Compare the obtained KD to computational affinity predictions (e.g., from Rosetta or AlphaFold confidence scores).
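Planning the kinetic series is simple arithmetic, sketched below. The protocol gives only the endpoints (100 nM to 0.78 nM); the two-fold step and eight points used here are assumptions that happen to reproduce those endpoints. The second helper applies the commonly used estimate of the maximal analyte response from the immobilization level and the molecular-weight ratio; the molecular weights in the example are hypothetical.

```python
def dilution_series(top_nm, n_points, fold=2.0):
    """Concentrations (nM) from top_nm downward, dividing by `fold` at each step."""
    return [top_nm / fold ** i for i in range(n_points)]

def theoretical_rmax(ligand_ru, mw_analyte, mw_ligand, stoichiometry=1):
    """Expected maximal analyte response (RU) for a given ligand immobilization level."""
    return ligand_ru * (mw_analyte / mw_ligand) * stoichiometry

series = dilution_series(100.0, 8)
print(series[0], series[-1])  # prints 100.0 0.78125

# Hypothetical: 100 RU of a 50 kDa antigen, 25 kDa designed binder, 1:1 binding.
print(theoretical_rmax(100.0, 25_000, 50_000))  # prints 50.0
```

If the fitted Rmax is far above this estimate, non-specific binding or a stoichiometry other than 1:1 is worth ruling out before trusting the KD.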

Protocol C: Deep Mutational Scanning (DMS) for Functional Validation

Objective: Experimentally map sequence-function landscape to benchmark computational predictions.

Procedure:

- Library Construction: Generate a mutant library of the target protein region, either by saturation mutagenesis using oligo pool synthesis (systematic coverage of every position) or by error-prone PCR (random coverage).

- Functional Selection: Clone library into a phage or yeast display vector. Perform 2-3 rounds of selection under stringent conditions (e.g., low antigen concentration, competitive elution).

- NGS Sequencing: Pre- and post-selection, isolate plasmid DNA and amplify the variant region for Illumina sequencing.

- Enrichment Score Calculation: For each variant, compute enrichment as log2((count_post + pseudocount) / (count_pre + pseudocount)). Correlate enrichment scores with CAPE model predictions (e.g., ESM-1v variant effect scores).

Visualizing Workflows and Pathways

Diagram 1: CAPE-to-Bench Translation Workflow

Diagram 2: Key Protein Engineering Signaling Pathway

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Reagent Solutions for CAPE-Inspired Workflows

| Item | Function in Workflow | Example Product/Kit | Key Consideration |

|---|---|---|---|

| High-Throughput Cloning System | Rapid assembly of variant libraries | NEB Golden Gate Assembly Kit (BsaI-HFv2) | Ensures high-fidelity, scarless assembly for 96+ variants. |

| Mammalian Transient Expression System | Production of post-translationally modified proteins (e.g., antibodies). | Expi293F System (Thermo Fisher) | Essential for correct folding and glycosylation of therapeutic proteins. |

| Nano Differential Scanning Fluorimeter (nanoDSF) | Label-free measurement of protein thermal stability (Tm). | Prometheus NT.48 (NanoTemper) | Requires only 10 µL of sample, ideal for low-yield designs. |

| SPR Sensor Chip | Immobilization of target antigen for kinetic analysis. | Series S Sensor Chip CM5 (Cytiva) | Standard chip for amine coupling; ensure target is >90% pure. |

| Yeast Display Vector | Phenotypic screening of designed binders. | pYD1 Vector (Thermo Fisher) | Enables linking genotype to phenotype for DMS. |

| Next-Gen Sequencing Kit | Sequencing variant libraries pre- and post-selection. | Illumina Nextera XT DNA Library Prep | Critical for deep coverage in DMS experiments. |

| Stability Buffer Screen | Optimize formulation for stable proteins. | Hampton Research PreCrystallization Suite | Identifies conditions that maximize shelf-life of leads. |

Navigating CAPE Pitfalls: Troubleshooting Predictions and Optimizing Tool Performance

Within the framework of the Critical Assessment of Protein Engineering (CAPE) initiative, benchmarking predictive algorithms against real-world experimental data is paramount. This whitepaper provides an in-depth technical analysis of the predominant failure modes encountered when computational predictions, especially in protein structure and function, are experimentally validated. Understanding these discrepancies is crucial for researchers, scientists, and drug development professionals aiming to improve predictive models.

The following table consolidates common failure modes identified through CAPE-related challenges and recent literature.

Table 1: Quantitative Summary of Common Computational Prediction Failure Modes

| Failure Mode Category | Typical Error Magnitude / Frequency (%) | Primary Contributing Factors | Impact on Drug Development |

|---|---|---|---|

| Static Structure Misprediction | RMSD >5Å in 15-30% of orphan targets | Poor homology, disordered regions, multimer state errors | Off-target binding, failed lead optimization |

| Dynamic/Ensemble State Failure | ΔΔG >2 kcal/mol in ~40% of affinity predictions | Neglect of conformational entropy, solvent dynamics | Inaccurate efficacy and pharmacokinetic profiles |

| Solvation & Electrostatic Errors | pKa shift >2 units in buried residues | Continuum model limitations, explicit ion neglect | Altered binding specificity, aggregation propensity |

| Multimeric Interface Failure | Interface RMSD >3Å in ~25% of complexes | Allosteric coupling, flexible docking oversimplification | Incorrect assessment of protein-protein interaction targets |

| Pathogenicity/Variant Effect False Negatives | False Negative Rate: 10-20% for destabilizing variants | Epistasis, chaperone interaction neglect | Overlooking disease-linked mutations |

Experimental Protocols for Validating Predictions

Detailed methodologies are required to diagnose these failure modes. The following protocols are central to CAPE assessment workflows.

Protocol 1: Differential Scanning Fluorimetry (DSF) for Stability Validation

- Objective: Experimentally measure protein thermal stability (Tm) to compare against predicted ΔΔG of folding for point variants.

- Materials: Purified target protein, fluorescent dye (e.g., SYPRO Orange), real-time PCR instrument.

- Procedure:

- Prepare a 20 µL reaction mix containing 5 µM protein, 5X SYPRO Orange dye, and assay buffer.

- Perform a thermal ramp from 25°C to 95°C at a rate of 1°C/min with continuous fluorescence monitoring (excitation/emission filters suitable for the dye).

- Record fluorescence intensity as a function of temperature.

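- Derive Tm from the peak of the first derivative of the melt curve. Compare experimental Tm shifts (ΔTm) to computational ΔΔG predictions by converting with the approximation ΔΔG ≈ ΔHm × ΔTm / Tm (Tm in kelvin), which for typical unfolding enthalpies corresponds to a few tenths of a kcal/mol per °C.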
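The first-derivative step can be sketched as follows. This is a minimal illustration on a synthetic, noise-free melt curve; the function name and the curve parameters are assumptions. Real DSF traces usually need smoothing first, and the search should be restricted to the unfolding transition because fluorescence often falls again after the peak as the protein aggregates.

```python
import math

def melting_temperature(temps, fluorescence):
    """Tm as the midpoint of the interval with the steepest fluorescence increase."""
    best_slope, tm = float("-inf"), None
    for i in range(len(temps) - 1):
        slope = (fluorescence[i + 1] - fluorescence[i]) / (temps[i + 1] - temps[i])
        if slope > best_slope:
            best_slope = slope
            tm = (temps[i] + temps[i + 1]) / 2
    return tm

def synthetic_melt_curve(temps, tm, width=2.0):
    """Idealized sigmoidal unfolding curve centered on tm (for demonstration only)."""
    return [1.0 / (1.0 + math.exp(-(t - tm) / width)) for t in temps]

temps = [25.0 + i for i in range(71)]           # 25 C to 95 C in 1 C steps
wt_curve = synthetic_melt_curve(temps, 60.5)
mut_curve = synthetic_melt_curve(temps, 64.5)
delta_tm = melting_temperature(temps, mut_curve) - melting_temperature(temps, wt_curve)
print(melting_temperature(temps, wt_curve), delta_tm)  # prints 60.5 4.0
```

With 1°C sampling the estimate is only resolved to half a degree; interpolating around the derivative peak or fitting a Boltzmann sigmoid gives finer resolution.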

Protocol 2: Surface Plasmon Resonance (SPR) for Binding Kinetics

- Objective: Obtain precise kinetic parameters (ka, kd, KD) to challenge computational affinity (ΔG) and binding interface predictions.

- Materials: SPR instrument, CM5 sensor chip, ligand protein, analyte, amine-coupling reagents.

- Procedure:

- Immobilize the ligand protein on a CM5 chip via standard amine-coupling chemistry to achieve a response unit (RU) signal appropriate for analyte binding.

- Flow analyte at a series of concentrations (e.g., 0.5 nM to 1 µM) in HBS-EP buffer at a constant flow rate (e.g., 30 µL/min).

- Monitor association and dissociation phases in real-time.

- Fit sensorgrams to a 1:1 Langmuir binding model (or other appropriate model) to extract ka (association rate) and kd (dissociation rate). Calculate KD = kd/ka.

Visualization of Key Concepts

Diagram Title: CAPE Prediction-Validation Iterative Cycle

Diagram Title: Hierarchy of Prediction Failure Sources

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents and Materials for Validating Computational Predictions

| Item | Function in Validation | Example Product/Catalog |

|---|---|---|

| Stability Dye | Binds hydrophobic patches exposed during protein denaturation; reports thermal stability in DSF. | SYPRO Orange Protein Gel Stain (Invitrogen S6650) |

| Biosensor Chip | Provides a dextran matrix surface for covalent ligand immobilization in SPR kinetics. | Series S Sensor Chip CM5 (Cytiva 29149603) |

| Size-Exclusion Chromatography (SEC) Column | Assesses protein monodispersity and oligomeric state, critical for validating multimer predictions. | Superdex 200 Increase 10/300 GL (Cytiva 28990944) |

| Fluorophore Conjugation Kit | Labels proteins for fluorescence-based assays (e.g., anisotropy) to measure binding. | Alexa Fluor 647 Microscale Protein Labeling Kit (Invitrogen A30009) |

| Cryo-EM Grids | High-quality support film for structural validation via cryo-electron microscopy. | Quantifoil R 1.2/1.3 Au 300 mesh (Quantifoil 31021) |

| Deep Sequencing Kit | Enables massively parallel variant functional assessment to benchmark pathogenicity predictors. | Illumina NextSeq 1000/2000 P2 Reagents (20040561) |

Systematic analysis of failure modes, as championed by the CAPE framework, is essential for advancing computational protein engineering. By rigorously comparing predictions against experimental data using standardized protocols and targeted reagents, the field can iteratively refine models, ultimately accelerating reliable drug discovery and development.

Addressing Data Bias and Generalization Challenges in Training Sets

The Critical Assessment of Protein Engineering (CAPE) serves as a rigorous benchmarking framework for evaluating computational methods in protein design and optimization. A central, recurring challenge for participants is the development of models that perform robustly on novel, unseen protein sequences or functions, moving beyond overfitting to historical, biased datasets. This guide provides a technical roadmap for identifying, quantifying, and mitigating data bias to improve model generalization, directly applicable to CAPE challenges and broader therapeutic protein development.

Quantifying Data Bias in Protein Sequence and Fitness Landscapes

Bias in training sets can stem from experimental convenience (e.g., over-representation of soluble proteins, certain folds, or mutation types), historical research focus, or sequencing artifacts. The metrics in Table 1 quantify these biases and should be calculated before model training.

Table 1: Key Metrics for Quantifying Data Bias in Protein Training Sets

| Metric | Calculation | Interpretation | CAPE Challenge Relevance |

|---|---|---|---|

| Sequence Identity Clustering | Percentage of sequence pairs with identity >80%. | High values indicate redundancy; models may memorize rather than learn generalizable features. | Assesses if the provided training data is sufficiently diverse for the prediction task. |

| Fold/Function Distribution | Shannon entropy or Gini index across annotated folds or GO terms. | Low entropy indicates over-representation of certain structural/functional classes. | Predictions for underrepresented folds/functions will be unreliable. |

| Mutational Skew | χ² test between observed mutation frequency (e.g., polar to charged) and a background model (e.g., from multiple sequence alignments). | Identifies non-random experimental biases in mutagenesis datasets. | Critical for fitness prediction models where training data is from directed evolution libraries. |

| Experimental Noise Floor | Variance in fitness/activity scores for identical or nearly identical constructs across different assays/labs. | Establishes the lower limit of predictable performance. | Helps separate true generalization error from irreducible experimental noise. |
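The four metrics in Table 1 can be prototyped with the Python standard library. This is a minimal sketch under stated assumptions: sequences are pre-aligned and of equal length (production pipelines would typically use a dedicated clustering tool), the χ² function returns only the test statistic, and all function names and toy inputs are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations
from statistics import variance

def fraction_redundant_pairs(aligned_seqs, threshold=0.80):
    """Fraction of sequence pairs with identity above `threshold`.
    Assumes equal-length, pre-aligned sequences."""
    def identity(a, b):
        return sum(x == y for x, y in zip(a, b)) / len(a)
    pairs = list(combinations(aligned_seqs, 2))
    return sum(identity(a, b) > threshold for a, b in pairs) / len(pairs)

def shannon_entropy(labels):
    """Shannon entropy (bits) of a list of fold or GO-term annotations."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def chi_square_statistic(observed_counts, background_freqs):
    """Chi-square statistic of observed mutation counts vs. a background model."""
    total = sum(observed_counts.values())
    stat = 0.0
    for category, freq in background_freqs.items():
        expected = total * freq
        stat += (observed_counts.get(category, 0) - expected) ** 2 / expected
    return stat

# Toy inputs, for illustration only.
seqs = ["ACDEFGHIKL", "ACDEFGHIKV", "MNPQRSTVWY"]
redundancy = fraction_redundant_pairs(seqs)                        # 1 of 3 pairs
fold_entropy = shannon_entropy(["TIM", "TIM", "TIM", "Rossmann"])
chi2 = chi_square_statistic({"polar->charged": 70, "other": 30},
                            {"polar->charged": 0.5, "other": 0.5})
noise_floor = variance([1.0, 1.2, 0.8])   # replicate scores for one construct
print(redundancy, fold_entropy, chi2, noise_floor)
```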

Methodologies for Bias Mitigation and Improved Generalization

Protocol for Generating a Balanced Training Set via Strategic Subsampling

Objective: Create a training set that maximizes diversity and minimizes bias towards over-represented subgroups.

- Featurization: Represent each protein sequence in the full dataset using a learned embedding (e.g., from ESM-2) or a set of physicochemical descriptors.

- Clustering: Perform dimensionality reduction (UMAP, t-SNE) followed by density-based clustering (HDBSCAN) to identify natural groups in the data.

- Stratified Sampling: Within each cluster, randomly sample a fixed number of instances (e.g., n=50). For very small clusters, apply oversampling with synthetic data (see the augmentation protocol below).

- Validation Split: Hold out entire clusters (not random points) for validation/testing to rigorously assess generalization to novel families.
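The sampling and split steps above can be sketched as follows, assuming cluster labels have already been produced by the featurization and clustering steps. The function `subsample_and_split` and its parameters are hypothetical; oversampling of small clusters is left out, so small clusters simply contribute all of their members.

```python
import random
from collections import defaultdict

def subsample_and_split(cluster_labels, per_cluster=50, holdout_fraction=0.2, seed=0):
    """Cap each training cluster at `per_cluster` items and hold out whole clusters."""
    rng = random.Random(seed)
    by_cluster = defaultdict(list)
    for idx, label in enumerate(cluster_labels):
        by_cluster[label].append(idx)

    clusters = sorted(by_cluster)
    rng.shuffle(clusters)
    n_holdout = max(1, round(holdout_fraction * len(clusters)))
    holdout_clusters = set(clusters[:n_holdout])

    train, test = [], []
    for label, members in by_cluster.items():
        if label in holdout_clusters:
            test.extend(members)              # entire cluster goes to the test set
        else:
            k = min(per_cluster, len(members))
            train.extend(rng.sample(members, k))
    return sorted(train), sorted(test), holdout_clusters

# Toy cluster assignment with strongly unequal cluster sizes.
labels = [0] * 100 + [1] * 10 + [2] * 30 + [3] * 5 + [4] * 60
train_idx, test_idx, held_out = subsample_and_split(labels, per_cluster=20)
print(len(train_idx), len(test_idx), held_out)
```

Holding out whole clusters means that no test sequence shares a cluster with any training sequence, which is the property the validation split is meant to guarantee.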

Protocol for Augmenting Data with In Silico Mutagenesis and Noise Injection

Objective: Expand and diversify training data to cover a broader region of sequence space.

- Probabilistic Generation: For a given wild-type sequence, use a protein language model (pLM) to generate plausible mutant sequences by sampling from the conditional probability distribution at each position.

- Fitness Imputation: Predict fitness scores for generated sequences using an initial model trained on real data. Apply a conservative threshold to filter out low-confidence predictions.

- Noise Injection: To improve model robustness, add zero-mean Gaussian noise (ε ~ N(0, σ²)) to training labels, where σ is derived from the experimental noise floor (Table 1).

- Adversarial Validation: Train a classifier to distinguish between real and generated data. If classification is trivial, the augmentation strategy is failing; iterate on generation parameters.
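The generation and noise-injection steps can be illustrated with a small sketch. It assumes the per-position amino-acid probabilities have already been obtained from a protein language model; here a uniform distribution stands in for that output, and `sample_mutant` and `inject_label_noise` are hypothetical helper names. Fitness imputation and adversarial validation are not covered.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def sample_mutant(wild_type, position_probs, n_mutations=2, rng=None):
    """Mutate `n_mutations` random positions by sampling from per-position
    probabilities (dicts of amino acid -> probability), excluding the
    current residue so that every chosen position actually changes."""
    rng = rng or random.Random()
    seq = list(wild_type)
    for pos in rng.sample(range(len(seq)), n_mutations):
        candidates = [aa for aa in AMINO_ACIDS if aa != seq[pos]]
        weights = [position_probs[pos].get(aa, 0.0) for aa in candidates]
        seq[pos] = rng.choices(candidates, weights=weights, k=1)[0]
    return "".join(seq)

def inject_label_noise(labels, sigma, rng=None):
    """Add zero-mean Gaussian noise with standard deviation `sigma` to labels."""
    rng = rng or random.Random()
    return [y + rng.gauss(0.0, sigma) for y in labels]

rng = random.Random(0)
wild_type = "MKTAYIAKQR"                              # toy sequence
uniform = {aa: 1 / 20 for aa in AMINO_ACIDS}          # placeholder for pLM output
position_probs = [uniform] * len(wild_type)

mutant = sample_mutant(wild_type, position_probs, n_mutations=2, rng=rng)
noisy_labels = inject_label_noise([0.5, 1.0, 1.5], sigma=0.2, rng=rng)
print(mutant, noisy_labels)
```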

Protocol for Debiasing Model Training with Gradient Balancing

Objective: Adjust the learning process to reduce influence from biased data subgroups.

- Identify Subgroups: Annotate training examples with subgroup labels (e.g., fold class, high/low sequence density region).

- Monitor Gradient Norms: During training, track the average L2 norm of gradients contributed by each subgroup per mini-batch.

- Apply Balancing: Scale the loss for examples from each subgroup in inverse proportion to that subgroup's average gradient norm, so that no single subgroup dominates the parameter updates.

- Validate: Performance on the hold-out clusters (from the subsampling protocol above) should improve, especially for underrepresented groups.
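The balancing rule can be made concrete with a toy linear model and squared-error loss, for which the per-example gradient norm has a closed form. This is only a sketch of the weighting arithmetic under those assumptions; the function names are hypothetical, and a deep-learning framework would obtain per-subgroup gradient norms differently.

```python
import math
from collections import defaultdict

def per_example_grad_norm(w, x, y):
    """L2 norm of d/dw (w.x - y)^2 = 2 * (w.x - y) * x for one example."""
    err = sum(wi * xi for wi, xi in zip(w, x)) - y
    return 2.0 * abs(err) * math.sqrt(sum(xi * xi for xi in x))

def subgroup_loss_weights(w, X, Y, groups, eps=1e-8):
    """Loss weight per subgroup, inversely proportional to its mean gradient
    norm and rescaled so that the weights average to 1 across subgroups."""
    norms = defaultdict(list)
    for x, y, g in zip(X, Y, groups):
        norms[g].append(per_example_grad_norm(w, x, y))
    raw = {g: 1.0 / (sum(v) / len(v) + eps) for g, v in norms.items()}
    mean_raw = sum(raw.values()) / len(raw)
    return {g: r / mean_raw for g, r in raw.items()}

# Toy data: subgroup "b" produces gradients twice as large as subgroup "a".
w = [0.0, 0.0]
X = [[1.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
Y = [1.0, 1.0, 1.0]
groups = ["a", "a", "b"]

weights = subgroup_loss_weights(w, X, Y, groups)
print(weights)  # subgroup "a" receives twice the weight of subgroup "b"
```

In a training loop these weights would be recomputed periodically, since gradient norms change as the model fits each subgroup.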

(Diagram Title: Bias Mitigation Workflow for CAPE)

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Bias-Aware Protein Engineering Research

| Item / Solution | Function in Addressing Bias & Generalization |

|---|---|

| Protein Language Models (e.g., ESM-3, ProtT5) | Provide foundational, unsupervised sequence representations that capture evolutionary constraints, used for featurization, clustering, and in silico data augmentation. |

| Directed Evolution Phage/Yeast Display Libraries (e.g., NEB Turbo, Twist Bioscience) | Generate large, diverse experimental fitness datasets. Careful library design (using pLMs) can counteract mutational skew bias. |

| High-Throughput Sequencing (Illumina, PacBio) | Enables deep mutational scanning (DMS) to generate comprehensive variant fitness maps, reducing coverage bias. |

| Stability & Solubility Assays (e.g., Thermofluor, AUC, SEC-MALS) | Provide orthogonal fitness metrics beyond binding affinity, expanding the feature space and reducing functional annotation bias. |

| Benchmark Datasets (e.g., ProteinGym, TAPE) | Curated, split-by-sequence-identity datasets for fair evaluation of generalization, directly analogous to CAPE challenge data. |

| Adversarial Validation Scripts (Custom Python) | Code to test for distributional shift between training and test sets, a key indicator of latent bias. |

Success in the CAPE challenge and in real-world protein engineering hinges on models that generalize beyond the biases of their training data. By rigorously applying the quantification metrics, experimental protocols, and toolkits outlined herein, researchers can build more robust, reliable, and equitable predictive systems, accelerating the discovery of novel therapeutic and industrial proteins.

Optimizing Algorithm Parameters for Specific Protein Engineering Objectives

The Critical Assessment of Protein Engineering (CAPE) student challenge is a community-driven initiative designed to benchmark computational protocols for protein design and optimization. Within this framework, the precise tuning of algorithm parameters is not merely an IT task but a central scientific endeavor that directly dictates experimental success. This guide details the systematic optimization of parameters for key algorithms, aligning them with specific, measurable protein engineering objectives such as thermostability, binding affinity, and catalytic efficiency.

Core Algorithm Parameter Landscape

The efficacy of computational protein design hinges on the energy function, search algorithm, and their associated parameters. The table below summarizes critical parameters for common algorithms and their primary impact.

Table 1: Key Algorithm Parameters and Their Optimization Objectives

| Algorithm/Module | Critical Parameter | Typical Range | Primary Objective Influence | Optimization Tip |

|---|---|---|---|---|

| Rosetta Energy Function | fa_atr (attractive LJ) weight | 0.80 - 1.20 | Stability, Packing | Increase for core stabilization. |

| Rosetta Energy Function | fa_rep (repulsive LJ) weight | 0.40 - 0.80 | Specificity, Solubility | Decrease to allow closer packing. |

| Rosetta Energy Function | hbond_sr_bb weight | 1.0 - 2.0 | Secondary Structure Fidelity | Increase for β-sheet/helix design. |

| Foldit (K* / Rosetta) | spa_stiff (Backbone stiffness) | 0.2 - 0.8 | Backbone Flexibility | Lower for loop redesign. |

| AlphaFold2 (for ΔΔG) | num_ensemble | 1 - 8 | Confidence in Variant Effect | Increase for disordered regions. |

| EvoEF2 | Distance_Cutoff (Å) | 8.0 - 12.0 | Interaction Network | Widen for long-range interactions. |

| PROSS Stability Protocol | mutate_proba | 0.05 - 0.15 | Exploration vs. Exploitation | Higher for radical sequence space search. |

| Deep Mutational Scan (DMS) | learning_rate (ML model) | 1e-4 - 1e-3 | Prediction Accuracy | Lower for fine-tuning on experimental data. |
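One simple way to explore the ranges in Table 1 is random search: draw candidate parameter sets uniformly within each range and keep the one that scores best. The sketch below uses the three Rosetta weight ranges from the table; the sampler, the selection helper, and in particular `toy_score` are hypothetical placeholders, since the real score would come from a design run or an experimental readout such as ΔTm.

```python
import random

# Ranges taken from Table 1 (Rosetta energy function weights).
PARAM_RANGES = {
    "fa_atr": (0.80, 1.20),
    "fa_rep": (0.40, 0.80),
    "hbond_sr_bb": (1.0, 2.0),
}

def sample_parameter_sets(ranges, n, seed=0):
    """Draw `n` parameter sets uniformly at random within the given ranges."""
    rng = random.Random(seed)
    return [
        {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        for _ in range(n)
    ]

def best_parameter_set(candidates, score_fn):
    """Return the candidate with the highest score."""
    return max(candidates, key=score_fn)

def toy_score(params):
    """Placeholder objective (NOT a real energy or assay): prefers the
    midpoint of every range. Replace with a measured or computed score."""
    return -sum(
        (params[name] - (lo + hi) / 2) ** 2
        for name, (lo, hi) in PARAM_RANGES.items()
    )

candidates = sample_parameter_sets(PARAM_RANGES, n=25)
best = best_parameter_set(candidates, toy_score)
print(best)
```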

Experimental Protocols for Parameter Validation

The following protocols are standard in the CAPE challenge for validating parameter sets.

Protocol 3.1: High-Throughput Thermostability Assay (Validating fa_atr, fa_rep)

Objective: Quantify ΔTm (change in melting temperature) for designed variants.

Materials: Purified wild-type and designed protein variants, SYPRO Orange dye, real-time PCR instrument.

Method:

- Prepare 20 µL reactions containing 5 µM protein, 5X SYPRO Orange in PBS.

- Ramp the temperature from 25°C to 95°C at a rate of 1°C/min.

- Monitor fluorescence (excitation/emission ~470/570 nm).

- Fit fluorescence vs. temperature data to a Boltzmann sigmoidal curve to determine Tm.

- Calculate ΔTm = Tm(variant) - Tm(wild-type). A successful parameter set should yield designs with ΔTm > +2°C.
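The Boltzmann fit and the ΔTm criterion can be sketched without external libraries. For a fixed Tm and slope, the sigmoid is linear in its lower and upper plateaus, so the sketch grid-searches Tm and slope and solves the plateaus by ordinary least squares. This is an illustrative stand-in for a proper nonlinear fit; the function names, slope grid, and synthetic curves are assumptions, and the data are assumed to be truncated at the fluorescence maximum.

```python
import math

def boltzmann(t, f_min, f_max, tm, slope):
    """Boltzmann sigmoid: fluorescence rises from f_min to f_max around tm."""
    return f_min + (f_max - f_min) / (1.0 + math.exp((tm - t) / slope))

def fit_tm(temps, fluor, slopes=(0.5, 1.0, 1.5, 2.0, 3.0, 4.0), step=0.1):
    """Grid-search tm and slope; plateaus follow from linear least squares."""
    n = len(temps)
    mean_f = sum(fluor) / n
    t_lo, t_hi = min(temps), max(temps)
    best_sse, best_tm = math.inf, None
    for i in range(int(round((t_hi - t_lo) / step)) + 1):
        tm = t_lo + i * step
        for slope in slopes:
            g = [1.0 / (1.0 + math.exp((tm - t) / slope)) for t in temps]
            mean_g = sum(g) / n
            sgg = sum((gi - mean_g) ** 2 for gi in g)
            if sgg == 0.0:
                continue
            span = sum((gi - mean_g) * (fi - mean_f) for gi, fi in zip(g, fluor)) / sgg
            f_min = mean_f - span * mean_g
            sse = sum((f_min + span * gi - fi) ** 2 for gi, fi in zip(g, fluor))
            if sse < best_sse:
                best_sse, best_tm = sse, tm
    return best_tm

# Synthetic curves (illustrative values only), sampled every 1 °C.
temps = list(range(25, 96))
wt_curve = [boltzmann(t, 100.0, 900.0, 55.0, 2.0) for t in temps]
var_curve = [boltzmann(t, 100.0, 900.0, 58.3, 2.0) for t in temps]

tm_wt = fit_tm(temps, wt_curve)
tm_variant = fit_tm(temps, var_curve)
delta_tm = tm_variant - tm_wt
is_success = delta_tm > 2.0   # criterion from Protocol 3.1
print(f"dTm = {delta_tm:+.1f} °C, success = {is_success}")
```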

Protocol 3.2: Surface Plasmon Resonance (SPR) for Binding Affinity (Validating hbond and electrostatic weights)

Objective: Measure KD (dissociation constant) for designed binding proteins.

Materials: Biacore or Nicoya SPR system, ligand-immobilized sensor chip, running buffer (e.g., HBS-EP).

Method:

- Immobilize ligand on a CM5 chip via amine coupling.

- Inject analyte (designed protein) at 5 concentrations (e.g., 1 nM to 1 µM) at 30 µL/min.

- Record association (60 s) and dissociation (120 s) phases.

- Regenerate surface with 10 mM glycine-HCl (pH 2.0).

- Fit sensorgrams to a 1:1 Langmuir binding model. Target: KD improved by >10-fold versus baseline design.
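For orientation, the 1:1 Langmuir model describes the association phase as R(t) = Req(1 - exp(-(ka·C + kd)·t)) with Req = Rmax·C/(C + KD), and the dissociation phase as R(t) = R0·exp(-kd·t). The sketch below simulates one sensorgram with the 60 s and 120 s phases from the protocol and recovers kd from the dissociation phase by log-linear regression. All rate constants and function names are illustrative assumptions; instrument software typically performs a global fit across all concentrations instead.

```python
import math

def association(t, conc, ka, kd, rmax):
    """Response during analyte injection under a 1:1 Langmuir model."""
    k_obs = ka * conc + kd
    r_eq = rmax * conc / (conc + kd / ka)
    return r_eq * (1.0 - math.exp(-k_obs * t))

def dissociation(t, r0, kd):
    """Response after the injection ends (buffer only)."""
    return r0 * math.exp(-kd * t)

def estimate_kd_rate(times, responses):
    """kd from the least-squares slope of ln(R) versus t (dissociation phase)."""
    logs = [math.log(r) for r in responses]
    n = len(times)
    mean_t, mean_l = sum(times) / n, sum(logs) / n
    slope = (sum((t - mean_t) * (l - mean_l) for t, l in zip(times, logs))
             / sum((t - mean_t) ** 2 for t in times))
    return -slope

def fold_improvement(kd_baseline, kd_design):
    """Values above 10 meet the >10-fold KD target."""
    return kd_baseline / kd_design

# Illustrative parameters: ka in 1/(M*s), kd in 1/s, conc in M, rmax in RU.
ka, kd, conc, rmax = 1.0e5, 1.0e-2, 100e-9, 100.0
r0 = association(60.0, conc, ka, kd, rmax)          # end of 60 s association
diss_times = list(range(0, 121, 5))                 # 120 s dissociation
diss_resp = [dissociation(t, r0, kd) for t in diss_times]

kd_est = estimate_kd_rate(diss_times, diss_resp)
improvement = fold_improvement(kd_baseline=2.0e-6, kd_design=kd_est / ka)
print(f"kd = {kd_est:.3e} 1/s, fold improvement = {improvement:.1f}")
```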

Protocol 3.3: Kinetic Assay for Catalytic Efficiency (kcat/KM)

Objective: Assess enzymatic activity of designed variants.

Materials: Substrate, purified enzyme, plate reader, appropriate buffer.

Method:

- Perform initial rate experiments with fixed enzyme concentration and varying [S] (0.2-5x KM).

- Monitor product formation spectrophotometrically or fluorometrically for 2-5 minutes.

- Plot initial velocity (v0) against [S] and fit to the Michaelis-Menten equation: v0 = (Vmax * [S]) / (KM + [S]).

- Calculate kcat = Vmax / [Enzyme]. Success criterion: (kcat/KM)variant / (kcat/KM)WT > 1.5.
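The Michaelis-Menten fit and the success criterion can be sketched as follows. For a fixed KM the best-fitting Vmax has a closed form, so the sketch scans a grid of KM values and keeps the pair with the lowest squared error. This is a simple stand-in for a nonlinear least-squares routine; the function name, grid, enzyme concentration, and wild-type value are illustrative assumptions.

```python
def fit_michaelis_menten(substrate, rates, km_grid):
    """Return (KM, Vmax) minimizing squared error of v0 = Vmax*[S]/(KM+[S])."""
    best = None
    for km in km_grid:
        x = [s / (km + s) for s in substrate]
        # For fixed KM, Vmax is the least-squares slope through the origin.
        vmax = sum(xi * vi for xi, vi in zip(x, rates)) / sum(xi * xi for xi in x)
        sse = sum((vmax * xi - vi) ** 2 for xi, vi in zip(x, rates))
        if best is None or sse < best[0]:
            best = (sse, km, vmax)
    return best[1], best[2]

# Synthetic initial rates (illustrative): KM = 50 µM, Vmax = 2.0 µM/s.
substrate = [10.0, 25.0, 50.0, 100.0, 250.0]          # µM, 0.2-5x KM
rates = [2.0 * s / (50.0 + s) for s in substrate]     # µM/s
km_grid = [1.0 + 0.5 * i for i in range(400)]         # 1 to 200.5 µM

km_fit, vmax_fit = fit_michaelis_menten(substrate, rates, km_grid)
enzyme_conc = 0.01                                    # µM, assumed
kcat = vmax_fit / enzyme_conc                         # 1/s
efficiency = kcat / km_fit                            # 1/(µM*s)

wt_efficiency = 2.0                                   # assumed wild-type value
is_success = efficiency / wt_efficiency > 1.5         # criterion from Protocol 3.3
print(f"KM = {km_fit:.1f} µM, kcat = {kcat:.0f} 1/s, success = {is_success}")
```

The precision of KM is limited by the grid spacing, so a finer grid or a local refinement around the best value may be worthwhile for noisy data.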

Visualization of Workflows and Relationships

Diagram Title: Algorithm Parameter Optimization Feedback Loop

Diagram Title: Mapping Objectives to Algorithm Parameters

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents for Parameter Validation Experiments

| Reagent/Material | Supplier Examples | Function in Validation | Critical Note |

|---|---|---|---|

| SYPRO Orange Protein Gel Stain | Thermo Fisher, Sigma-Aldrich | Fluorescent dye for thermal shift assays (Protocol 3.1). | Use at 5-10X final concentration; light sensitive. |

| Series S Sensor Chip CM5 | Cytiva (Biacore) | Carboxymethylated dextran surface for ligand immobilization in SPR (Protocol 3.2). | Requires amine coupling kit (EDC/NHS). |

| HBS-EP+ Buffer (10X) | Cytiva, Teknova | Running buffer for SPR to minimize non-specific binding. | Must be filtered and degassed before use. |

| Precision Plus Protein Standards | Bio-Rad | Molecular weight markers for SDS-PAGE analysis of purified designs. | Essential for confirming protein integrity pre-assay. |